
| No. | Operating system | Version | Role |
| --- | --- | --- | --- |
| 1 | RHEL | 6.6-x86_64 | RAC node 1, DNS server, YUM server |
| 2 | RHEL | 6.6-x86_64 | RAC node 2 |
| 3 | Openfiler | 2.99.1-x86_64 | Shared storage server |

| Node | Hostname | Type | IP Address | Interface | Resolved by |
| --- | --- | --- | --- | --- | --- |
| rac1 | rac1 | Public IP | 192.168.230.129 | eth0 | HOST FILE |
| rac1 | rac1-vip | Virtual IP | 192.168.230.139 | eth0:1 | HOST FILE |
| rac1 | rac1-priv | Private IP | 172.16.0.11 | eth1 | HOST FILE |
| rac2 | rac2 | Public IP | 192.168.230.130 | eth0 | HOST FILE |
| rac2 | rac2-vip | Virtual IP | 192.168.230.140 | eth0:1 | HOST FILE |
| rac2 | rac2-priv | Private IP | 172.16.0.12 | eth1 | HOST FILE |
| | rac-scan | SCAN IP | 192.168.230.150 | eth0:2 | HOST FILE, DNS |
| openfiler | openfiler | | 192.168.230.136 | eth0 | HOST FILE |

| No. | Software component | User | Primary group | Secondary groups | Base_home | Oracle_home |
| --- | --- | --- | --- | --- | --- | --- |
| 1 | Grid infrastructure | grid | oinstall | asmadmin asmdba asmoper | /u01/app/grid | /u01/app/11.2.0/grid |
| 2 | Oracle RAC | oracle | oinstall | asmdba dba oper | /u01/app/oracle | /u01/app/oracle/product/11.2.0/dbhome_1 |

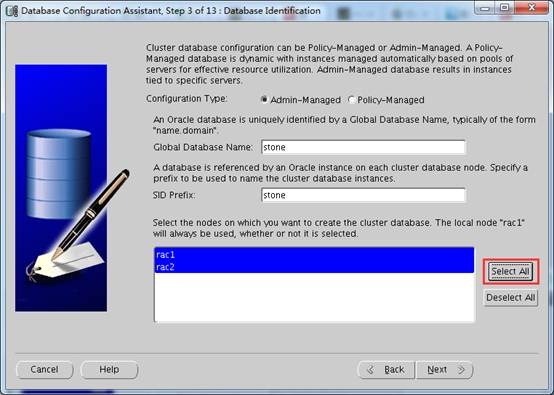

| No. | Node | Instance name | Database name |
| --- | --- | --- | --- |
| 1 | rac1 | stone1 | stone |
| 2 | rac2 | stone2 | stone |

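| No. | Purpose | File system | Size | Name | Redundancy | Openfiler volume name |
| --- | --- | --- | --- | --- | --- | --- |
| 1 | Data disk | ASM | 20G | +DATA | External | Lv1 |
| 2 | Recovery disk | ASM | 8G | +FRA | External | Lv2 |
| 3 | Voting disk | ASM | 2G | +CRS | External | Lv3 |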

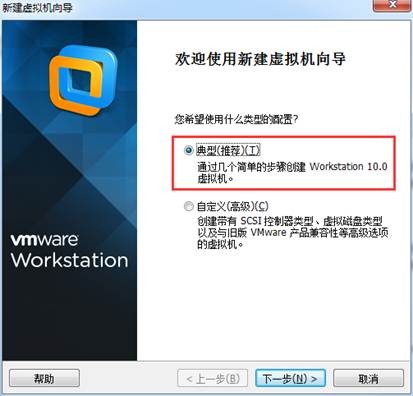

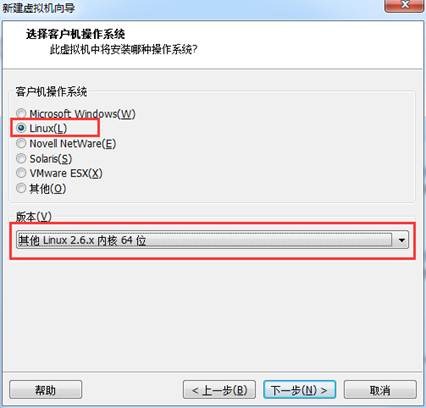

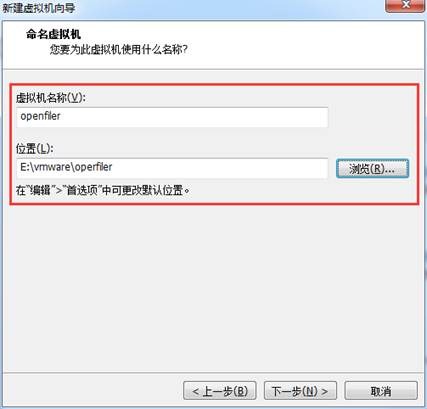

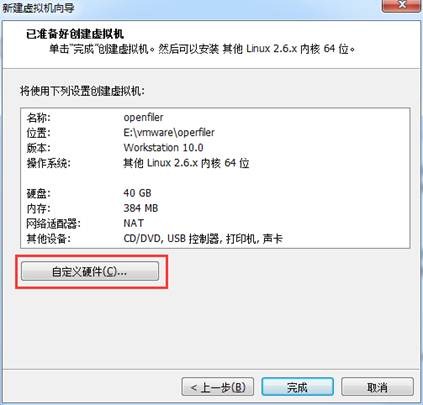

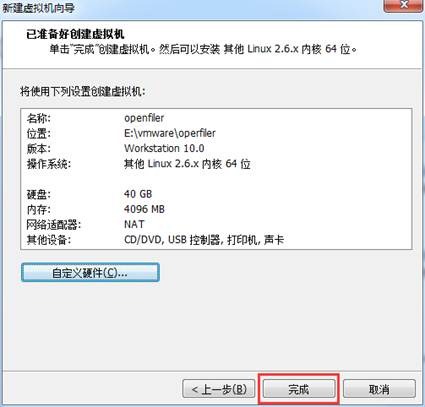

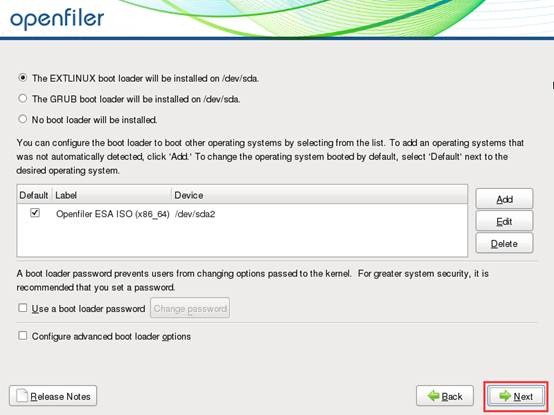

在openfiler官方网站http://www.openfiler.com/community/download 下载安装镜像,然后新建虚拟机。

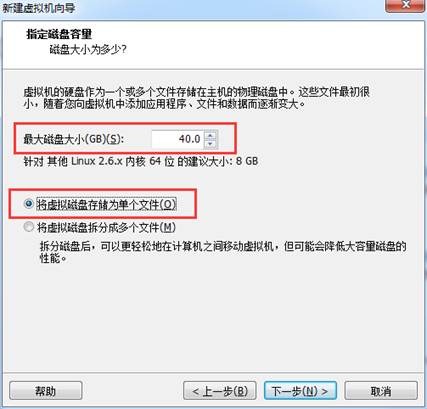

分配40G的硬盘。

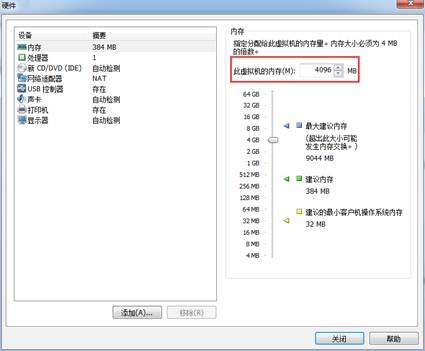

分配内存,尽可能大一些。

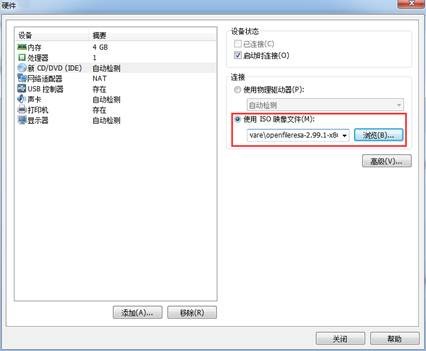

为光驱指定下载的镜像文件。

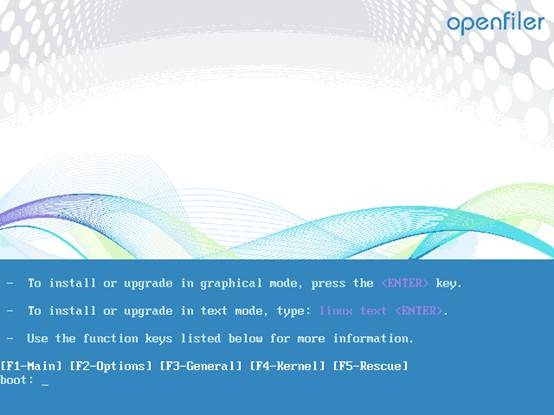

开启虚拟机。

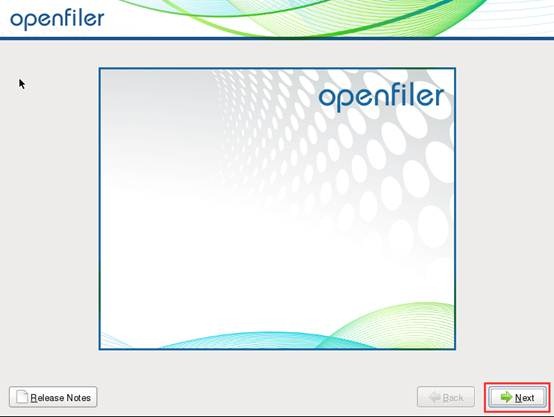

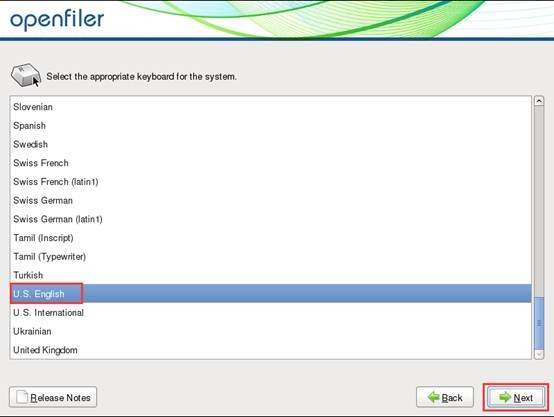

开始安装。

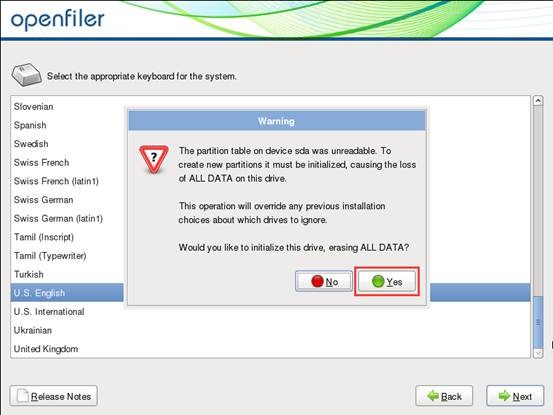

提示分配给openfiler的磁盘将会被初始化,进行确认。

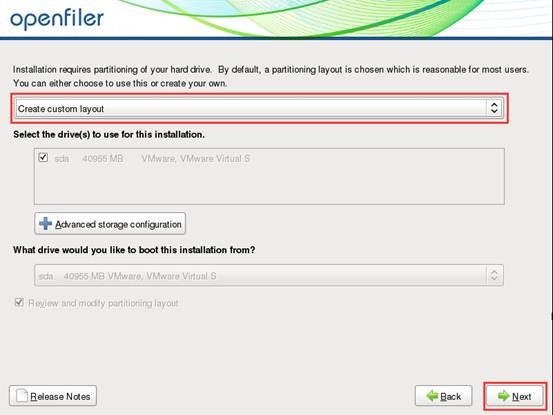

选择自定义分区。

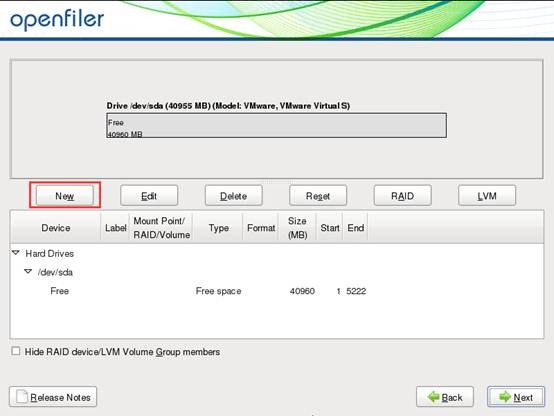

新建分区。

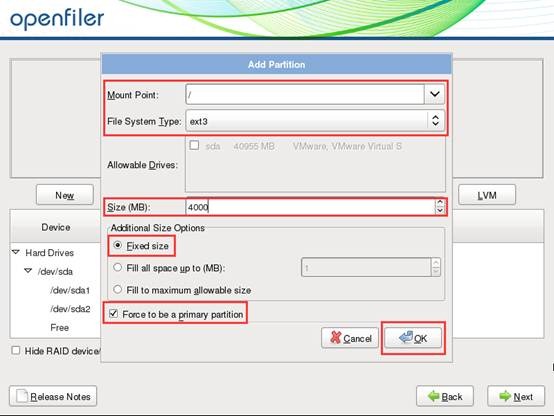

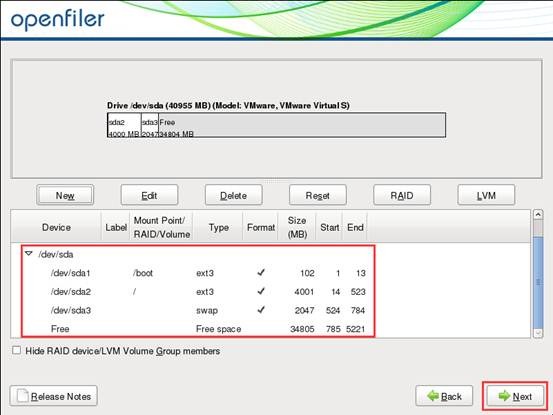

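Create the /boot partition; 100M is typical.

Create the swap partition; 2G is enough.

Create the / (root) partition, which holds the Openfiler installation; 4G is enough.

This leaves a little over 30G, which is used later for the logical volumes.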
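The sizing above can be checked with simple arithmetic. The following is a minimal sketch, assuming 1G = 1024M and ignoring partition-table and file-system overhead; the function name `remaining_mb` is hypothetical and not part of any tool used here.

```shell
#!/bin/bash
# remaining_mb DISK_MB PART_MB... : print the space left on a disk after
# subtracting the size of each partition (all values in megabytes).
remaining_mb() {
    local left=$1
    shift
    local part
    for part in "$@"; do
        left=$((left - part))
    done
    echo "$left"
}

# 40G disk minus /boot (100M), swap (2G) and / (4G)
free_mb=$(remaining_mb $((40 * 1024)) 100 $((2 * 1024)) $((4 * 1024)))

# The three logical volumes planned for ASM: 20G + 8G + 2G
needed_mb=$(( (20 + 8 + 2) * 1024 ))

echo "free: ${free_mb}M, needed for logical volumes: ${needed_mb}M"
```

Under these assumptions the free space exceeds what the three logical volumes need, which matches the "a little over 30G" estimate.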

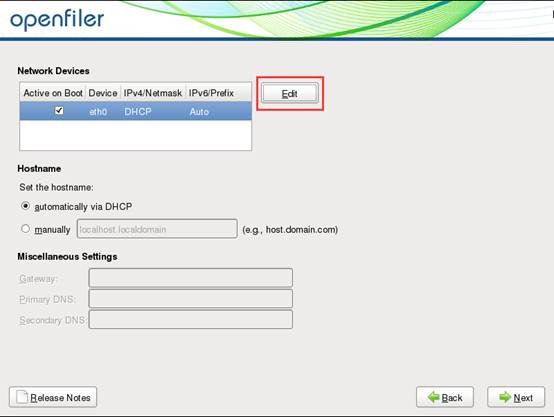

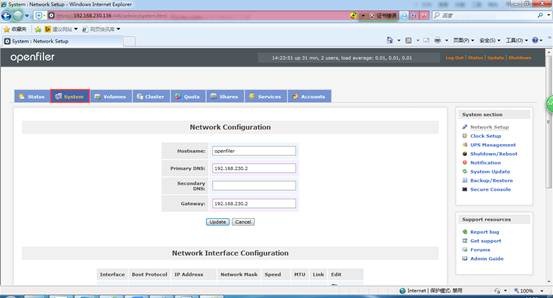

配置网络。

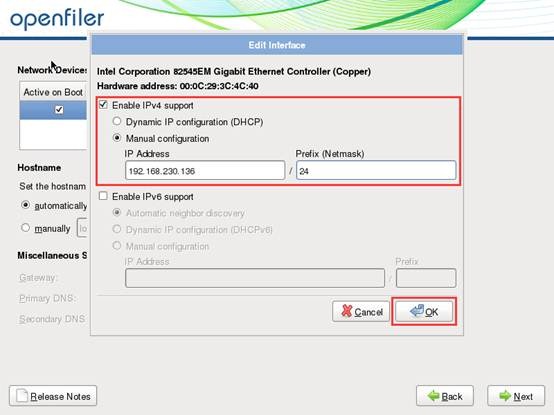

手动指定IP地址。

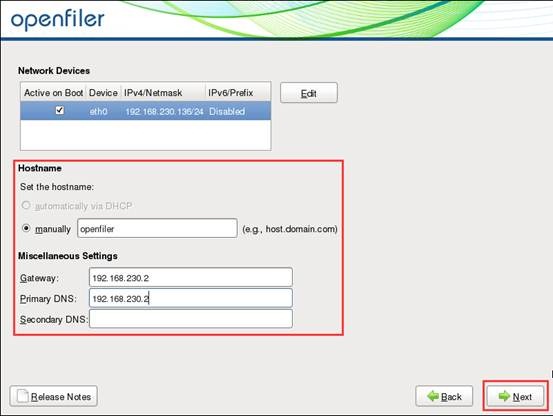

设置主机名、网关和DNS。

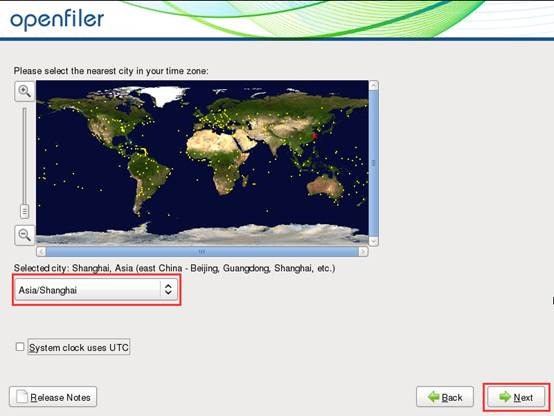

指定时区。

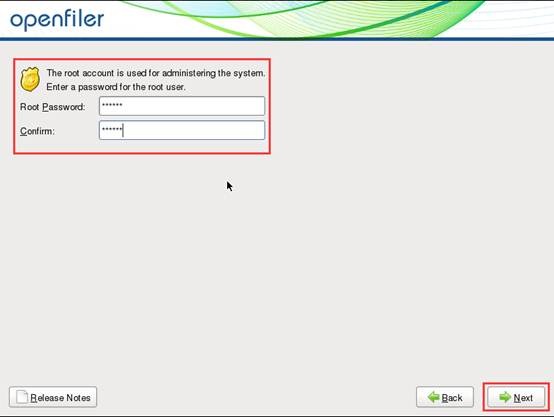

设置root密码。

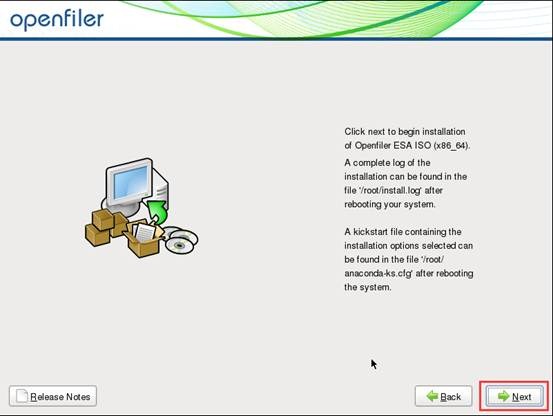

开始安装。

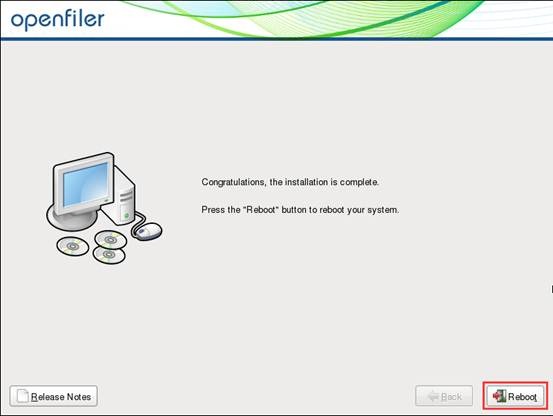

安装完成后重启。

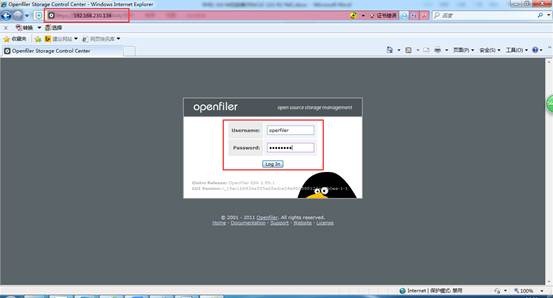

重启完成后,提示管理地址为https://192.168.230.136:446/。

使用浏览器访问https://192.168.230.136:446/,输入Openfiler的默认用户名为openfiler,密码为password。

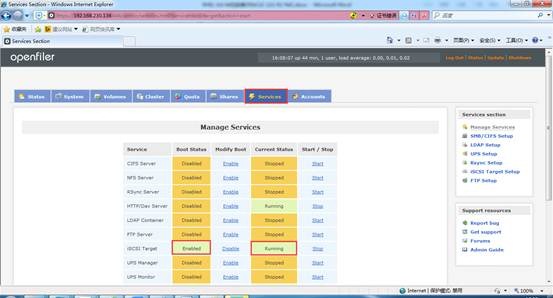

在安装完Openfiler之后,iSCSI服务默认是停止的,需要启动该服务。进入“Services”界面,在“iSCSI Target”后面单击“Enable”,启动该服务并设置在开机时自动启动。

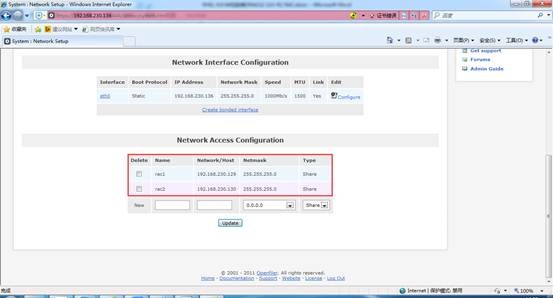

设置可以访问openfiler的主机,

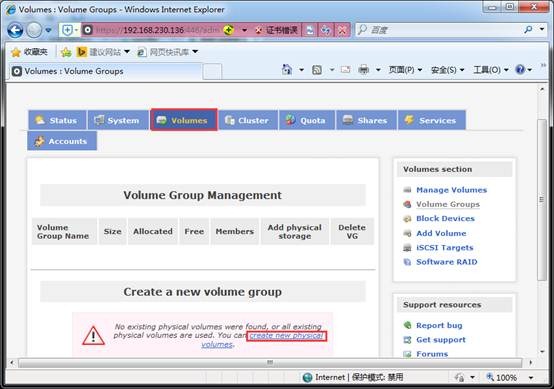

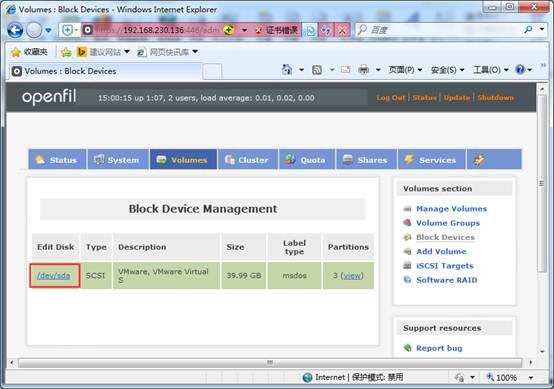

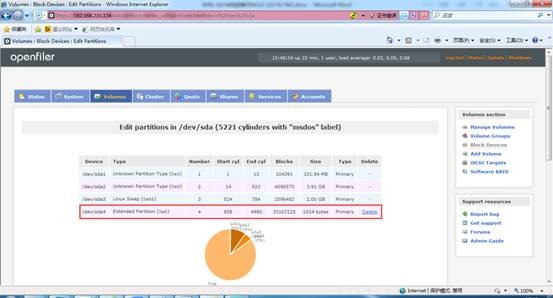

创建物理卷。

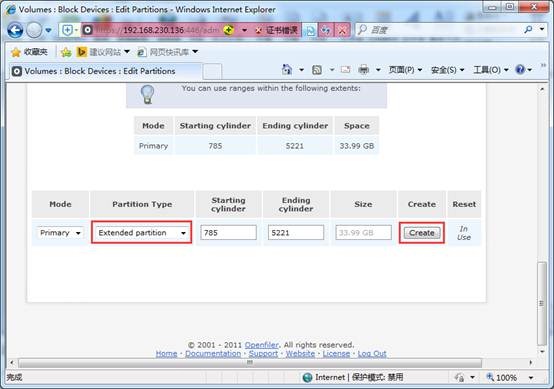

先创建一个扩展分区。

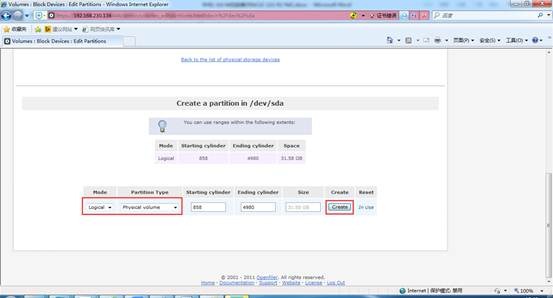

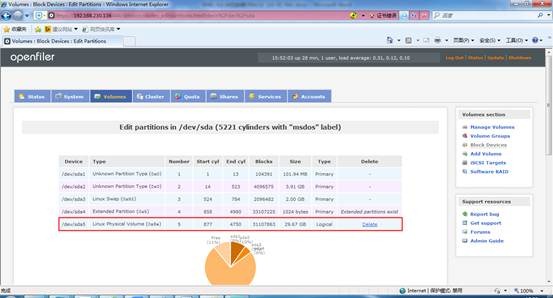

再在扩展分区下创建物理卷。

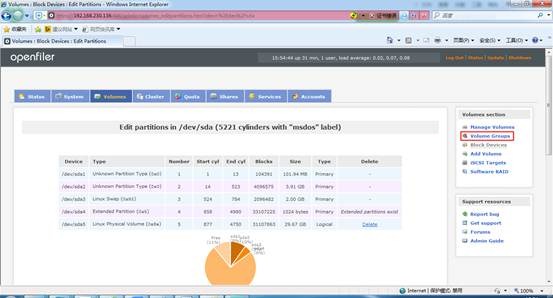

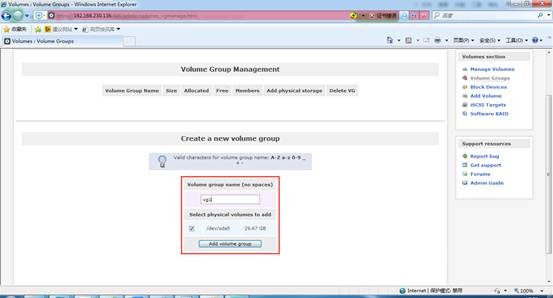

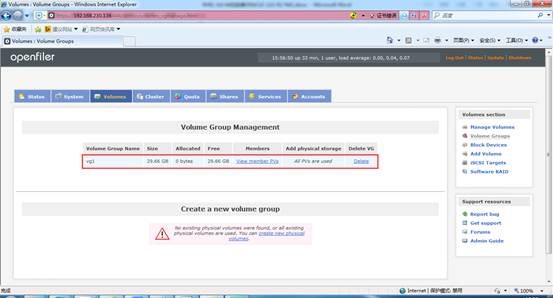

创建卷组。

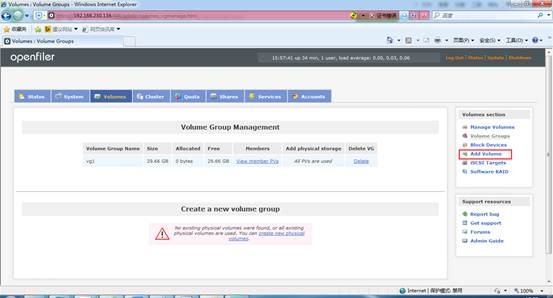

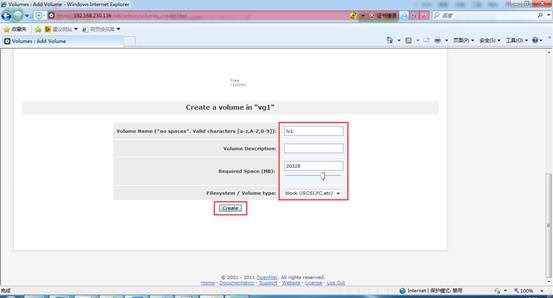

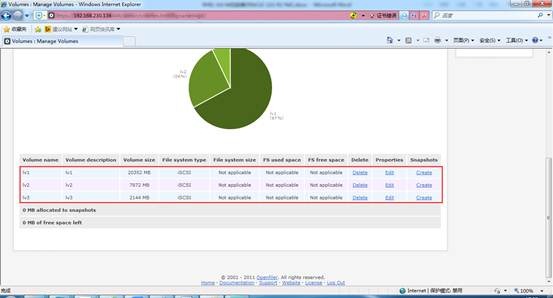

创建逻辑卷。

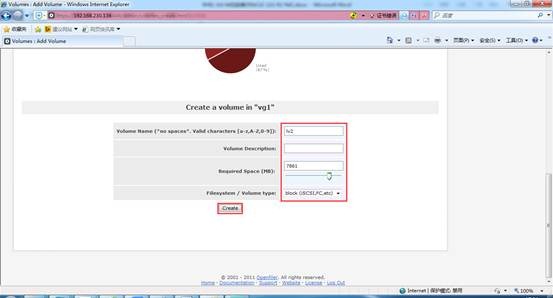

创建逻辑卷1,分配20G。

创建逻辑卷2,分配8G。

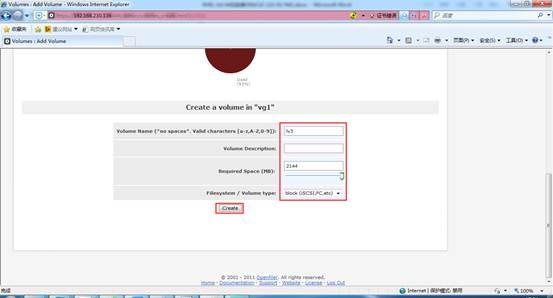

创建逻辑卷3,分配2G。

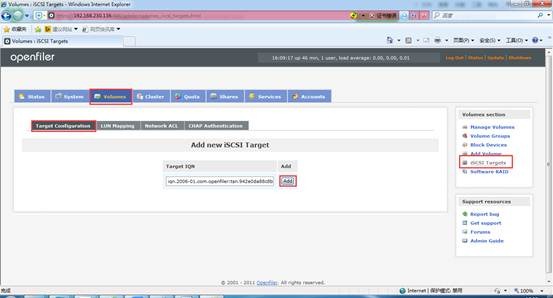

配置iSCSI Target。

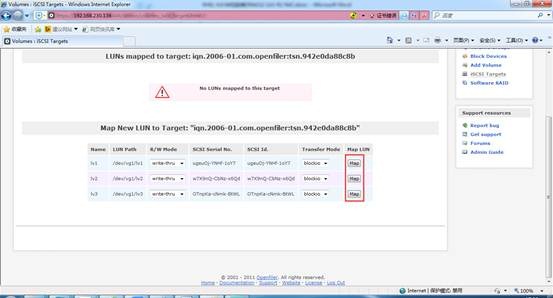

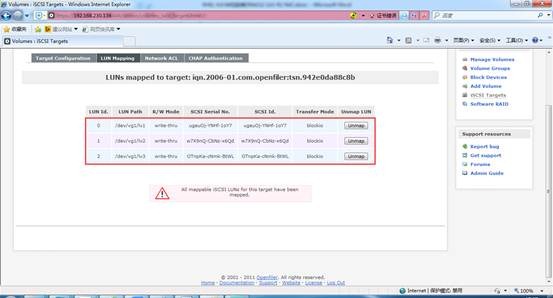

将三个逻辑卷映射给iSCSI Target。

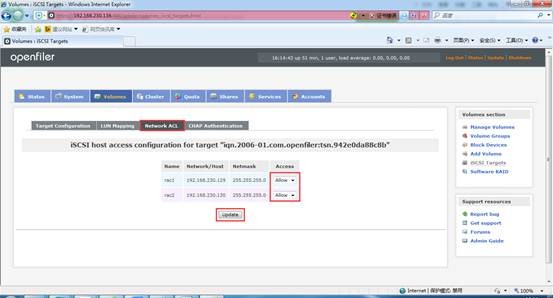

允许可访问openfiler的主机使用该iSCSI Target。

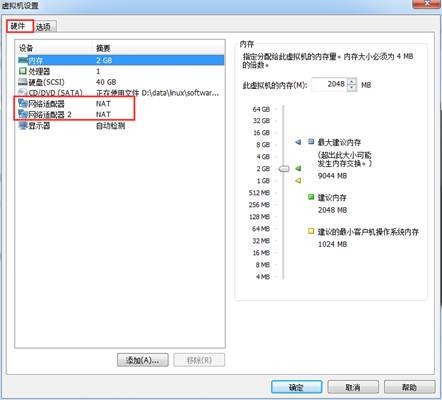

分别创建2个虚拟机,具体创建过程与创建openfiler虚拟机类似(在选择客户机操作系统的时候选择Red Hat Enterprise Linux 6 64位),也可以先创建一个模版,再进行克隆,可以参考http://blog.itpub.net/28536251/viewspace-1455365/。此处不再重复,创建结果如下:

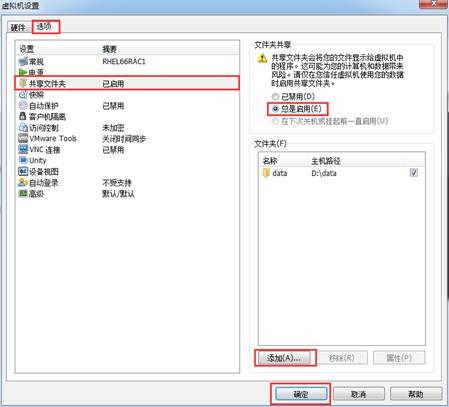

建议启用并添加共享文件夹,这样就不需要将oracle安装文件上传到linux系统上面去了。

分别安装2个虚拟机,具体安装过程与安装openfiler虚拟机类似,如果是通过模版进行的克隆,需要修改网络配置,具体可以参考http://blog.itpub.net/28536251/viewspace-1444892/。

安装完成后,建议安装VMware Tools,以提高与VMware的兼容性。

为简化部署,需要对安装完成的虚拟机进行一些设置。

(1)关闭两台虚拟机的防火墙

[root@bogon ~]# /etc/init.d/iptables stop

[root@bogon ~]# chkconfig iptables off

(2) Disable SELinux on both virtual machines by changing SELINUX=enforcing to SELINUX=disabled.

[root@bogon ~]# vim /etc/selinux/config

[root@bogon ~]# cat /etc/selinux/config

# This file controls the state of SELinux on the system.

# SELINUX= can take one of these three values:

# enforcing - SELinux security policy is enforced.

# permissive - SELinux prints warnings instead of enforcing.

# disabled - No SELinux policy is loaded.

SELINUX=disabled

# SELINUXTYPE= can take one of these two values:

# targeted - Targeted processes are protected,

# mls - Multi Level Security protection.

SELINUXTYPE=targeted
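The same edit can be made without opening an editor. This is a minimal sketch, assuming GNU sed (as shipped with RHEL 6); the function name `set_selinux_disabled` is hypothetical, and the demonstration works on a scratch file rather than the real /etc/selinux/config.

```shell
#!/bin/bash
# set_selinux_disabled FILE : rewrite the SELINUX= line in FILE to
# SELINUX=disabled. Comment lines and the SELINUXTYPE= line do not match
# the pattern and are left untouched.
set_selinux_disabled() {
    sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$1"
}

# Demonstration on a scratch copy rather than the real configuration file.
demo=$(mktemp)
printf '%s\n' \
    '# SELINUX= can take one of these three values:' \
    'SELINUX=enforcing' \
    'SELINUXTYPE=targeted' > "$demo"

set_selinux_disabled "$demo"
cat "$demo"
```

On a real node the function would be pointed at /etc/selinux/config as root; as in the manual procedure, the change takes effect after a reboot.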

(3) Change the hostname

Set the hostname of node 1:

[root@bogon ~]# vim /etc/sysconfig/network

[root@bogon ~]# cat /etc/sysconfig/network

NETWORKING=yes

HOSTNAME=rac1

Set the hostname of node 2:

[root@bogon ~]# vim /etc/sysconfig/network

[root@bogon ~]# cat /etc/sysconfig/network

NETWORKING=yes

HOSTNAME=rac2

(4) Reboot the virtual machines and verify

[root@rac1 ~]# /etc/init.d/iptables status

iptables: Firewall is not running.

[root@rac1 ~]# getenforce

Disabled

[root@rac1 ~]# hostname

rac1

Install and configure a LAN YUM repository on node 1; see http://blog.itpub.net/28536251/viewspace-1454136/. The following script can also be used to create it; adjust it to your environment.

[root@rac1 ~]# cat local_yum.sh

#!/bin/bash

mount /dev/cdrom /media/

cd /media/Packages/

rpm -ivh vsftpd*

chkconfig vsftpd on

cd /media/

cp -rv * /var/ftp/pub/

cd /var/ftp/pub/

rm -rf *.html

rm -rf repodata/TRANS.TBL

cd /media/Packages/

rpm -ivh deltarpm*

rpm -ivh python-deltarpm*

rpm -ivh createrepo*

createrepo -g /var/ftp/pub/repodata/*-comps-rhel6-Server.xml /var/ftp/pub/

/etc/init.d/vsftpd restart

/etc/init.d/iptables stop

cd /etc/yum.repos.d/

mkdir bak

mv * bak/

touch rhel66.repo

cat << EOF > rhel66.repo

#############################

[rhel6]

name=rhel6

baseurl=ftp://192.168.230.129/pub/

enabled=1

gpgcheck=0

#############################

EOF

yum makecache

Configure the YUM client on node 2. The script below can be run directly; adjust it to your environment.

[root@rac2 ~]# cat client_yum.sh

#!/bin/bash

mount /dev/cdrom /media/

cd /media/Packages/

rpm -ivh vsftpd*

chkconfig vsftpd on

/etc/init.d/vsftpd restart

/etc/init.d/iptables stop

cd /etc/yum.repos.d/

mkdir bak

mv * bak/

touch rhel66.repo

cat << EOF > rhel66.repo

#############################

[rhel6]

name=rhel6

baseurl=ftp://192.168.230.129/pub/

enabled=1

gpgcheck=0

#############################

EOF

yum makecache
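Both scripts embed the same repository definition. One way to avoid repeating it is a small function that takes the server address as a parameter. This is only a sketch: the name `write_repo_file` is hypothetical, and the demonstration writes to a scratch file instead of /etc/yum.repos.d/rhel66.repo.

```shell
#!/bin/bash
# write_repo_file SERVER_IP OUT_FILE : write a yum repository definition
# that points at the FTP repository published by SERVER_IP.
write_repo_file() {
    local server=$1 out=$2
    cat > "$out" << EOF
[rhel6]
name=rhel6
baseurl=ftp://${server}/pub/
enabled=1
gpgcheck=0
EOF
}

# Demonstration: node 1 (192.168.230.129) is the YUM server in this setup.
repo_demo=$(mktemp)
write_repo_file 192.168.230.129 "$repo_demo"
cat "$repo_demo"
```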

(1) Install the packages

[root@rac1 ~]# yum install bind bind-chroot caching-nameserver

(2) Edit the configuration file, changing localhost and 127.0.0.1 to any.

[root@rac1 ~]# cp /etc/named.conf /etc/named.conf.bak

[root@rac1 ~]# vim /etc/named.conf

[root@rac1 ~]# cat /etc/named.conf

//

// named.conf

//

// Provided by Red Hat bind package to configure the ISC BIND named(8) DNS

// server as a caching only nameserver (as a localhost DNS resolver only).

//

// See /usr/share/doc/bind*/sample/ for example named configuration files.

//

options {

listen-on port 53 { any; };

listen-on-v6 port 53 { ::1; };

directory "/var/named";

dump-file "/var/named/data/cache_dump.db";

statistics-file "/var/named/data/named_stats.txt";

memstatistics-file "/var/named/data/named_mem_stats.txt";

allow-query { any; };

recursion yes;

dnssec-enable yes;

dnssec-validation yes;

dnssec-lookaside auto;

/* Path to ISC DLV key */

bindkeys-file "/etc/named.iscdlv.key";

managed-keys-directory "/var/named/dynamic";

};

logging {

channel default_debug {

file "data/named.run";

severity dynamic;

};

};

zone "." IN {

type hint;

file "named.ca";

};

include "/etc/named.rfc1912.zones";

include "/etc/named.root.key";

(3) Configure the forward and reverse zones that resolve the SCAN IP by appending the following to named.rfc1912.zones

zone "rac-scan" IN {

type master;

file "rac-scan.zone";

allow-update { none; };

};

zone "230.168.192.in-addr.arpa." IN {

type master;

file "230.168.192.in-addr.arpa";

allow-update { none; };

};

[root@rac1 ~]# cp /etc/named.rfc1912.zones /etc/named.rfc1912.zones.bak

[root@rac1 ~]# vim /etc/named.rfc1912.zones

[root@rac1 ~]# tail -11 /etc/named.rfc1912.zones

zone "rac-scan" IN {

type master;

file "rac-scan.zone";

allow-update { none; };

};

zone "230.168.192.in-addr.arpa." IN {

type master;

file "230.168.192.in-addr.arpa";

allow-update { none; };

};

Comment out the rest of the file.

(4) Create the forward and reverse zone data files. In the reverse zone file, add the line: 150 IN PTR rac-scan.

[root@rac1 ~]# cd /var/named/

[root@rac1 named]# cp -p named.localhost 230.168.192.in-addr.arpa

[root@rac1 named]# vim 230.168.192.in-addr.arpa

[root@rac1 named]# tail -1 230.168.192.in-addr.arpa

150 IN PTR rac-scan.
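The reverse zone name and the PTR record both follow mechanically from the SCAN address: the first three octets are reversed to form the zone name, and the last octet becomes the record owner. A minimal sketch, assuming a /24 network; the function names `reverse_zone` and `ptr_record` are hypothetical helpers, not BIND tools.

```shell
#!/bin/bash
# reverse_zone IPV4 : print the in-addr.arpa zone name for the /24 network
# that contains IPV4 (first three octets, reversed).
reverse_zone() {
    echo "$1" | awk -F. '{ print $3 "." $2 "." $1 ".in-addr.arpa" }'
}

# ptr_record IPV4 NAME : print the PTR line for the reverse zone file,
# using the last octet as the owner and NAME as the fully qualified target.
ptr_record() {
    echo "$1" | awk -F. -v name="$2" '{ print $4 " IN PTR " name "." }'
}

reverse_zone 192.168.230.150
ptr_record 192.168.230.150 rac-scan
```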

In the forward zone file, add the line: rac-scan IN A 192.168.230.150

[root@rac1 ~]# cd /var/named/

[root@rac1 named]# cp -p named.localhost rac-scan.zone

[root@rac1 named]# vim rac-scan.zone

[root@rac1 named]# cat rac-scan.zone

$TTL 86400

@ IN SOA localhost root (

42 ; serial (d. adams)

3H ; refresh

15M ; retry

1W ; expiry

1D ) ; minimum

IN NS localhost

localhost IN A 127.0.0.1

rac-scan IN A 192.168.230.150

Copy the two files above, together with named.ca, to /var/named/chroot/var/named/.

[root@rac1 named]# cp -a rac-scan.zone chroot/var/named/

[root@rac1 named]# cp -a 230.168.192.in-addr.arpa chroot/var/named/

[root@rac1 named]# cp -a named.ca chroot/var/named/

[root@rac1 named]# ll /var/named/chroot/var/named/

total 12

-rw-r----- 1 root named 183 Jul 11 17:07 230.168.192.in-addr.arpa

-rw-r----- 1 root named 2075 Apr 23 2014 named.ca

-rw-r--r-- 1 root named 524 Jul 11 17:07 rac-scan.zone

(5) Check that the files are configured correctly

[root@rac1 named]# named-checkzone rac-scan rac-scan.zone

zone rac-scan/IN: loaded serial 42

OK

[root@rac1 named]# named-checkzone rac-scan 230.168.192.in-addr.arpa

zone rac-scan/IN: loaded serial 0

OK

(6) Restart the DNS service

[root@rac1 named]# /etc/init.d/named restart

Stopping named: . [ OK ]

Starting named: [ OK ]

(1) NIC eth0 on node 1

[root@rac1 ~]# vim /etc/sysconfig/network-scripts/ifcfg-eth0

[root@rac1 ~]# cat /etc/sysconfig/network-scripts/ifcfg-eth0

DEVICE=eth0

HWADDR=00:0c:29:17:b8:04

TYPE=Ethernet

ONBOOT=yes

NM_CONTROLLED=yes

BOOTPROTO=none

IPADDR=192.168.230.129

NETMASK=255.255.255.0

GATEWAY=192.168.230.2

ARPCHECK=no

(2) NIC eth1

[root@rac1 ~]# vim /etc/sysconfig/network-scripts/ifcfg-eth1

[root@rac1 ~]# cat /etc/sysconfig/network-scripts/ifcfg-eth1

DEVICE=eth1

HWADDR=00:0C:29:17:B8:0E

TYPE=Ethernet

ONBOOT=yes

NM_CONTROLLED=yes

BOOTPROTO=none

IPADDR=172.16.0.11

NETMASK=255.255.255.0

ARPCHECK=no

(3) Address mapping

[root@rac1 ~]# vim /etc/hosts

[root@rac1 ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

#Public

192.168.230.129 rac1

192.168.230.130 rac2

#Virtual

192.168.230.139 rac1-vip

192.168.230.140 rac2-vip

#Private

172.16.0.11 rac1-priv

172.16.0.12 rac2-priv

#SCAN

192.168.230.150 rac-scan
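Because every name in the network plan must appear in /etc/hosts on both nodes, a quick check for missing entries can save a failed installer run later. The sketch below is one possible check; `missing_hosts` is a hypothetical helper, and the demonstration uses a scratch file with one entry left out on purpose.

```shell
#!/bin/bash
# missing_hosts FILE NAME... : print every NAME that has no entry in FILE.
# A name counts as present when it appears as a whole field after the
# address on a line that is not a comment.
missing_hosts() {
    local file=$1
    shift
    local name
    for name in "$@"; do
        awk -v n="$name" '
            /^[[:space:]]*#/ { next }
            { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
            END { exit (found ? 0 : 1) }
        ' "$file" || echo "$name"
    done
}

# Demonstration: rac1-vip is deliberately absent from this sample.
hosts_demo=$(mktemp)
cat > "$hosts_demo" << 'EOF'
#Public
192.168.230.129 rac1
192.168.230.130 rac2
#SCAN
192.168.230.150 rac-scan
EOF

missing_hosts "$hosts_demo" rac1 rac2 rac1-vip rac-scan
```

On a real node the call would be `missing_hosts /etc/hosts rac1 rac2 rac1-vip rac2-vip rac1-priv rac2-priv rac-scan`; no output means every name is present.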

(4) Configure DNS

[root@rac1 ~]# cat /etc/resolv.conf

# Generated by NetworkManager

search localdomain

nameserver 192.168.230.129

(5) Restart the network

[root@rac1 ~]# /etc/init.d/network restart

Shutting down interface eth0: [ OK ]

Shutting down loopback interface: [ OK ]

Bringing up loopback interface: [ OK ]

Bringing up interface eth0: [ OK ]

Bringing up interface eth1: [ OK ]

(1) NIC eth0 on node 2

[root@rac2 ~]# vim /etc/sysconfig/network-scripts/ifcfg-eth0

[root@rac2 ~]# cat /etc/sysconfig/network-scripts/ifcfg-eth0

DEVICE=eth0

HWADDR=00:0c:29:23:53:1c

TYPE=Ethernet

ONBOOT=yes

NM_CONTROLLED=yes

BOOTPROTO=none

IPADDR=192.168.230.130

NETMASK=255.255.255.0

GATEWAY=192.168.230.2

ARPCHECK=NO

(2) NIC eth1

[root@rac2 ~]# vim /etc/sysconfig/network-scripts/ifcfg-eth1

[root@rac2 ~]# cat /etc/sysconfig/network-scripts/ifcfg-eth1

DEVICE=eth1

HWADDR=00:0C:29:23:53:26

TYPE=Ethernet

ONBOOT=yes

NM_CONTROLLED=yes

BOOTPROTO=none

IPADDR=172.16.0.12

NETMASK=255.255.255.0

ARPCHECK=no

(3) Address mapping

[root@rac2 ~]# vim /etc/hosts

[root@rac2 ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

#Public

192.168.230.129 rac1

192.168.230.130 rac2

#Virtual

192.168.230.139 rac1-vip

192.168.230.140 rac2-vip

#Private

172.16.0.11 rac1-priv

172.16.0.12 rac2-priv

#SCAN

192.168.230.150 rac-scan

(4) Configure DNS

[root@rac2 ~]# cat /etc/resolv.conf

# Generated by NetworkManager

search localdomain

nameserver 192.168.230.129

(5) Restart the network

[root@rac2 ~]# /etc/init.d/network restart

Shutting down interface eth0: [ OK ]

Shutting down loopback interface: [ OK ]

Bringing up loopback interface: [ OK ]

Bringing up interface eth0: [ OK ]

Bringing up interface eth1: [ OK ]

Create the users, groups, and directories with the following script, adjusting it to your environment. Run it on both nodes.

[root@rac1 ~]# vim oracle_ugd.sh

[root@rac1 ~]# cat oracle_ugd.sh

#!/bin/bash

groupadd -g 5000 asmadmin

groupadd -g 5001 asmdba

groupadd -g 5002 asmoper

groupadd -g 6000 oinstall

groupadd -g 6001 dba

groupadd -g 6002 oper

useradd -m -u 1100 -g oinstall -G asmadmin,asmdba,asmoper,dba -d /home/grid -s /bin/bash -c "Grid Infrastructure Owner" grid

useradd -m -u 1101 -g oinstall -G dba,oper,asmdba -d /home/oracle -s /bin/bash -c "Oracle Software Owner" oracle

mkdir -p /u01/app/grid

mkdir -p /u01/app/11.2.0/grid

chown -R grid:oinstall /u01

mkdir -p /u01/app/oracle

chown oracle:oinstall /u01/app/oracle

chmod -R 775 /u01

echo "123456" | passwd --stdin grid

echo "123456" | passwd --stdin oracle
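The script above fails partway if it is run a second time, because groupadd reports an error for a group that already exists. A guard like the following makes the group step repeatable. This is a sketch under stated assumptions: `ensure_group` and the `DRY_RUN` variable are hypothetical, and the demonstration only prints the commands it would run.

```shell
#!/bin/bash
# ensure_group GID NAME : create group NAME with GID unless /etc/group
# already lists it. With DRY_RUN=1 the groupadd command is printed
# instead of executed, so the function can be tried without root.
ensure_group() {
    local gid=$1 name=$2
    if grep -q "^${name}:" /etc/group; then
        echo "group ${name} already exists, skipping"
    elif [ "${DRY_RUN:-0}" = "1" ]; then
        echo "groupadd -g ${gid} ${name}"
    else
        groupadd -g "$gid" "$name"
    fi
}

# Dry run for the ASM groups from the script above; nothing is changed.
DRY_RUN=1
for spec in 5000:asmadmin 5001:asmdba 5002:asmoper; do
    ensure_group "${spec%%:*}" "${spec#*:}"
done
```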

(1) Environment variables for the grid user on node 1

Switch to the grid user and add the following to .bash_profile.

[grid@rac1 ~]$ vim .bash_profile

[grid@rac1 ~]$ source .bash_profile

[grid@rac1 ~]$ tail -6 .bash_profile

#Grid Settings

ORACLE_BASE=/u01/app/grid

ORACLE_HOME=/u01/app/11.2.0/grid

ORACLE_SID=+ASM1

PATH=$ORACLE_HOME/bin:$PATH

export ORACLE_BASE ORACLE_HOME ORACLE_SID PATH

(2) Environment variables for the oracle user

Switch to the oracle user and add the following to .bash_profile.

[oracle@rac1 ~]$ vim .bash_profile

[oracle@rac1 ~]$ source .bash_profile

[oracle@rac1 ~]$ tail -13 .bash_profile

# Oracle Settings

TMP=/tmp; export TMP

TMPDIR=$TMP; export TMPDIR

ORACLE_HOSTNAME=rac1; export ORACLE_HOSTNAME

ORACLE_UNQNAME=stone; export ORACLE_UNQNAME

ORACLE_BASE=/u01/app/oracle; export ORACLE_BASE

ORACLE_HOME=$ORACLE_BASE/product/11.2.0/dbhome_1; export ORACLE_HOME

ORACLE_SID=stone1; export ORACLE_SID

ORACLE_TERM=xterm; export ORACLE_TERM

BASE_PATH=/usr/sbin:$PATH; export BASE_PATH

PATH=$ORACLE_HOME/bin:$BASE_PATH; export PATH

LD_LIBRARY_PATH=$ORACLE_HOME/lib:/lib:/usr/lib; export LD_LIBRARY_PATH

CLASSPATH=$ORACLE_HOME/JRE:$ORACLE_HOME/jlib:$ORACLE_HOME/rdbms/jlib; export CLASSPATH

(1) Environment variables for the grid user on node 2

Switch to the grid user and add the following to .bash_profile.

[grid@rac2 ~]$ vim .bash_profile

[grid@rac2 ~]$ source .bash_profile

[grid@rac2 ~]$ tail -6 .bash_profile

#Grid Settings

ORACLE_BASE=/u01/app/grid

ORACLE_HOME=/u01/app/11.2.0/grid

ORACLE_SID=+ASM2

PATH=$ORACLE_HOME/bin:$PATH

export ORACLE_BASE ORACLE_HOME ORACLE_SID PATH

(2) Environment variables for the oracle user

Switch to the oracle user and add the following to .bash_profile.

[oracle@rac2 ~]$ vim .bash_profile

[oracle@rac2 ~]$ source .bash_profile

[oracle@rac2 ~]$ tail -13 .bash_profile

# Oracle Settings

TMP=/tmp; export TMP

TMPDIR=$TMP; export TMPDIR

ORACLE_HOSTNAME=rac2; export ORACLE_HOSTNAME

ORACLE_UNQNAME=stone; export ORACLE_UNQNAME

ORACLE_BASE=/u01/app/oracle; export ORACLE_BASE

ORACLE_HOME=$ORACLE_BASE/product/11.2.0/dbhome_1; export ORACLE_HOME

ORACLE_SID=stone2; export ORACLE_SID

ORACLE_TERM=xterm; export ORACLE_TERM

BASE_PATH=/usr/sbin:$PATH; export BASE_PATH

PATH=$ORACLE_HOME/bin:$BASE_PATH; export PATH

LD_LIBRARY_PATH=$ORACLE_HOME/lib:/lib:/usr/lib; export LD_LIBRARY_PATH

CLASSPATH=$ORACLE_HOME/JRE:$ORACLE_HOME/jlib:$ORACLE_HOME/rdbms/jlib; export CLASSPATH
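The profiles on the two nodes differ only in the node number appended to the SIDs (and in ORACLE_HOSTNAME). A minimal sketch of deriving that number from the host name, assuming short host names that end in the node number such as rac1 and rac2; `node_number` is a hypothetical helper.

```shell
#!/bin/bash
# node_number HOST : print HOST with its leading non-digit characters
# removed, so rac1 gives 1 and rac2 gives 2.
node_number() {
    echo "$1" | sed 's/^[^0-9]*//'
}

# "stone" is the database name used in this article; +ASM is the prefix
# of the ASM instance names.
host=rac2
n=$(node_number "$host")
echo "grid:   ORACLE_SID=+ASM${n}"
echo "oracle: ORACLE_SID=stone${n}"
```

In a real .bash_profile, `host` could be taken from `hostname -s` so that the same file works on both nodes.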

Run the following commands on both nodes to discover the storage.

[root@rac1 ~]# yum -y install iscsi-initiator-utils

[root@rac1 ~]# chkconfig iscsid on

[root@rac1 ~]# chkconfig iscsi on

[root@rac1 ~]# /etc/init.d/iscsid start

[root@rac1 ~]# /etc/init.d/iscsi start

[root@rac1 ~]# iscsiadm -m discovery -t sendtargets -p 192.168.230.136

Starting iscsid: [ OK ]

iscsiadm: No portals found

iscsiadm reports "No portals found". On the Openfiler host, edit /etc/initiators.deny and comment out its contents.

[root@openfiler ~]# cat /etc/initiators.deny

# PLEASE DO NOT MODIFY THIS CONFIGURATION FILE!

# This configuration file was autogenerated

# by Openfiler. Any manual changes will be overwritten

# Generated at: Fri Jul 10 17:24:59 CST 2015

#iqn.2006-01.com.openfiler:tsn.942e0da88c8b ALL

# End of Openfiler configuration

[root@rac1 ~]# iscsiadm -m discovery -t sendtargets -p 192.168.230.136

192.168.230.136:3260,1 iqn.2006-01.com.openfiler:tsn.942e0da88c8b

[root@rac1 ~]# iscsiadm -m node -T iqn.2006-01.com.openfiler:tsn.942e0da88c8b -p 192.168.230.136 -l

Logging in to [iface: default, target: iqn.2006-01.com.openfiler:tsn.942e0da88c8b, portal: 192.168.230.136,3260] (multiple)

Login to [iface: default, target: iqn.2006-01.com.openfiler:tsn.942e0da88c8b, portal: 192.168.230.136,3260] successful.

[root@rac1 ~]# iscsiadm -m node -T iqn.2006-01.com.openfiler:tsn.942e0da88c8b -p 192.168.230.136 --op update -n node.startup -v automatic

[root@rac1 ~]# cat /proc/partitions

major minor #blocks name

8 0 41943040 sda

8 1 204800 sda1

8 2 4096000 sda2

8 3 37641216 sda3

8 16 20840448 sdb

8 32 8060928 sdc

8 48 2195456 sdd

[root@rac2 ~]# cat /proc/partitions

major minor #blocks name

8 0 41943040 sda

8 1 204800 sda1

8 2 4096000 sda2

8 3 37641216 sda3

8 16 20840448 sdb

8 32 8060928 sdc

8 48 2195456 sdd

The output above shows that both nodes have discovered the three logical volumes on the storage server.
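To compare the two nodes at a glance, the whole disks can be filtered out of the partition table. The following sketch parses /proc/partitions-style input; `list_whole_disks` is a hypothetical helper, and the sample data is abbreviated from the listing above.

```shell
#!/bin/bash
# list_whole_disks FILE : from /proc/partitions-style input, print
# "name size_in_blocks" for every whole sd disk (sdb, sdc, ...) and skip
# partitions such as sda1 as well as the header line.
list_whole_disks() {
    awk '$4 ~ /^sd[a-z]+$/ { print $4, $3 }' "$1"
}

# Demonstration on a saved sample; on a real node pass /proc/partitions.
parts_demo=$(mktemp)
cat > "$parts_demo" << 'EOF'
major minor  #blocks  name

   8        0   41943040 sda
   8        1     204800 sda1
   8       16   20840448 sdb
   8       32    8060928 sdc
   8       48    2195456 sdd
EOF

list_whole_disks "$parts_demo"
```

Running the same command on both nodes and comparing the output confirms that sdb, sdc, and sdd have identical sizes everywhere.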

[root@rac1 ~]# vi /etc/scsi_id.config

[root@rac1 ~]# cat /etc/scsi_id.config

options=--whitelisted --replace-whitespace

Run the following commands to write the logical volume information to the rules file 99-oracle-asmdevices.rules.

for i in b c d;

do

echo "KERNEL==\"sd*\", BUS==\"scsi\", PROGRAM==\"/sbin/scsi_id --whitelisted --replace-whitespace --device=/dev/\$name\", RESULT==\"`/sbin/scsi_id --whitelisted --replace-whitespace --device=/dev/sd$i`\", NAME=\"asm-disk$i\", OWNER=\"grid\", GROUP=\"asmadmin\", MODE=\"0660\"" >> /etc/udev/rules.d/99-oracle-asmdevices.rules

done

[root@rac1 ~]# cat /etc/udev/rules.d/99-oracle-asmdevices.rules

KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id --whitelisted --replace-whitespace --device=/dev/$name", RESULT=="14f504e46494c4552756765754f6a2d594e48662d316f5937", NAME="asm-diskb", OWNER="grid", GROUP="asmadmin", MODE="0660"

KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id --whitelisted --replace-whitespace --device=/dev/$name", RESULT=="14f504e46494c4552773758396e512d43624e7a2d78365164", NAME="asm-diskc", OWNER="grid", GROUP="asmadmin", MODE="0660"

KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id --whitelisted --replace-whitespace --device=/dev/$name", RESULT=="14f504e46494c45524f546e704b612d634e6d6b2d4274574c", NAME="asm-diskd", OWNER="grid", GROUP="asmadmin", MODE="0660"
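The escaped quotes in the loop are easy to get wrong. An equivalent rule line can be produced with printf and a single-quoted format string, which keeps `$name` literal for udev. This is a sketch only: `make_asm_rule` is a hypothetical helper, and the ID passed in the example is the one reported for sdb in the listing above.

```shell
#!/bin/bash
# make_asm_rule LETTER SCSI_ID : print one udev rule that names the disk
# whose scsi_id result equals SCSI_ID as /dev/asm-disk<LETTER>, owned by
# grid:asmadmin with mode 0660. The single-quoted format keeps $name
# unexpanded so that udev substitutes it at run time.
make_asm_rule() {
    printf 'KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id --whitelisted --replace-whitespace --device=/dev/$name", RESULT=="%s", NAME="asm-disk%s", OWNER="grid", GROUP="asmadmin", MODE="0660"\n' "$2" "$1"
}

# On a real node the ID would come from:
#   /sbin/scsi_id --whitelisted --replace-whitespace --device=/dev/sdb
make_asm_rule b 14f504e46494c4552756765754f6a2d594e48662d316f5937
```

The output can be appended to /etc/udev/rules.d/99-oracle-asmdevices.rules in place of the echo line in the loop.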

[root@rac1 ~]# start_udev

Starting udev: udevd[3303]: GOTO 'pulseaudio_check_usb' has no matching label in: '/lib/udev/rules.d/90-pulseaudio.rules'

[ OK ]

[root@rac1 ~]# ll /dev/asm-disk*

brw-rw---- 1 grid asmadmin 8, 16 Jul 10 19:37 /dev/asm-diskb

brw-rw---- 1 grid asmadmin 8, 32 Jul 10 19:37 /dev/asm-diskc

brw-rw---- 1 grid asmadmin 8, 48 Jul 10 19:37 /dev/asm-diskd

Copy 99-oracle-asmdevices.rules from node 1 to node 2.

[root@rac1 ~]# scp /etc/udev/rules.d/99-oracle-asmdevices.rules root@192.168.230.130:/etc/udev/rules.d/

root@192.168.230.130's password:

99-oracle-asmdevices.rules 100% 696 0.7KB/s 00:00

Restart udev on node 2.

[root@rac2 ~]# start_udev

Starting udev: udevd[3992]: GOTO 'pulseaudio_check_usb' has no matching label in: '/lib/udev/rules.d/90-pulseaudio.rules'

[ OK ]

[root@rac2 ~]# ll /dev/asm-disk*

brw-rw---- 1 grid asmadmin 8, 16 Jul 11 10:53 /dev/asm-diskb

brw-rw---- 1 grid asmadmin 8, 32 Jul 11 10:53 /dev/asm-diskc

brw-rw---- 1 grid asmadmin 8, 48 Jul 11 10:53 /dev/asm-diskd

Install the dependency packages with YUM on both nodes.

[root@rac1 ~]# yum install gcc compat-libstdc++-33 elfutils-libelf-devel gcc-c++ libaio-devel libstdc++-devel pdksh libcap.so.1 -y

[root@rac1 ~]# rpm -ivh /mnt/hgfs/data/oracle/software/pdksh-5.2.14-37.el5_8.1.x86_64.rpm

warning: /mnt/hgfs/data/oracle/software/pdksh-5.2.14-37.el5_8.1.x86_64.rpm: Header V3 DSA/SHA1 Signature, key ID e8562897: NOKEY

Preparing... ########################################### [100%]

1:pdksh ########################################### [100%]

[root@rac1 ~]# cd /lib64

[root@rac1 lib64]# ln -s libcap.so.2.16 libcap.so.1

[root@rac2 ~]# yum install gcc compat-libstdc++-33 elfutils-libelf-devel gcc-c++ libaio-devel libstdc++-devel pdksh libcap.so.1 -y

[root@rac2 ~]# rpm -ivh /mnt/hgfs/data/oracle/software/pdksh-5.2.14-37.el5_8.1.x86_64.rpm

warning: /mnt/hgfs/data/oracle/software/pdksh-5.2.14-37.el5_8.1.x86_64.rpm: Header V3 DSA/SHA1 Signature, key ID e8562897: NOKEY

Preparing... ########################################### [100%]

1:pdksh ########################################### [100%]

[root@rac2 ~]# cd /lib64

[root@rac2 lib64]# ln -s libcap.so.2.16 libcap.so.1

Disable the NTP service on both nodes.

[root@rac1 ~]# /etc/init.d/ntpd stop

Shutting down ntpd: [FAILED]

[root@rac1 ~]# chkconfig ntpd off

[root@rac1 ~]# mv /etc/ntp.conf /etc/ntp.conf.bak

[root@rac2 ~]# /etc/init.d/ntpd stop

Shutting down ntpd: [FAILED]

[root@rac2 ~]# chkconfig ntpd off

[root@rac2 ~]# mv /etc/ntp.conf /etc/ntp.conf.bak

Unpack the GI installation package and install cvuqdisk on both nodes.

Node 1:

[root@rac1 ~]# su - grid

[grid@rac1 ~]$ unzip /mnt/hgfs/data/oracle/software/11204/p13390677_112040_Linux-x86-64_3of7.zip

Switch to the root user to install the package.

[root@rac1 ~]# cd /home/grid/grid/rpm/

[root@rac1 rpm]# rpm -ivh cvuqdisk-1.0.9-1.rpm

Preparing... ########################################### [100%]

Using default group oinstall to install package

1:cvuqdisk ########################################### [100%]

Node 2:

[root@rac2 ~]# su - grid

[grid@rac2 ~]$ unzip /mnt/hgfs/data/oracle/software/11204/p13390677_112040_Linux-x86-64_3of7.zip

Switch to the root user to install the package.

[root@rac2 ~]# cd /home/grid/grid/rpm/

[root@rac2 rpm]# rpm -ivh cvuqdisk-1.0.9-1.rpm

Preparing... ########################################### [100%]

Using default group oinstall to install package

1:cvuqdisk ########################################### [100%]

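The check below was run after the GI installation had finished, because the 11g R2 installer can configure SSH equivalence (ssh between the nodes without a password prompt) automatically, which is more convenient than setting it up by hand beforehand.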

[root@rac1 ~]# su - grid

[grid@rac1 ~]$ cd grid/

[grid@rac1 grid]$ ./runcluvfy.sh stage -pre crsinst -n rac1,rac2 -fixup -verbose

Performing pre-checks for cluster services setup

Checking node reachability...

Check: Node reachability from node "rac1"

Destination Node Reachable?

------------------------------------ ------------------------

rac2 yes

rac1 yes

Result: Node reachability check passed from node "rac1"

Checking user equivalence...

Check: User equivalence for user "grid"

Node Name Status

------------------------------------ ------------------------

rac2 passed

rac1 passed

Result: User equivalence check passed for user "grid"

Checking node connectivity...

Checking hosts config file...

Node Name Status

------------------------------------ ------------------------

rac2 passed

rac1 passed

Verification of the hosts config file successful

Interface information for node "rac2"

Name IP Address Subnet Gateway Def. Gateway HW Address MTU

------ --------------- --------------- --------------- --------------- ----------------- ------

eth0 192.168.230.130 192.168.230.0 0.0.0.0 192.168.230.2 00:0C:29:23:53:1C 1500

eth0 192.168.230.140 192.168.230.0 0.0.0.0 192.168.230.2 00:0C:29:23:53:1C 1500

eth1 172.16.0.12 172.16.0.0 0.0.0.0 192.168.230.2 00:0C:29:23:53:26 1500

eth1 169.254.218.177 169.254.0.0 0.0.0.0 192.168.230.2 00:0C:29:23:53:26 1500

Interface information for node "rac1"

Name IP Address Subnet Gateway Def. Gateway HW Address MTU

------ --------------- --------------- --------------- --------------- ----------------- ------

eth0 192.168.230.129 192.168.230.0 0.0.0.0 192.168.230.2 00:0C:29:17:B8:04 1500

eth0 192.168.230.139 192.168.230.0 0.0.0.0 192.168.230.2 00:0C:29:17:B8:04 1500

eth0 192.168.230.150 192.168.230.0 0.0.0.0 192.168.230.2 00:0C:29:17:B8:04 1500

eth1 172.16.0.11 172.16.0.0 0.0.0.0 192.168.230.2 00:0C:29:17:B8:0E 1500

eth1 169.254.44.97 169.254.0.0 0.0.0.0 192.168.230.2 00:0C:29:17:B8:0E 1500

Check: Node connectivity for interface "eth0"

Source Destination Connected?

------------------------------ ------------------------------ ----------------

rac2[192.168.230.130] rac2[192.168.230.140] yes

rac2[192.168.230.130] rac1[192.168.230.129] yes

rac2[192.168.230.130] rac1[192.168.230.139] yes

rac2[192.168.230.130] rac1[192.168.230.150] yes

rac2[192.168.230.140] rac1[192.168.230.129] yes

rac2[192.168.230.140] rac1[192.168.230.139] yes

rac2[192.168.230.140] rac1[192.168.230.150] yes

rac1[192.168.230.129] rac1[192.168.230.139] yes

rac1[192.168.230.129] rac1[192.168.230.150] yes

rac1[192.168.230.139] rac1[192.168.230.150] yes

Result: Node connectivity passed for interface "eth0"

Check: TCP connectivity of subnet "192.168.230.0"

Source Destination Connected?

------------------------------ ------------------------------ ----------------

rac1:192.168.230.129 rac2:192.168.230.130 passed

rac1:192.168.230.129 rac2:192.168.230.140 passed

rac1:192.168.230.129 rac1:192.168.230.139 passed

rac1:192.168.230.129 rac1:192.168.230.150 passed

Result: TCP connectivity check passed for subnet "192.168.230.0"

Check: Node connectivity for interface "eth1"

Source Destination Connected?

------------------------------ ------------------------------ ----------------

rac2[172.16.0.12] rac1[172.16.0.11] yes

Result: Node connectivity passed for interface "eth1"

Check: TCP connectivity of subnet "172.16.0.0"

Source Destination Connected?

------------------------------ ------------------------------ ----------------

rac1:172.16.0.11 rac2:172.16.0.12 passed

Result: TCP connectivity check passed for subnet "172.16.0.0"

Checking subnet mask consistency...

Subnet mask consistency check passed for subnet "192.168.230.0".

Subnet mask consistency check passed for subnet "172.16.0.0".

Subnet mask consistency check passed.

Result: Node connectivity check passed

Checking multicast communication...

Checking subnet "192.168.230.0" for multicast communication with multicast group "230.0.1.0"...

Check of subnet "192.168.230.0" for multicast communication with multicast group "230.0.1.0" passed.

Checking subnet "172.16.0.0" for multicast communication with multicast group "230.0.1.0"...

Check of subnet "172.16.0.0" for multicast communication with multicast group "230.0.1.0" passed.

Check of multicast communication passed.

Checking ASMLib configuration.

Node Name Status

------------------------------------ ------------------------

rac2 passed

rac1 passed

Result: Check for ASMLib configuration passed.

Check: Total memory

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rac2 1.9517GB (2046528.0KB) 1.5GB (1572864.0KB) passed

rac1 1.9517GB (2046528.0KB) 1.5GB (1572864.0KB) passed

Result: Total memory check passed

Check: Available memory

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rac2 1.136GB (1191148.0KB) 50MB (51200.0KB) passed

rac1 787.3633MB (806260.0KB) 50MB (51200.0KB) passed

Result: Available memory check passed

Check: Swap space

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rac2 3.9062GB (4095996.0KB) 2.9276GB (3069792.0KB) passed

rac1 3.9062GB (4095996.0KB) 2.9276GB (3069792.0KB) passed

Result: Swap space check passed

Check: Free disk space for "rac2:/u01/app/11.2.0/grid,rac2:/tmp"

Path Node Name Mount point Available Required Status

---------------- ------------ ------------ ------------ ------------ ------------

/u01/app/11.2.0/grid rac2 / 25.3281GB 7.5GB passed

/tmp rac2 / 25.3281GB 7.5GB passed

Result: Free disk space check passed for "rac2:/u01/app/11.2.0/grid,rac2:/tmp"

Check: Free disk space for "rac1:/u01/app/11.2.0/grid,rac1:/tmp"

Path Node Name Mount point Available Required Status

---------------- ------------ ------------ ------------ ------------ ------------

/u01/app/11.2.0/grid rac1 / 22.2506GB 7.5GB passed

/tmp rac1 / 22.2506GB 7.5GB passed

Result: Free disk space check passed for "rac1:/u01/app/11.2.0/grid,rac1:/tmp"

Check: User existence for "grid"

Node Name Status Comment

------------ ------------------------ ------------------------

rac2 passed exists(1100)

rac1 passed exists(1100)

Checking for multiple users with UID value 1100

Result: Check for multiple users with UID value 1100 passed

Result: User existence check passed for "grid"

Check: Group existence for "oinstall"

Node Name Status Comment

------------ ------------------------ ------------------------

rac2 passed exists

rac1 passed exists

Result: Group existence check passed for "oinstall"

Check: Group existence for "dba"

Node Name Status Comment

------------ ------------------------ ------------------------

rac2 passed exists

rac1 passed exists

Result: Group existence check passed for "dba"

Check: Membership of user "grid" in group "oinstall" [as Primary]

Node Name User Exists Group Exists User in Group Primary Status

---------------- ------------ ------------ ------------ ------------ ------------

rac2 yes yes yes yes passed

rac1 yes yes yes yes passed

Result: Membership check for user "grid" in group "oinstall" [as Primary] passed

Check: Membership of user "grid" in group "dba"

Node Name User Exists Group Exists User in Group Status

---------------- ------------ ------------ ------------ ----------------

rac2 yes yes no failed

rac1 yes yes no failed

Result: Membership check for user "grid" in group "dba" failed

Check: Run level

Node Name run level Required Status

------------ ------------------------ ------------------------ ----------

rac2 5 3,5 passed

rac1 5 3,5 passed

Result: Run level check passed

Check: Hard limits for "maximum open file descriptors"

Node Name Type Available Required Status

---------------- ------------ ------------ ------------ ----------------

rac2 hard 65536 65536 passed

rac1 hard 65536 65536 passed

Result: Hard limits check passed for "maximum open file descriptors"

Check: Soft limits for "maximum open file descriptors"

Node Name Type Available Required Status

---------------- ------------ ------------ ------------ ----------------

rac2 soft 1024 1024 passed

rac1 soft 65536 1024 passed

Result: Soft limits check passed for "maximum open file descriptors"

Check: Hard limits for "maximum user processes"

Node Name Type Available Required Status

---------------- ------------ ------------ ------------ ----------------

rac2 hard 16384 16384 passed

rac1 hard 16384 16384 passed

Result: Hard limits check passed for "maximum user processes"

Check: Soft limits for "maximum user processes"

Node Name Type Available Required Status

---------------- ------------ ------------ ------------ ----------------

rac2 soft 2047 2047 passed

rac1 soft 2047 2047 passed

Result: Soft limits check passed for "maximum user processes"

Check: System architecture

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rac2 x86_64 x86_64 passed

rac1 x86_64 x86_64 passed

Result: System architecture check passed

Check: Kernel version

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rac2 2.6.32-504.el6.x86_64 2.6.9 passed

rac1 2.6.32-504.el6.x86_64 2.6.9 passed

Result: Kernel version check passed

Check: Kernel parameter for "semmsl"

Node Name Current Configured Required Status Comment

---------------- ------------ ------------ ------------ ------------ ------------

rac2 250 250 250 passed

rac1 250 250 250 passed

Result: Kernel parameter check passed for "semmsl"

Check: Kernel parameter for "semmns"

Node Name Current Configured Required Status Comment

---------------- ------------ ------------ ------------ ------------ ------------

rac2 32000 32000 32000 passed

rac1 32000 32000 32000 passed

Result: Kernel parameter check passed for "semmns"

Check: Kernel parameter for "semopm"

Node Name Current Configured Required Status Comment

---------------- ------------ ------------ ------------ ------------ ------------

rac2 100 100 100 passed

rac1 100 100 100 passed

Result: Kernel parameter check passed for "semopm"

Check: Kernel parameter for "semmni"

Node Name Current Configured Required Status Comment

---------------- ------------ ------------ ------------ ------------ ------------

rac2 128 128 128 passed

rac1 128 128 128 passed

Result: Kernel parameter check passed for "semmni"

Check: Kernel parameter for "shmmax"

Node Name Current Configured Required Status Comment

---------------- ------------ ------------ ------------ ------------ ------------

rac2 68719476736 68719476736 1047822336 passed

rac1 68719476736 68719476736 1047822336 passed

Result: Kernel parameter check passed for "shmmax"

Check: Kernel parameter for "shmmni"

Node Name Current Configured Required Status Comment

---------------- ------------ ------------ ------------ ------------ ------------

rac2 4096 4096 4096 passed

rac1 4096 4096 4096 passed

Result: Kernel parameter check passed for "shmmni"

Check: Kernel parameter for "shmall"

Node Name Current Configured Required Status Comment

---------------- ------------ ------------ ------------ ------------ ------------

rac2 4294967296 4294967296 2097152 passed

rac1 4294967296 4294967296 2097152 passed

Result: Kernel parameter check passed for "shmall"

Check: Kernel parameter for "file-max"

Node Name Current Configured Required Status Comment

---------------- ------------ ------------ ------------ ------------ ------------

rac2 6815744 6815744 6815744 passed

rac1 6815744 6815744 6815744 passed

Result: Kernel parameter check passed for "file-max"

Check: Kernel parameter for "ip_local_port_range"

Node Name Current Configured Required Status Comment

---------------- ------------ ------------ ------------ ------------ ------------

rac2 between 9000.0 & 65500.0 between 9000.0 & 65500.0 between 9000.0 & 65500.0 passed

rac1 between 9000.0 & 65500.0 between 9000.0 & 65500.0 between 9000.0 & 65500.0 passed

Result: Kernel parameter check passed for "ip_local_port_range"

Check: Kernel parameter for "rmem_default"

Node Name Current Configured Required Status Comment

---------------- ------------ ------------ ------------ ------------ ------------

rac2 262144 262144 262144 passed

rac1 262144 262144 262144 passed

Result: Kernel parameter check passed for "rmem_default"

Check: Kernel parameter for "rmem_max"

Node Name Current Configured Required Status Comment

---------------- ------------ ------------ ------------ ------------ ------------

rac2 4194304 4194304 4194304 passed

rac1 4194304 4194304 4194304 passed

Result: Kernel parameter check passed for "rmem_max"

Check: Kernel parameter for "wmem_default"

Node Name Current Configured Required Status Comment

---------------- ------------ ------------ ------------ ------------ ------------

rac2 262144 262144 262144 passed

rac1 262144 262144 262144 passed

Result: Kernel parameter check passed for "wmem_default"

Check: Kernel parameter for "wmem_max"

Node Name Current Configured Required Status Comment

---------------- ------------ ------------ ------------ ------------ ------------

rac2 1048576 1048576 1048576 passed

rac1 1048576 1048576 1048576 passed

Result: Kernel parameter check passed for "wmem_max"

Check: Kernel parameter for "aio-max-nr"

Node Name Current Configured Required Status Comment

---------------- ------------ ------------ ------------ ------------ ------------

rac2 1048576 1048576 1048576 passed

rac1 1048576 1048576 1048576 passed

Result: Kernel parameter check passed for "aio-max-nr"

Check: Package existence for "make"

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rac2 make-3.81-20.el6 make-3.80 passed

rac1 make-3.81-20.el6 make-3.80 passed

Result: Package existence check passed for "make"

Check: Package existence for "binutils"

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rac2 binutils-2.20.51.0.2-5.42.el6 binutils-2.15.92.0.2 passed

rac1 binutils-2.20.51.0.2-5.42.el6 binutils-2.15.92.0.2 passed

Result: Package existence check passed for "binutils"

Check: Package existence for "gcc(x86_64)"

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rac2 gcc(x86_64)-4.4.7-11.el6 gcc(x86_64)-3.4.6 passed

rac1 gcc(x86_64)-4.4.7-11.el6 gcc(x86_64)-3.4.6 passed

Result: Package existence check passed for "gcc(x86_64)"

Check: Package existence for "libaio(x86_64)"

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rac2 libaio(x86_64)-0.3.107-10.el6 libaio(x86_64)-0.3.105 passed

rac1 libaio(x86_64)-0.3.107-10.el6 libaio(x86_64)-0.3.105 passed

Result: Package existence check passed for "libaio(x86_64)"

Check: Package existence for "glibc(x86_64)"

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rac2 glibc(x86_64)-2.12-1.149.el6 glibc(x86_64)-2.3.4-2.41 passed

rac1 glibc(x86_64)-2.12-1.149.el6 glibc(x86_64)-2.3.4-2.41 passed

Result: Package existence check passed for "glibc(x86_64)"

Check: Package existence for "compat-libstdc++-33(x86_64)"

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rac2 compat-libstdc++-33(x86_64)-3.2.3-69.el6 compat-libstdc++-33(x86_64)-3.2.3 passed

rac1 compat-libstdc++-33(x86_64)-3.2.3-69.el6 compat-libstdc++-33(x86_64)-3.2.3 passed

Result: Package existence check passed for "compat-libstdc++-33(x86_64)"

Check: Package existence for "elfutils-libelf(x86_64)"

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rac2 elfutils-libelf(x86_64)-0.158-3.2.el6 elfutils-libelf(x86_64)-0.97 passed

rac1 elfutils-libelf(x86_64)-0.158-3.2.el6 elfutils-libelf(x86_64)-0.97 passed

Result: Package existence check passed for "elfutils-libelf(x86_64)"

Check: Package existence for "elfutils-libelf-devel"

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rac2 elfutils-libelf-devel-0.158-3.2.el6 elfutils-libelf-devel-0.97 passed

rac1 elfutils-libelf-devel-0.158-3.2.el6 elfutils-libelf-devel-0.97 passed

Result: Package existence check passed for "elfutils-libelf-devel"

Check: Package existence for "glibc-common"

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rac2 glibc-common-2.12-1.149.el6 glibc-common-2.3.4 passed

rac1 glibc-common-2.12-1.149.el6 glibc-common-2.3.4 passed

Result: Package existence check passed for "glibc-common"

Check: Package existence for "glibc-devel(x86_64)"

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rac2 glibc-devel(x86_64)-2.12-1.149.el6 glibc-devel(x86_64)-2.3.4 passed

rac1 glibc-devel(x86_64)-2.12-1.149.el6 glibc-devel(x86_64)-2.3.4 passed

Result: Package existence check passed for "glibc-devel(x86_64)"

Check: Package existence for "glibc-headers"

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rac2 glibc-headers-2.12-1.149.el6 glibc-headers-2.3.4 passed

rac1 glibc-headers-2.12-1.149.el6 glibc-headers-2.3.4 passed

Result: Package existence check passed for "glibc-headers"

Check: Package existence for "gcc-c++(x86_64)"

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rac2 gcc-c++(x86_64)-4.4.7-11.el6 gcc-c++(x86_64)-3.4.6 passed

rac1 gcc-c++(x86_64)-4.4.7-11.el6 gcc-c++(x86_64)-3.4.6 passed

Result: Package existence check passed for "gcc-c++(x86_64)"

Check: Package existence for "libaio-devel(x86_64)"

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rac2 libaio-devel(x86_64)-0.3.107-10.el6 libaio-devel(x86_64)-0.3.105 passed

rac1 libaio-devel(x86_64)-0.3.107-10.el6 libaio-devel(x86_64)-0.3.105 passed

Result: Package existence check passed for "libaio-devel(x86_64)"

Check: Package existence for "libgcc(x86_64)"

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rac2 libgcc(x86_64)-4.4.7-11.el6 libgcc(x86_64)-3.4.6 passed

rac1 libgcc(x86_64)-4.4.7-11.el6 libgcc(x86_64)-3.4.6 passed

Result: Package existence check passed for "libgcc(x86_64)"

Check: Package existence for "libstdc++(x86_64)"

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rac2 libstdc++(x86_64)-4.4.7-11.el6 libstdc++(x86_64)-3.4.6 passed

rac1 libstdc++(x86_64)-4.4.7-11.el6 libstdc++(x86_64)-3.4.6 passed

Result: Package existence check passed for "libstdc++(x86_64)"

Check: Package existence for "libstdc++-devel(x86_64)"

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rac2 libstdc++-devel(x86_64)-4.4.7-11.el6 libstdc++-devel(x86_64)-3.4.6 passed

rac1 libstdc++-devel(x86_64)-4.4.7-11.el6 libstdc++-devel(x86_64)-3.4.6 passed

Result: Package existence check passed for "libstdc++-devel(x86_64)"

Check: Package existence for "sysstat"

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rac2 sysstat-9.0.4-27.el6 sysstat-5.0.5 passed

rac1 sysstat-9.0.4-27.el6 sysstat-5.0.5 passed

Result: Package existence check passed for "sysstat"

Check: Package existence for "pdksh"

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rac2 pdksh-5.2.14-37.el5_8.1 pdksh-5.2.14 passed

rac1 pdksh-5.2.14-37.el5_8.1 pdksh-5.2.14 passed

Result: Package existence check passed for "pdksh"

Check: Package existence for "expat(x86_64)"

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rac2 expat(x86_64)-2.0.1-11.el6_2 expat(x86_64)-1.95.7 passed

rac1 expat(x86_64)-2.0.1-11.el6_2 expat(x86_64)-1.95.7 passed

Result: Package existence check passed for "expat(x86_64)"

Checking for multiple users with UID value 0

Result: Check for multiple users with UID value 0 passed

Check: Current group ID

Result: Current group ID check passed

Starting check for consistency of primary group of root user

Node Name Status

------------------------------------ ------------------------

rac2 passed

rac1 passed

Check for consistency of root user's primary group passed

Starting Clock synchronization checks using Network Time Protocol(NTP)...

NTP Configuration file check started...

Network Time Protocol(NTP) configuration file not found on any of the nodes. Oracle Cluster Time Synchronization Service(CTSS) can be used instead of NTP for time synchronization on the cluster nodes

No NTP Daemons or Services were found to be running

Result: Clock synchronization check using Network Time Protocol(NTP) passed

Checking Core file name pattern consistency...

Core file name pattern consistency check passed.

Checking to make sure user "grid" is not in "root" group

Node Name Status Comment

------------ ------------------------ ------------------------

rac2 passed does not exist

rac1 passed does not exist

Result: User "grid" is not part of "root" group. Check passed

Check default user file creation mask

Node Name Available Required Comment

------------ ------------------------ ------------------------ ----------

rac2 0022 0022 passed

rac1 0022 0022 passed

Result: Default user file creation mask check passed

Checking consistency of file "/etc/resolv.conf" across nodes

Checking the file "/etc/resolv.conf" to make sure only one of domain and search entries is defined

File "/etc/resolv.conf" does not have both domain and search entries defined

Checking if domain entry in file "/etc/resolv.conf" is consistent across the nodes...

domain entry in file "/etc/resolv.conf" is consistent across nodes

Checking if search entry in file "/etc/resolv.conf" is consistent across the nodes...

search entry in file "/etc/resolv.conf" is consistent across nodes

Checking file "/etc/resolv.conf" to make sure that only one search entry is defined

All nodes have one search entry defined in file "/etc/resolv.conf"

Checking all nodes to make sure that search entry is "localdomain" as found on node "rac2"

All nodes of the cluster have same value for 'search'

Checking DNS response time for an unreachable node

Node Name Status

------------------------------------ ------------------------

rac2 passed

rac1 passed

The DNS response time for an unreachable node is within acceptable limit on all nodes

File "/etc/resolv.conf" is consistent across nodes

Check: Time zone consistency

Result: Time zone consistency check passed

Fixup information has been generated for following node(s):

rac2,rac1

Please run the following script on each node as "root" user to execute the fixups:

'/tmp/CVU_11.2.0.4.0_grid/runfixup.sh'

Pre-check for cluster services setup was unsuccessful on all the nodes.
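The pre-check report runs to hundreds of lines, so it can help to save it to a file and list only the entries that did not pass. The following is a minimal sketch; the function name `cvu_failures` and the log file name are hypothetical, and it assumes that problem lines contain the word "failed" or "unsuccessful".

```shell
# Print only the lines of a saved CVU report that signal a problem.
# Reads the report on stdin and matches "failed" or "unsuccessful"
# as whole words, case-insensitively.
cvu_failures() {
    grep -i -w -E 'failed|unsuccessful'
}

# Example (hypothetical file name):
#   cvu_failures < /tmp/cluvfy_pre_crsinst.log
```

For the report above, this would surface the final "unsuccessful" summary line along with any individual failed checks.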

Start Xmanager - Passive on the host machine (IP: 192.168.230.1), then set the DISPLAY environment variable in the virtual machine.

[grid@rac1 ~]$ export DISPLAY=192.168.230.1:0.0

[grid@rac1 ~]$ cd grid/

[grid@rac1 grid]$ ./runInstaller
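The graphical installer cannot start if DISPLAY is unset or malformed. As a rough sketch, the format can be checked before launching it; the function name `display_looks_valid` is hypothetical. It only checks the remote `host:display[.screen]` form used here and does not contact the X server.

```shell
# Succeed if the argument looks like host:display[.screen],
# for example 192.168.230.1:0.0. This is a format check only.
display_looks_valid() {
    printf '%s\n' "$1" | grep -q -E '^[^:]+:[0-9]+(\.[0-9]+)?$'
}

# Example:
#   display_looks_valid "$DISPLAY" || echo "DISPLAY is not set to host:display" >&2
```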

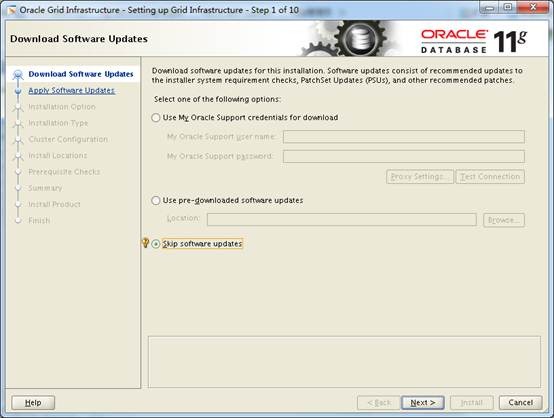

Skip software updates.

Select the option to install for a cluster.

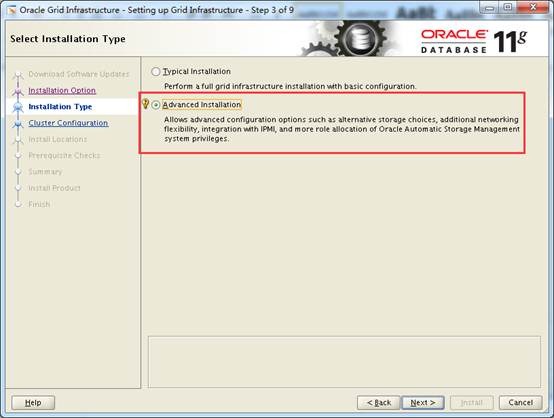

Select Advanced Installation.

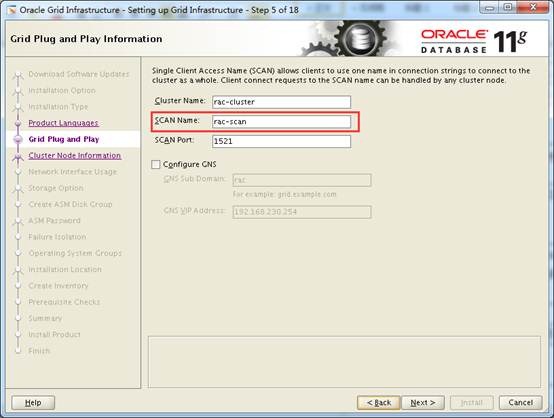

Change the SCAN Name.

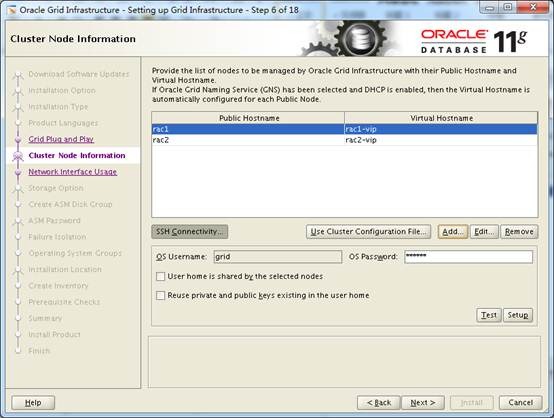

Click Add to add a node.

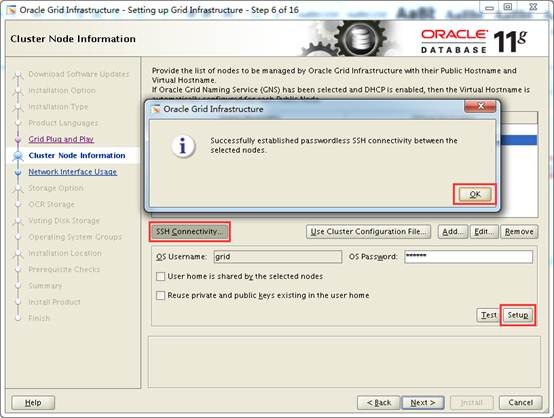

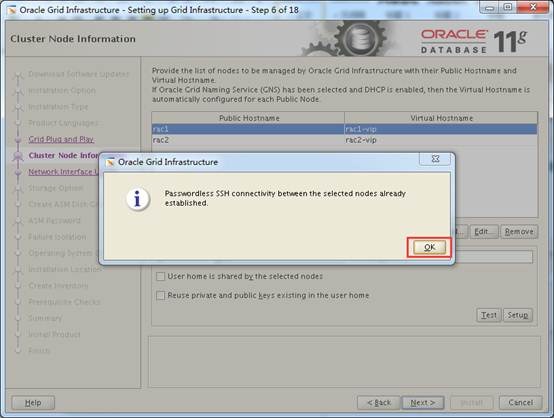

Configure SSH equivalence.

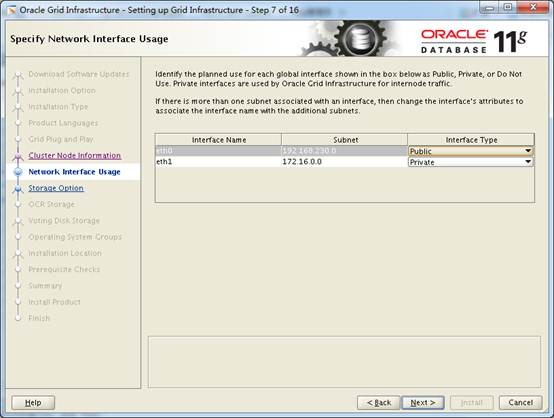

The installer automatically detects the networks configured earlier.

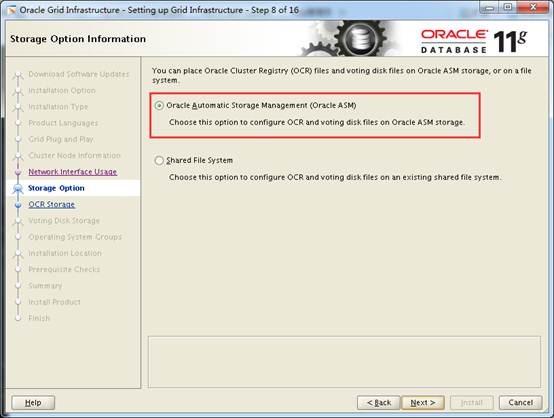

Select ASM.

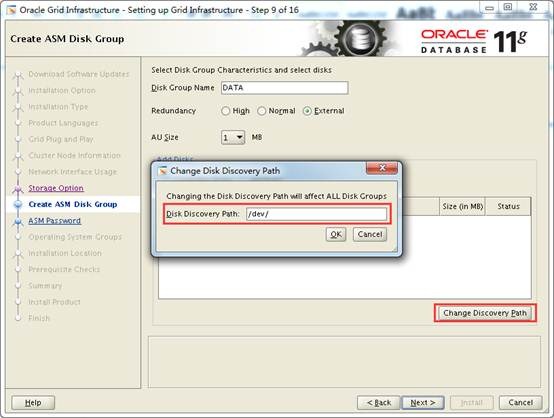

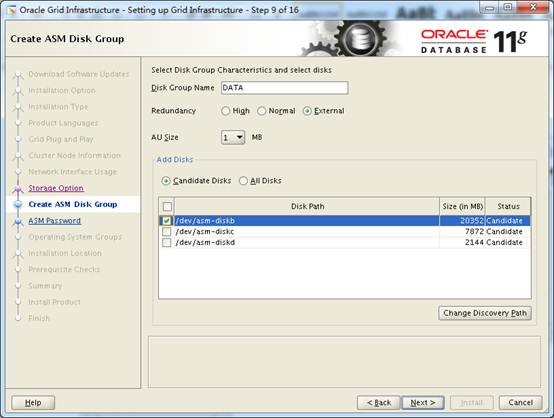

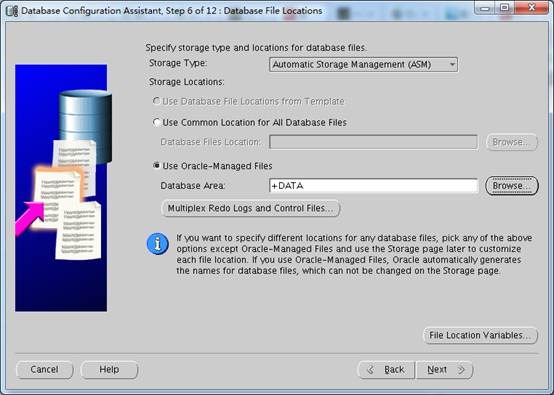

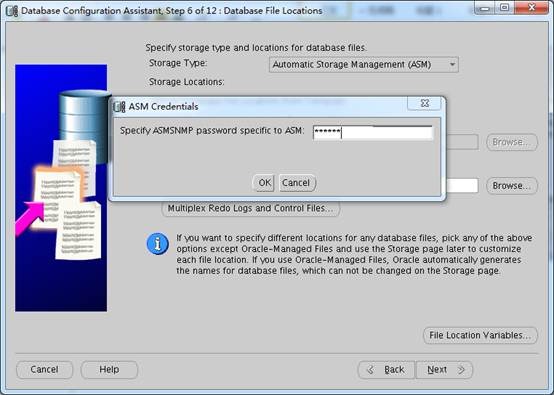

Select the disk discovery path and create a disk group. Only one disk group can be created at this step.

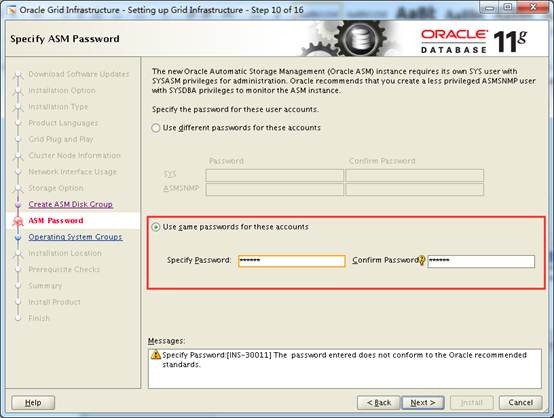

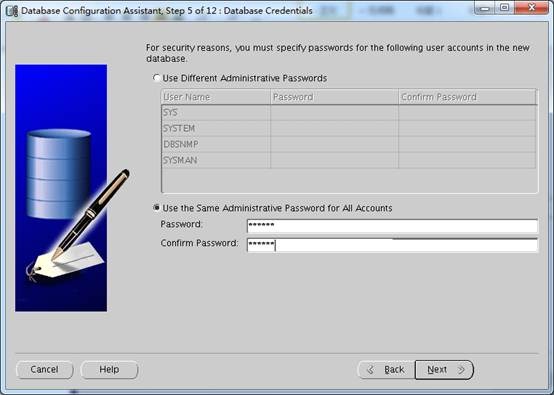

Set the passwords.

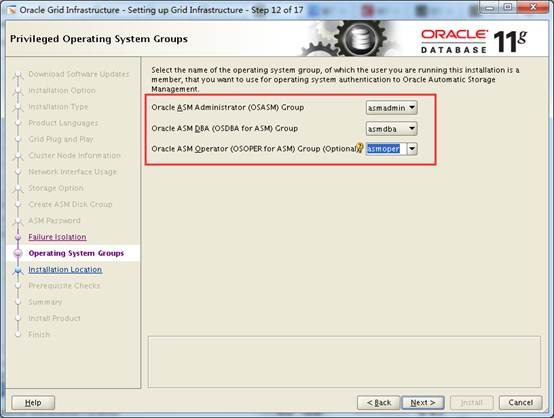

Select the administrative groups.

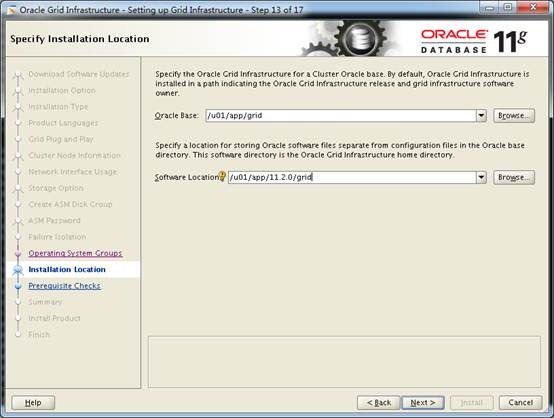

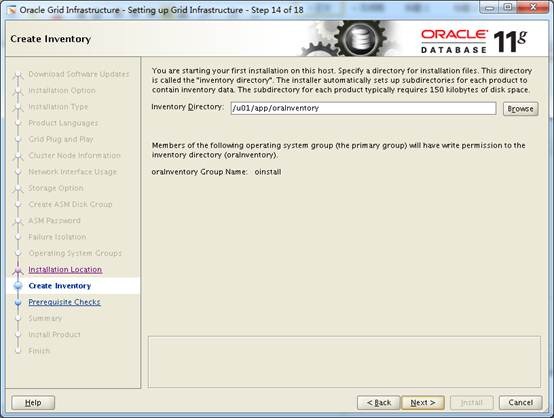

Specify the installation paths.

Specify the inventory directory.

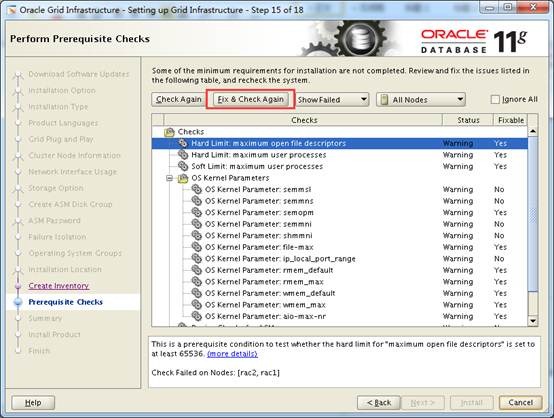

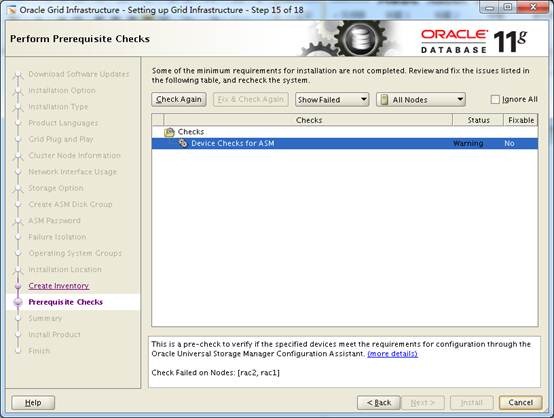

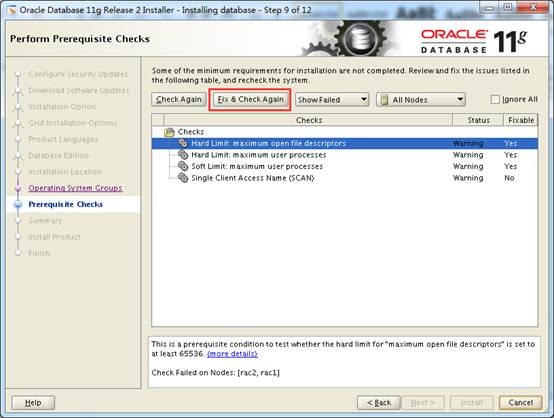

After the prerequisite check, the failed items are listed. Click Fix & Check Again to fix them and rerun the check.

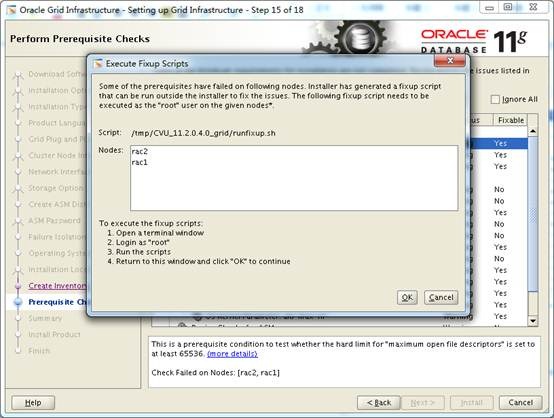

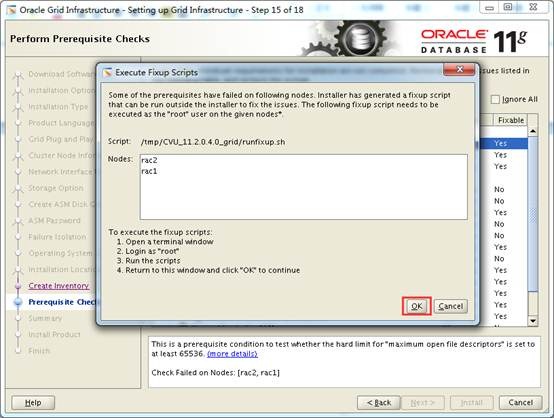

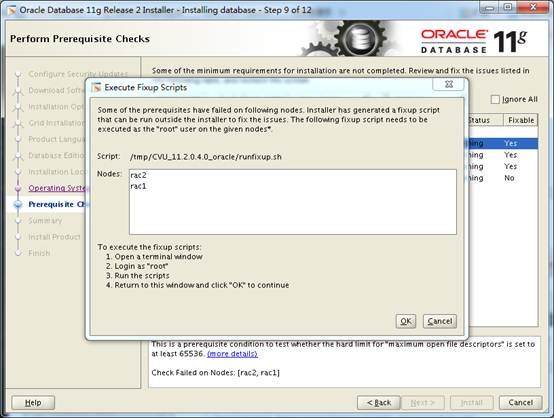

The installer generates a fixup script.

Run the script on each node to apply the fixes.

[root@rac1 ~]# /tmp/CVU_11.2.0.4.0_grid/runfixup.sh

Response file being used is :/tmp/CVU_11.2.0.4.0_grid/fixup.response

Enable file being used is :/tmp/CVU_11.2.0.4.0_grid/fixup.enable

Log file location: /tmp/CVU_11.2.0.4.0_grid/orarun.log

Setting Kernel Parameters...

The value for shmmni in response file is not greater than value of shmmni for current session. Hence not changing it.

The value for semmsl in response file is not greater than value of semmsl for current session. Hence not changing it.

The value for semmns in response file is not greater than value of semmns for current session. Hence not changing it.

The value for semmni in response file is not greater than value of semmni for current session. Hence not changing it.

kernel.sem = 250 32000 100 128

fs.file-max = 6815744

net.ipv4.ip_local_port_range = 9000 65500

net.core.rmem_default = 262144

net.core.wmem_default = 262144

net.core.rmem_max = 4194304

net.core.wmem_max = 1048576

fs.aio-max-nr = 1048576

uid=1100(grid) gid=6000(oinstall) groups=6000(oinstall),5000(asmadmin),5001(asmdba),5002(asmoper)

[root@rac2 ~]# /tmp/CVU_11.2.0.4.0_grid/runfixup.sh

Response file being used is :/tmp/CVU_11.2.0.4.0_grid/fixup.response

Enable file being used is :/tmp/CVU_11.2.0.4.0_grid/fixup.enable

Log file location: /tmp/CVU_11.2.0.4.0_grid/orarun.log

Setting Kernel Parameters...

The value for shmmni in response file is not greater than value of shmmni for current session. Hence not changing it.

The value for semmsl in response file is not greater than value of semmsl for current session. Hence not changing it.

The value for semmns in response file is not greater than value of semmns for current session. Hence not changing it.

The value for semmni in response file is not greater than value of semmni for current session. Hence not changing it.

kernel.sem = 250 32000 100 128

fs.file-max = 6815744

net.ipv4.ip_local_port_range = 9000 65500

net.core.rmem_default = 262144

net.core.wmem_default = 262144

net.core.rmem_max = 4194304

net.core.wmem_max = 1048576

fs.aio-max-nr = 1048576

uid=1100(grid) gid=6000(oinstall) groups=6000(oinstall),5000(asmadmin),5001(asmdba),5002(asmoper)
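After the fixup script has run, the live kernel values can be compared with the required minimums. This is a minimal sketch: `meets_minimum` is a hypothetical helper, and the thresholds in the commented usage lines are the values printed by the fixup script above.

```shell
# Succeed if a numeric current value is at least the required minimum.
#   $1 = current value, $2 = required minimum
meets_minimum() {
    [ "$1" -ge "$2" ]
}

# Example on a live node (run on both rac1 and rac2):
#   meets_minimum "$(sysctl -n fs.file-max)" 6815744 && echo "fs.file-max ok"
#   meets_minimum "$(sysctl -n fs.aio-max-nr)" 1048576 && echo "fs.aio-max-nr ok"
#   meets_minimum "$(sysctl -n net.core.rmem_max)" 4194304 && echo "rmem_max ok"
```

Multi-valued parameters such as `kernel.sem` would need each field compared separately, which this helper does not attempt.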

When the script has finished on both nodes, click OK and the installer runs the checks again.

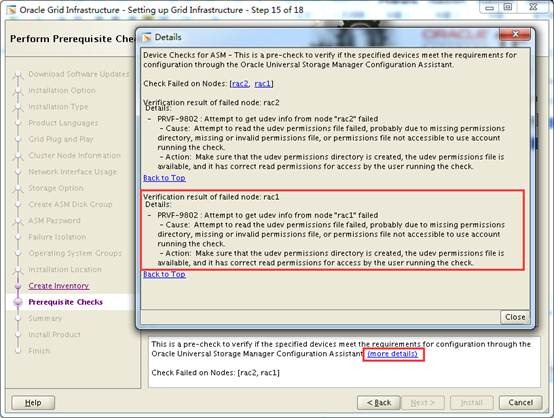

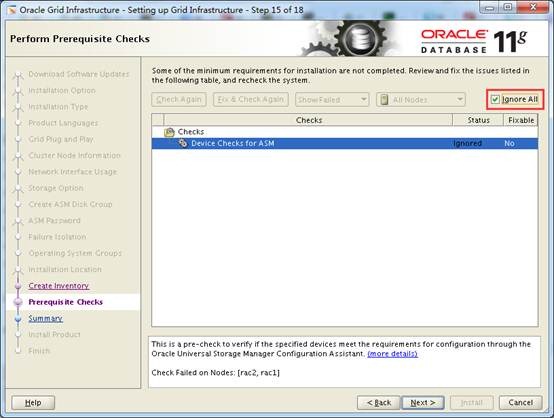

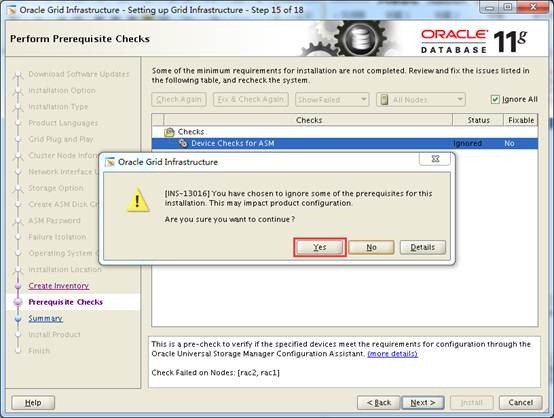

Because Oracle's ASMLib driver is not used, an ASM Check warning appears. As long as the ASM disk group was recognized in the earlier step, this warning can be ignored.

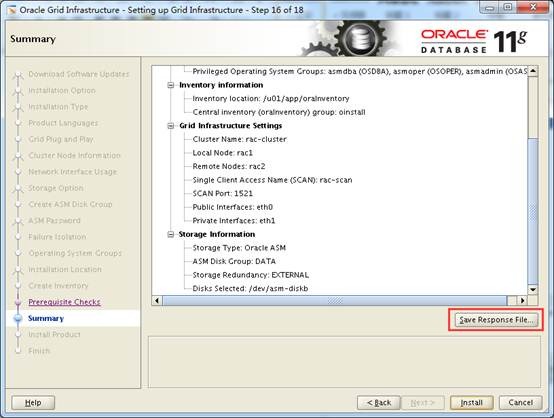

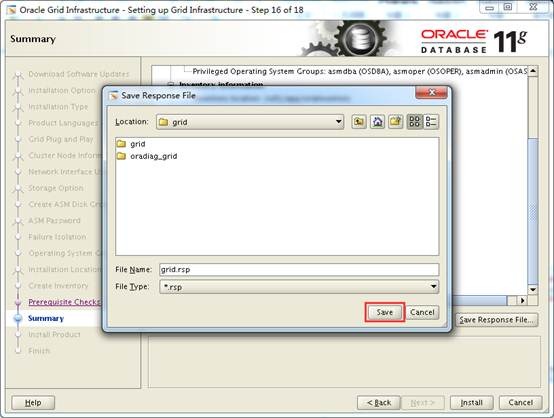

Save the response file.

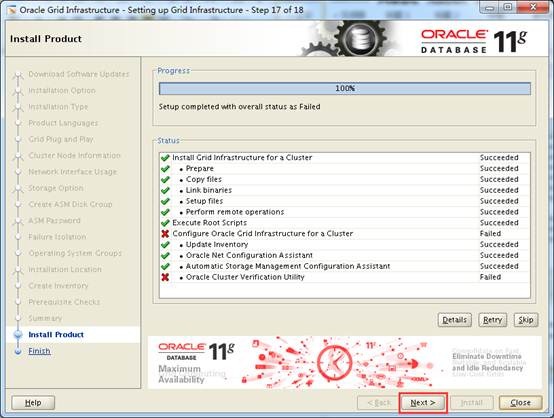

Start the installation.

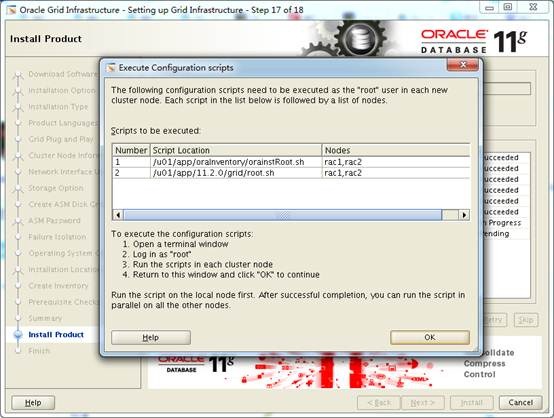

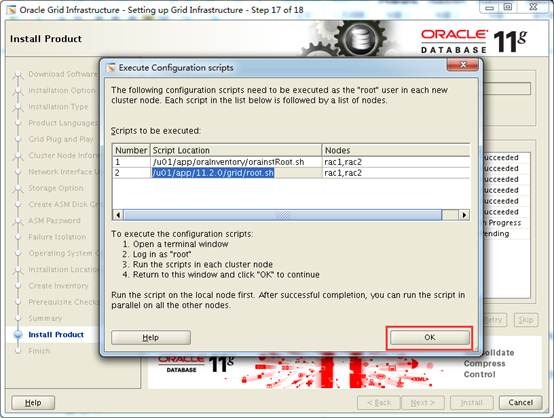

When prompted to run the scripts, run them in the order shown.

[root@rac1 ~]# /u01/app/oraInventory/orainstRoot.sh

Changing permissions of /u01/app/oraInventory.

Adding read,write permissions for group.

Removing read,write,execute permissions for world.

Changing groupname of /u01/app/oraInventory to oinstall.

The execution of the script is complete.

[root@rac1 ~]# /u01/app/11.2.0/grid/root.sh

Performing root user operation for Oracle 11g

The following environment variables are set as:

ORACLE_OWNER= grid

ORACLE_HOME= /u01/app/11.2.0/grid

Enter the full pathname of the local bin directory: [/usr/local/bin]:

Copying dbhome to /usr/local/bin ...

Copying oraenv to /usr/local/bin ...

Copying coraenv to /usr/local/bin ...

Creating /etc/oratab file...

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

Using configuration parameter file: /u01/app/11.2.0/grid/crs/install/crsconfig_params

Creating trace directory

User ignored Prerequisites during installation

Installing Trace File Analyzer

Failed to create keys in the OLR, rc = 127, Message:

/u01/app/11.2.0/grid/bin/clscfg.bin: error while loading shared libraries: libcap.so.1: cannot open shared object file: No such file or directory

Failed to create keys in the OLR at /u01/app/11.2.0/grid/crs/install/crsconfig_lib.pm line 7660.

/u01/app/11.2.0/grid/perl/bin/perl -I/u01/app/11.2.0/grid/perl/lib -I/u01/app/11.2.0/grid/crs/install /u01/app/11.2.0/grid/crs/install/rootcrs.pl execution failed

[root@rac1 ~]# yum install libcap.so.1

[root@rac1 ~]# cd /lib64

[root@rac1 lib64]# ln -s libcap.so.2.16 libcap.so.1
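Before rerunning root.sh, it can be useful to confirm that the binary no longer has unresolved shared libraries. A minimal sketch, assuming the usual `ldd` output format in which a missing library is reported as `name => not found`; the function name `missing_libs` is hypothetical.

```shell
# Read `ldd` output on stdin and print the name of every library
# that the loader reports as "not found".
missing_libs() {
    awk '/=> not found/ { print $1 }'
}

# Example (binary path taken from the error message above):
#   ldd /u01/app/11.2.0/grid/bin/clscfg.bin | missing_libs
```

An empty result suggests that every dependency resolves, including libcap.so.1.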

[root@rac1 ~]# /u01/app/11.2.0/grid/crs/install/rootcrs.pl -verbose -deconfig -force

Using configuration parameter file: /u01/app/11.2.0/grid/crs/install/crsconfig_params

PRCR-1119 : Failed to look up CRS resources of ora.cluster_vip_net1.type type

PRCR-1068 : Failed to query resources

Cannot communicate with crsd

PRCR-1070 : Failed to check if resource ora.gsd is registered

Cannot communicate with crsd

PRCR-1070 : Failed to check if resource ora.ons is registered

Cannot communicate with crsd

/u01/app/11.2.0/grid/bin/crsctl.bin: error while loading shared libraries: libcap.so.1: cannot open shared object file: No such file or directory

/u01/app/11.2.0/grid/bin/crsctl.bin: error while loading shared libraries: libcap.so.1: cannot open shared object file: No such file or directory

################################################################

# You must kill processes or reboot the system to properly #

# cleanup the processes started by Oracle clusterware #

################################################################

ACFS-9313: No ADVM/ACFS installation detected.

Removing Trace File Analyzer

Failure in execution (rc=-1, 256, No such file or directory) for command /etc/init.d/ohasd deinstall

Successfully deconfigured Oracle clusterware stack on this node

[root@rac1 ~]# /u01/app/11.2.0/grid/root.sh

Performing root user operation for Oracle 11g

The following environment variables are set as:

ORACLE_OWNER= grid

ORACLE_HOME= /u01/app/11.2.0/grid

Enter the full pathname of the local bin directory: [/usr/local/bin]:

The contents of "dbhome" have not changed. No need to overwrite.

The contents of "oraenv" have not changed. No need to overwrite.

The contents of "coraenv" have not changed. No need to overwrite.

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

Using configuration parameter file: /u01/app/11.2.0/grid/crs/install/crsconfig_params

User ignored Prerequisites during installation

Installing Trace File Analyzer

OLR initialization - successful

root wallet

root wallet cert

root cert export

peer wallet

profile reader wallet

pa wallet

peer wallet keys

pa wallet keys

peer cert request

pa cert request

peer cert

pa cert

peer root cert TP

profile reader root cert TP

pa root cert TP

peer pa cert TP

pa peer cert TP

profile reader pa cert TP

profile reader peer cert TP

peer user cert

pa user cert

Adding Clusterware entries to upstart

CRS-2672: Attempting to start 'ora.mdnsd' on 'rac1'

CRS-2676: Start of 'ora.mdnsd' on 'rac1' succeeded

CRS-2672: Attempting to start 'ora.gpnpd' on 'rac1'

CRS-2676: Start of 'ora.gpnpd' on 'rac1' succeeded

CRS-2672: Attempting to start 'ora.cssdmonitor' on 'rac1'

CRS-2672: Attempting to start 'ora.gipcd' on 'rac1'

CRS-2676: Start of 'ora.cssdmonitor' on 'rac1' succeeded

CRS-2676: Start of 'ora.gipcd' on 'rac1' succeeded

CRS-2672: Attempting to start 'ora.cssd' on 'rac1'

CRS-2672: Attempting to start 'ora.diskmon' on 'rac1'

CRS-2676: Start of 'ora.diskmon' on 'rac1' succeeded

CRS-2676: Start of 'ora.cssd' on 'rac1' succeeded

ASM created and started successfully.

Disk Group DATA created successfully.

clscfg: -install mode specified

Successfully accumulated necessary OCR keys.

Creating OCR keys for user 'root', privgrp 'root'..

Operation successful.

CRS-4256: Updating the profile

Successful addition of voting disk 928c8db2cb4f4f0abf52bf43726856e0.

Successfully replaced voting disk group with +DATA.

CRS-4256: Updating the profile

CRS-4266: Voting file(s) successfully replaced

## STATE File Universal Id File Name Disk group

-- ----- ----------------- --------- ---------

1. ONLINE 928c8db2cb4f4f0abf52bf43726856e0 (/dev/asm-diskb) [DATA]

Located 1 voting disk(s).

CRS-2672: Attempting to start 'ora.asm' on 'rac1'

CRS-2676: Start of 'ora.asm' on 'rac1' succeeded

CRS-2672: Attempting to start 'ora.DATA.dg' on 'rac1'

CRS-2676: Start of 'ora.DATA.dg' on 'rac1' succeeded

Preparing packages for installation...

cvuqdisk-1.0.9-1

Configure Oracle Grid Infrastructure for a Cluster ... succeeded

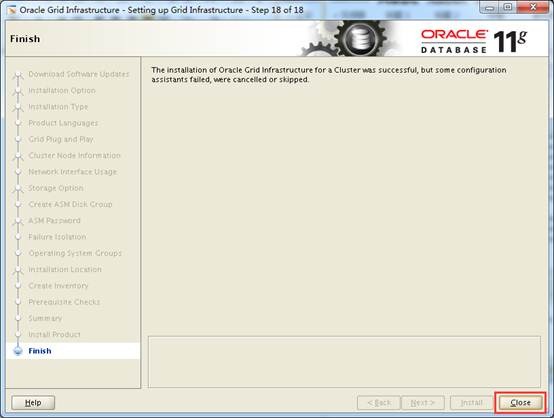

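When the scripts have finished, click OK.

Ignore the final verification error. It occurs because the SCAN address is configured in /etc/hosts. Try pinging that address; if the ping succeeds, the error can be ignored.

Check the following on each of the two nodes: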

(1) Network interfaces

[grid@rac1 grid]$ ifconfig

eth0 Link encap:Ethernet HWaddr 00:0C:29:17:B8:04

inet addr:192.168.230.129 Bcast:192.168.230.255 Mask:255.255.255.0

inet6 addr: fe80::20c:29ff:fe17:b804/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:1654622 errors:0 dropped:0 overruns:0 frame:0

TX packets:3893712 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:308907725 (294.5 MiB) TX bytes:5036569325 (4.6 GiB)

eth0:1 Link encap:Ethernet HWaddr 00:0C:29:17:B8:04

inet addr:192.168.230.139 Bcast:192.168.230.255 Mask:255.255.255.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

eth0:2 Link encap:Ethernet HWaddr 00:0C:29:17:B8:04

inet addr:192.168.230.150 Bcast:192.168.230.255 Mask:255.255.255.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

eth1 Link encap:Ethernet HWaddr 00:0C:29:17:B8:0E

inet addr:172.16.0.11 Bcast:172.16.0.255 Mask:255.255.255.0

inet6 addr: fe80::20c:29ff:fe17:b80e/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:67682 errors:0 dropped:0 overruns:0 frame:0

TX packets:44173 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:31299407 (29.8 MiB) TX bytes:24363245 (23.2 MiB)

eth1:1 Link encap:Ethernet HWaddr 00:0C:29:17:B8:0E

inet addr:169.254.44.97 Bcast:169.254.255.255 Mask:255.255.0.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:65536 Metric:1

RX packets:31268 errors:0 dropped:0 overruns:0 frame:0

TX packets:31268 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:27184931 (25.9 MiB) TX bytes:27184931 (25.9 MiB)

(2) Background processes

[grid@rac1 grid]$ ps -ef|egrep -i "asm|listener"

grid 42014 1 0 19:51 ? 00:00:00 asm_pmon_+ASM1

grid 42016 1 0 19:51 ? 00:00:00 asm_psp0_+ASM1

grid 42018 1 1 19:51 ? 00:01:11 asm_vktm_+ASM1

grid 42022 1 0 19:51 ? 00:00:00 asm_gen0_+ASM1

grid 42024 1 0 19:51 ? 00:00:03 asm_diag_+ASM1

grid 42026 1 0 19:51 ? 00:00:00 asm_ping_+ASM1

grid 42028 1 0 19:51 ? 00:00:06 asm_dia0_+ASM1

grid 42030 1 0 19:51 ? 00:00:08 asm_lmon_+ASM1

grid 42032 1 0 19:51 ? 00:00:05 asm_lmd0_+ASM1

grid 42034 1 0 19:51 ? 00:00:13 asm_lms0_+ASM1

grid 42038 1 0 19:51 ? 00:00:00 asm_lmhb_+ASM1

grid 42040 1 0 19:51 ? 00:00:00 asm_mman_+ASM1

grid 42042 1 0 19:51 ? 00:00:00 asm_dbw0_+ASM1

grid 42044 1 0 19:51 ? 00:00:00 asm_lgwr_+ASM1

grid 42046 1 0 19:51 ? 00:00:00 asm_ckpt_+ASM1

grid 42048 1 0 19:51 ? 00:00:00 asm_smon_+ASM1

grid 42050 1 0 19:51 ? 00:00:01 asm_rbal_+ASM1

grid 42052 1 0 19:51 ? 00:00:00 asm_gmon_+ASM1

grid 42054 1 0 19:51 ? 00:00:00 asm_mmon_+ASM1

grid 42056 1 0 19:51 ? 00:00:00 asm_mmnl_+ASM1

grid 42058 1 0 19:51 ? 00:00:00 asm_lck0_+ASM1

grid 42108 1 0 19:51 ? 00:00:00 oracle+ASM1 (DESCRIPTION=(LOCAL=YES)(ADDRESS=(PROTOCOL=beq)))

grid 42133 1 0 19:51 ? 00:00:01 oracle+ASM1_ocr (DESCRIPTION=(LOCAL=YES)(ADDRESS=(PROTOCOL=beq)))

grid 42138 1 0 19:51 ? 00:00:00 asm_asmb_+ASM1

grid 42140 1 0 19:51 ? 00:00:00 oracle+ASM1_asmb_+asm1 (DESCRIPTION=(LOCAL=YES)(ADDRESS=(PROTOCOL=beq)))

grid 42645 1 0 19:52 ? 00:00:00 oracle+ASM1 (DESCRIPTION=(LOCAL=YES)(ADDRESS=(PROTOCOL=beq)))

grid 43130 1 0 19:53 ? 00:00:00 /u01/app/11.2.0/grid/bin/tnslsnr LISTENER_SCAN1 -inherit

grid 46617 1 0 20:12 ? 00:00:00 /u01/app/11.2.0/grid/bin/tnslsnr LISTENER -inherit

grid 58540 53617 0 21:05 pts/0 00:00:00 egrep -i asm|listener
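The process list above can be reduced to the ASM instance name alone. The sketch below assumes the instance is identified by its `asm_pmon_<instance>` background process, as in the listing; the function name `asm_instances` is hypothetical.

```shell
# Read `ps -ef` output on stdin and print the ASM instance name(s),
# taken from the last field of each asm_pmon_<instance> process line.
asm_instances() {
    awk '$NF ~ /^asm_pmon_/ { sub(/^asm_pmon_/, "", $NF); print $NF }'
}

# Example:
#   ps -ef | asm_instances
```

Matching on the last field rather than grepping the whole line avoids picking up the filter command itself, as the `egrep` line in the listing above does.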

(3) Cluster status

[grid@rac1 grid]$ crsctl check crs

CRS-4638: Oracle High Availability Services is online

CRS-4537: Cluster Ready Services is online

CRS-4529: Cluster Synchronization Services is online

CRS-4533: Event Manager is online
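For scripted checks, the four status lines can be collapsed into a single exit status. A minimal sketch, assuming healthy services are reported with lines ending in "is online" as shown above; the function name `crs_all_online` is hypothetical.

```shell
# Read `crsctl check crs` output on stdin. Succeed only if at least one
# CRS-* status line is present and every such line ends with "is online".
crs_all_online() {
    awk '/^CRS-[0-9]+:/ { n++; if ($0 !~ /is online[[:space:]]*$/) bad++ }
         END { exit !(n > 0 && bad == 0) }'
}

# Example:
#   crsctl check crs | crs_all_online && echo "cluster stack is up"
```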

(4) Resource status

[grid@rac1 grid]$ crsctl stat res -t

--------------------------------------------------------------------------------

NAME TARGET STATE SERVER STATE_DETAILS

--------------------------------------------------------------------------------

Local Resources

--------------------------------------------------------------------------------

ora.DATA.dg

ONLINE ONLINE rac1

ONLINE ONLINE rac2

ora.LISTENER.lsnr

ONLINE ONLINE rac1

ONLINE ONLINE rac2

ora.asm

ONLINE ONLINE rac1 Started

ONLINE ONLINE rac2 Started

ora.gsd

OFFLINE OFFLINE rac1

OFFLINE OFFLINE rac2

ora.net1.network

ONLINE ONLINE rac1

ONLINE ONLINE rac2

ora.ons

ONLINE ONLINE rac1

ONLINE ONLINE rac2

ora.registry.acfs

ONLINE ONLINE rac1

ONLINE ONLINE rac2

--------------------------------------------------------------------------------

Cluster Resources

--------------------------------------------------------------------------------

ora.LISTENER_SCAN1.lsnr

1 ONLINE ONLINE rac1

ora.cvu

1 ONLINE ONLINE rac1

ora.oc4j

1 ONLINE ONLINE rac1

ora.rac1.vip

1 ONLINE ONLINE rac1

ora.rac2.vip

1 ONLINE ONLINE rac2

ora.scan1.vip

1 ONLINE ONLINE rac1
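In the listing above, only ora.gsd is OFFLINE, which is its default state in 11.2. To spot any other offline resource quickly, the output can be filtered as in this sketch; the function name `offline_resources` is hypothetical, and it assumes each resource name starts a line with `ora.` and is followed by its per-node state lines.

```shell
# Read `crsctl stat res -t` output on stdin and print each resource
# name once if any of its state lines contains OFFLINE.
offline_resources() {
    awk '/^ora\./ { name = $1; next }
         /OFFLINE/ && name != "" && !(name in seen) { seen[name] = 1; print name }'
}

# Example:
#   crsctl stat res -t | offline_resources
```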

(5) Cluster nodes

[grid@rac1 grid]$ olsnodes -n

rac1 1

rac2 2

(6) Cluster version

[grid@rac1 grid]$ crsctl query crs activeversion

Oracle Clusterware active version on the cluster is [11.2.0.4.0]

(7) Oracle Cluster Registry (OCR)

[grid@rac1 grid]$ ocrcheck

Status of Oracle Cluster Registry is as follows :

Version : 3

Total space (kbytes) : 262120

Used space (kbytes) : 2744

Available space (kbytes) : 259376

ID : 760182332

Device/File Name : +DATA

Device/File integrity check succeeded

Device/File not configured

Device/File not configured

Device/File not configured

Device/File not configured

Cluster registry integrity check succeeded

Logical corruption check bypassed due to non-privileged user
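The free space reported by `ocrcheck` is the total minus the used space. The sketch below recomputes it from the two lines shown above; the function name `ocr_free_kb` is hypothetical.

```shell
# Read `ocrcheck` output on stdin and print total minus used space (KB).
ocr_free_kb() {
    awk -F: '/Total space \(kbytes\)/ { total = $2 + 0 }
             /Used space \(kbytes\)/  { used = $2 + 0 }
             END { print total - used }'
}

# Example:
#   ocrcheck | ocr_free_kb
```

With the values above (262120 total, 2744 used), this gives 259376, which matches the reported available space.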

(8) Voting disk check

[grid@rac1 grid]$ crsctl query css votedisk

## STATE File Universal Id File Name Disk group

-- ----- ----------------- --------- ---------

1. ONLINE 928c8db2cb4f4f0abf52bf43726856e0 (/dev/asm-diskb) [DATA]

Located 1 voting disk(s).
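The number of ONLINE voting disks can be counted from the same output. This is a minimal sketch, assuming each disk line begins with an index such as `1.` followed by its state; the function name `online_voting_disks` is hypothetical.

```shell
# Read `crsctl query css votedisk` output on stdin and print the
# number of voting disks whose state column is ONLINE.
online_voting_disks() {
    awk '$1 ~ /^[0-9]+\.$/ && $2 == "ONLINE" { n++ } END { print n + 0 }'
}

# Example:
#   crsctl query css votedisk | online_voting_disks
```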

(9) ASM check

[grid@rac1 grid]$ srvctl config asm -a

ASM home: /u01/app/11.2.0/grid

ASM listener: LISTENER

ASM is enabled.

[grid@rac1 ~]$ sqlplus / as sysasm

SQL*Plus: Release 11.2.0.4.0 Production on Sat Jul 11 21:22:44 2015

Copyright (c) 1982, 2013, Oracle. All rights reserved.

Connected to:

Oracle Database 11g Enterprise Edition Release 11.2.0.4.0 - 64bit Production

With the Real Application Clusters and Automatic Storage Management options

SQL> show parameter spfile

NAME TYPE VALUE

------------------------------------ ----------- ------------------------------

spfile string +DATA/rac-cluster/asmparameter

file/registry.253.884807351

SQL> select path from v$asm_disk;

PATH

--------------------------------------------------------------------------------

/dev/asm-diskc

/dev/asm-diskd

/dev/asm-diskb

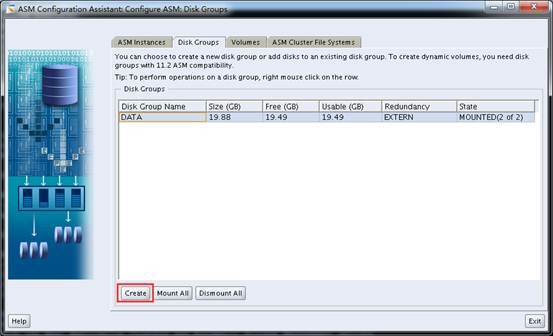

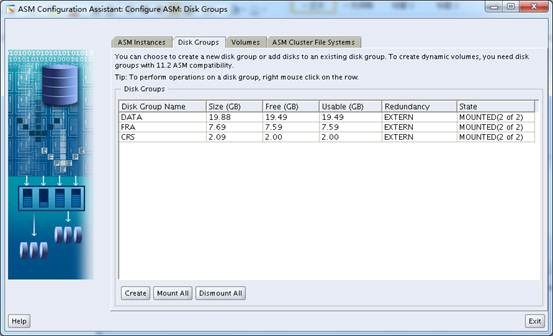

As the grid user, run asmca to create the ASM disk groups named FRA and CRS.

[grid@rac1 ~]$ export DISPLAY=192.168.230.1:0.0

[grid@rac1 ~]$ asmca

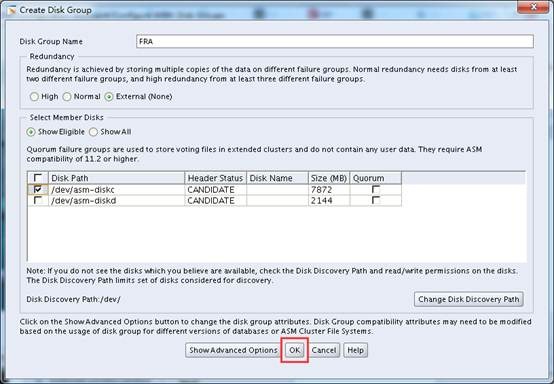

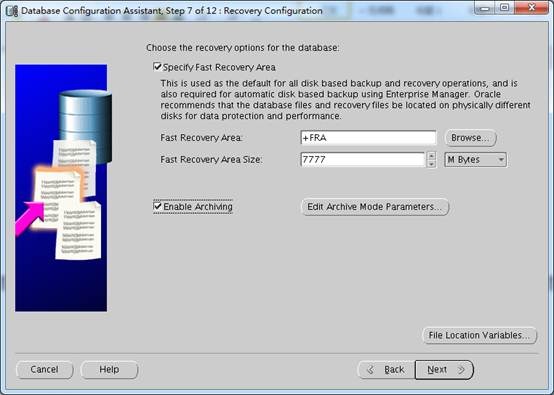

Create the FRA disk group.

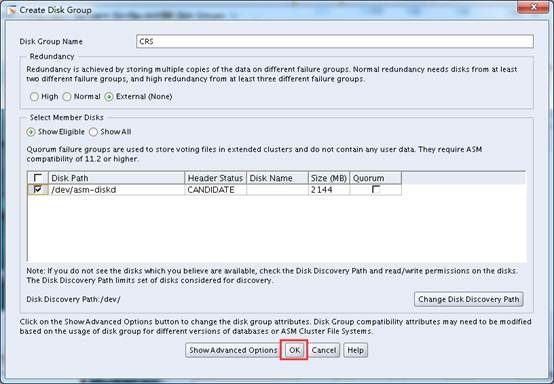

Create the CRS disk group.

Unzip the database installation packages in the oracle user's home directory.

[root@rac1 ~]# su - oracle

[oracle@rac1 ~]$ unzip /mnt/hgfs/data/oracle/software/11204/p13390677_112040_Linux-x86-64_1of7.zip

[oracle@rac1 ~]$ unzip /mnt/hgfs/data/oracle/software/11204/p13390677_112040_Linux-x86-64_2of7.zip

[oracle@rac1 ~]$ export DISPLAY=192.168.230.1:0.0

[oracle@rac1 ~]$ cd database/

[oracle@rac1 database]$ ./runInstaller

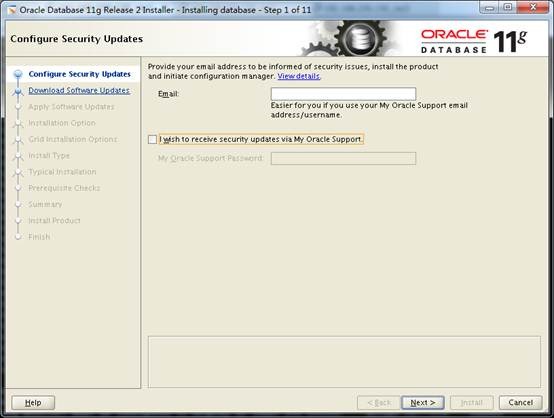

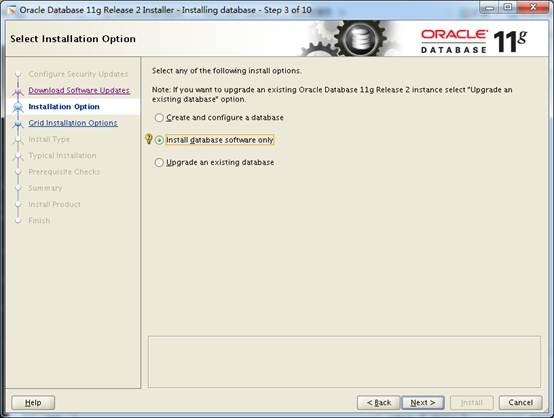

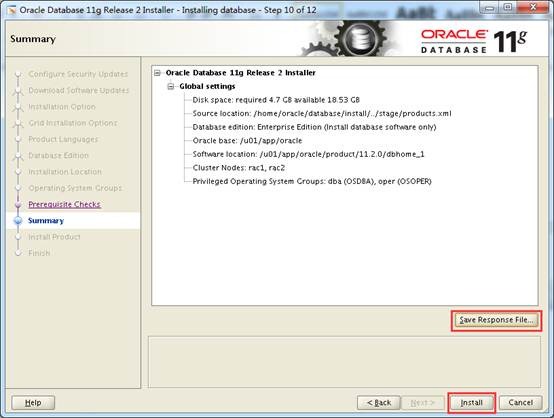

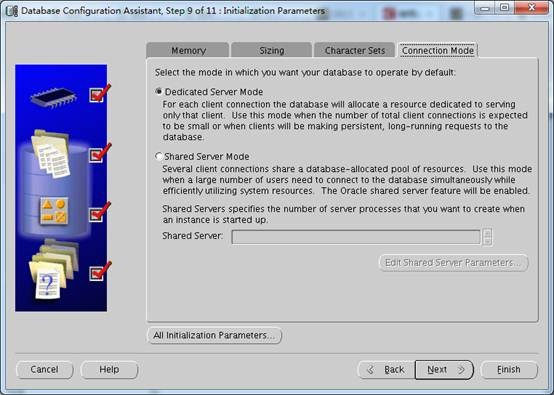

Skip receiving security updates.

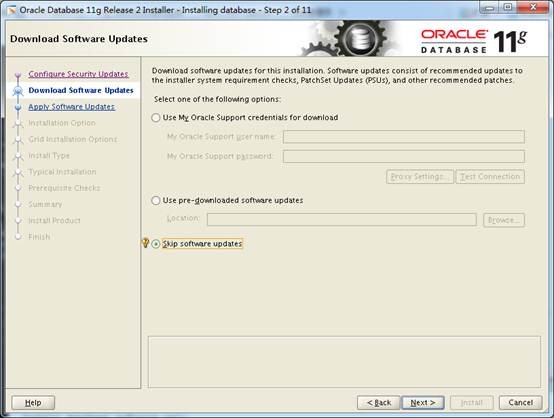

Skip software updates.

Install the database software only for now.

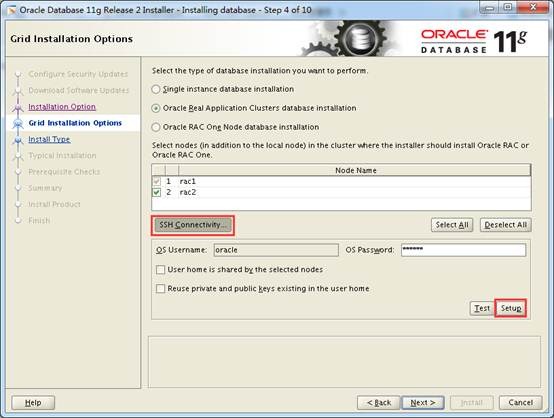

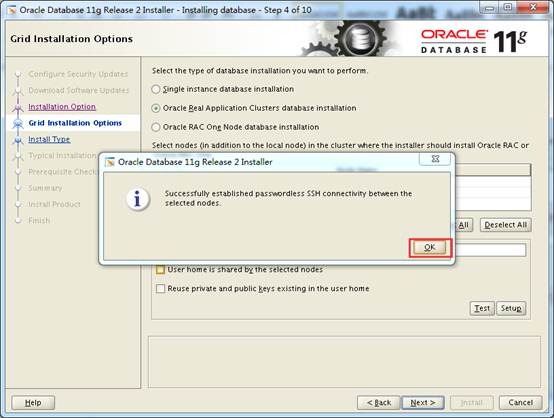

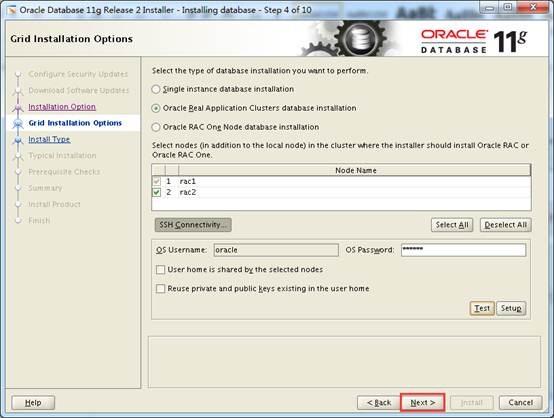

Configure SSH equivalence automatically.

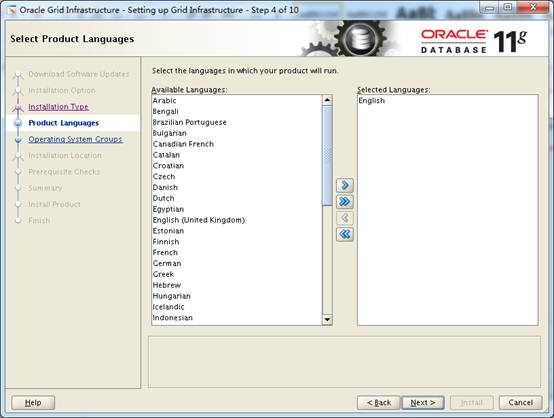

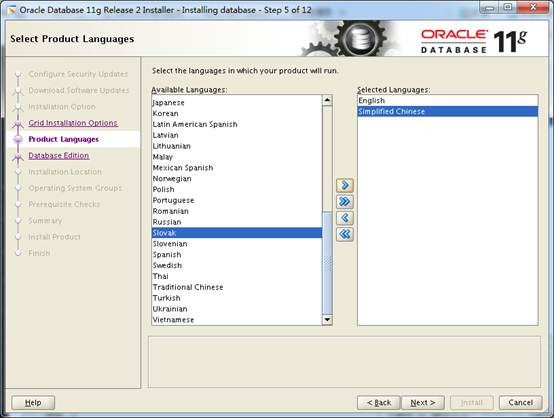

Select the languages.

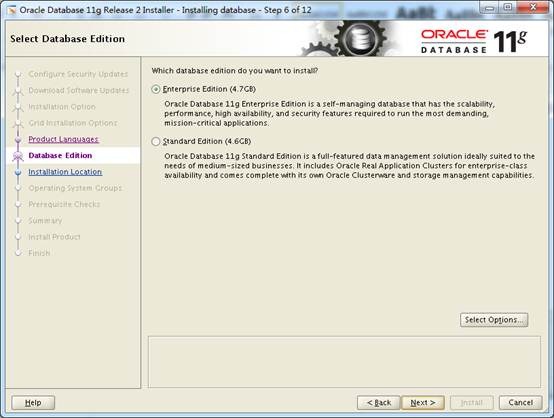

Select Enterprise Edition.

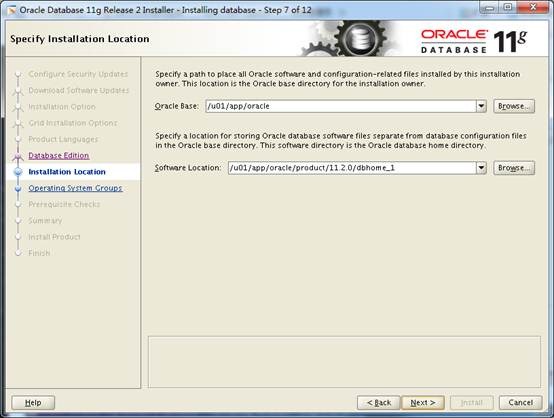

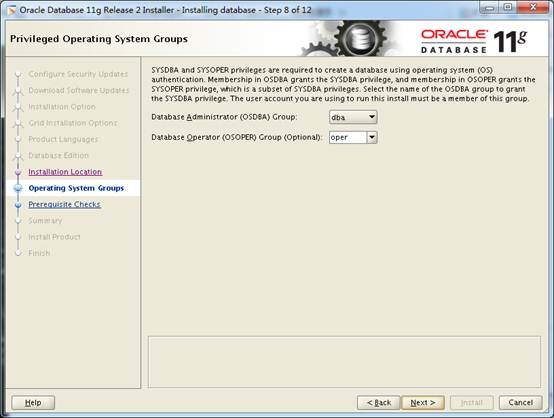

Select the groups.

After the prerequisite check, the failed items are listed. Click Fix & Check Again to fix them and rerun the check.

[root@rac1 ~]# /tmp/CVU_11.2.0.4.0_oracle/runfixup.sh

Response file being used is :/tmp/CVU_11.2.0.4.0_oracle/fixup.response

Enable file being used is :/tmp/CVU_11.2.0.4.0_oracle/fixup.enable

Log file location: /tmp/CVU_11.2.0.4.0_oracle/orarun.log

uid=1101(oracle) gid=6000(oinstall) groups=6000(oinstall),5001(asmdba),6001(dba),6002(oper)

[root@rac2 ~]# /tmp/CVU_11.2.0.4.0_oracle/runfixup.sh

Response file being used is :/tmp/CVU_11.2.0.4.0_oracle/fixup.response

Enable file being used is :/tmp/CVU_11.2.0.4.0_oracle/fixup.enable

Log file location: /tmp/CVU_11.2.0.4.0_oracle/orarun.log

uid=1101(oracle) gid=6000(oinstall) groups=6000(oinstall),5001(asmdba),6001(dba),6002(oper)

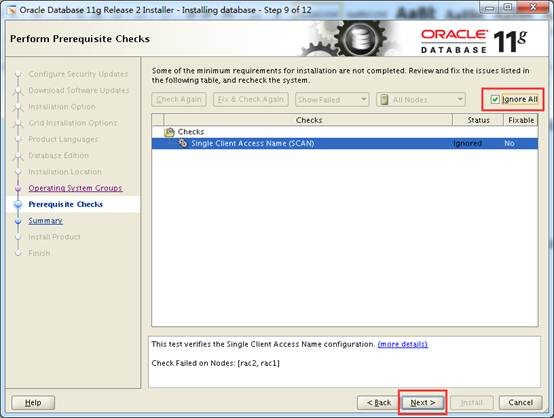

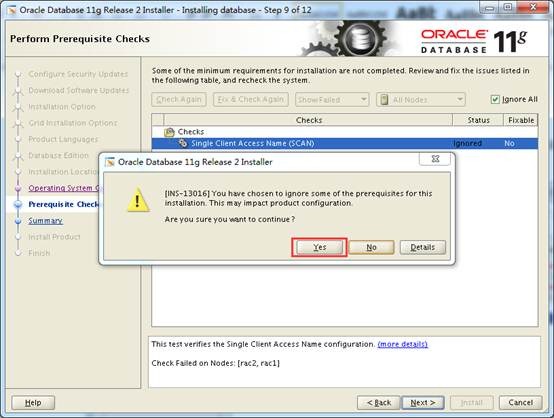

Ignore the warning about a single SCAN IP.

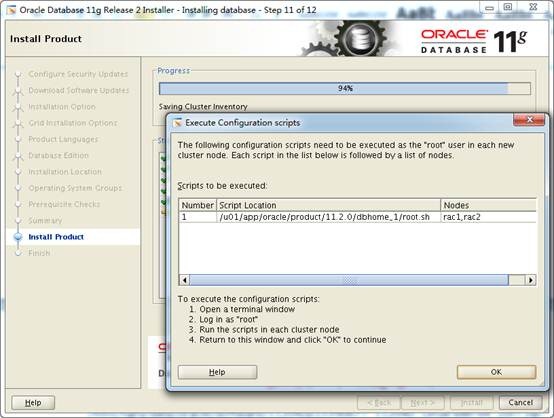

Save the response file, then start the installation.

[root@rac1 ~]# /u01/app/oracle/product/11.2.0/dbhome_1/root.sh

Performing root user operation for Oracle 11g

The following environment variables are set as:

ORACLE_OWNER= oracle

ORACLE_HOME= /u01/app/oracle/product/11.2.0/dbhome_1

Enter the full pathname of the local bin directory: [/usr/local/bin]:

The contents of "dbhome" have not changed. No need to overwrite.

The contents of "oraenv" have not changed. No need to overwrite.

The contents of "coraenv" have not changed. No need to overwrite.

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

Finished product-specific root actions.

[root@rac2 ~]# /u01/app/oracle/product/11.2.0/dbhome_1/root.sh

Performing root user operation for Oracle 11g

The following environment variables are set as:

ORACLE_OWNER= oracle

ORACLE_HOME= /u01/app/oracle/product/11.2.0/dbhome_1

Enter the full pathname of the local bin directory: [/usr/local/bin]:

The contents of "dbhome" have not changed. No need to overwrite.

The contents of "oraenv" have not changed. No need to overwrite.

The contents of "coraenv" have not changed. No need to overwrite.

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

Finished product-specific root actions.

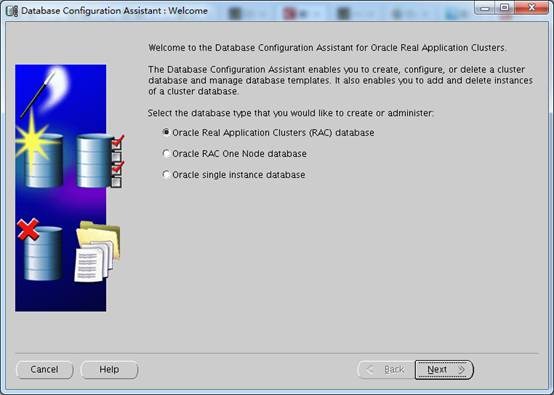

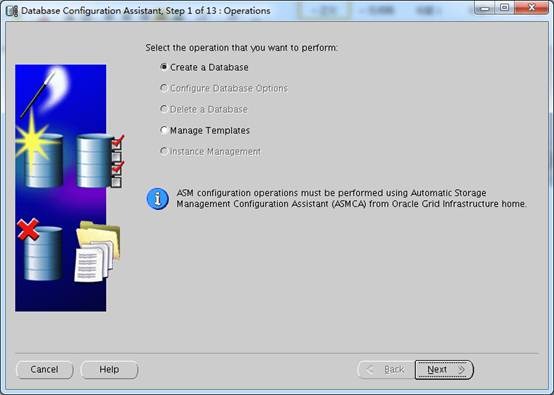

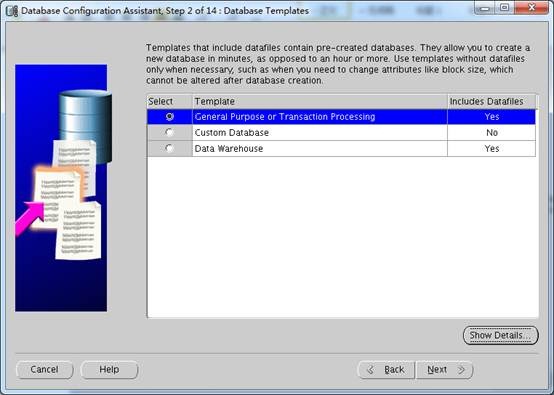

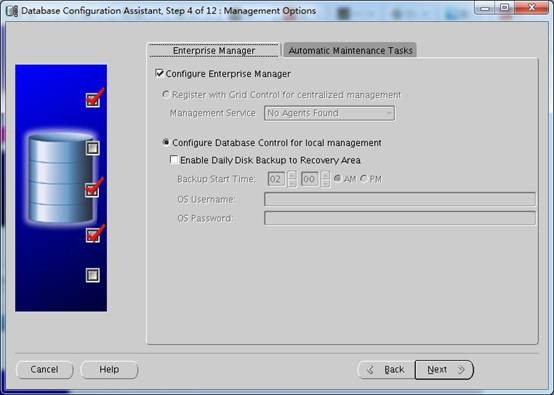

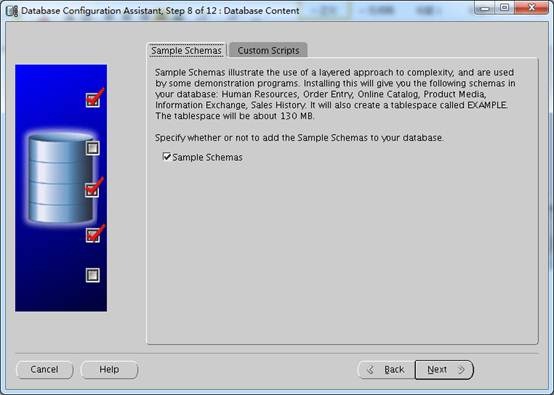

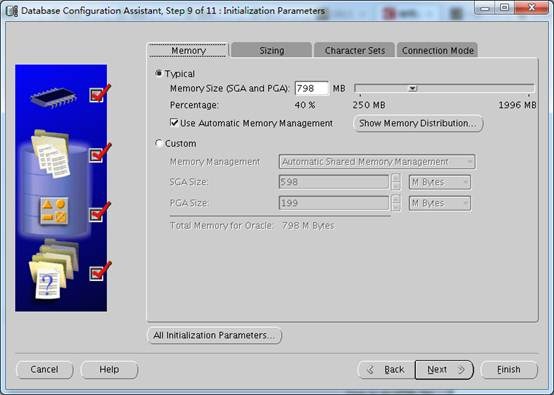

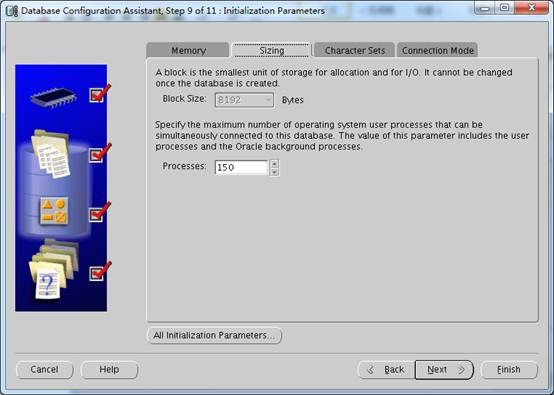

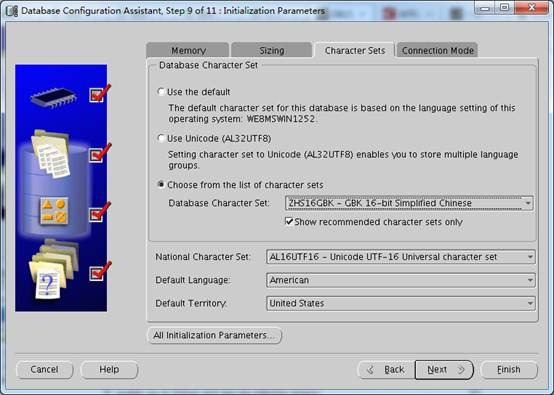

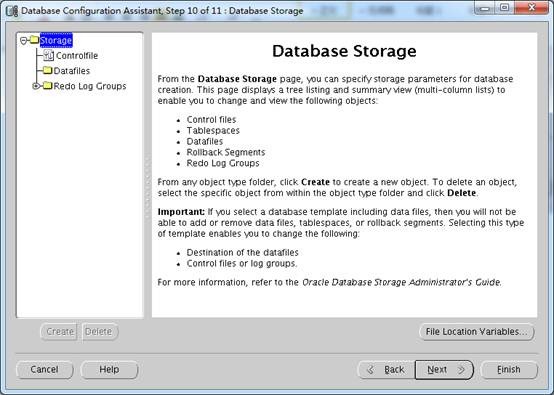

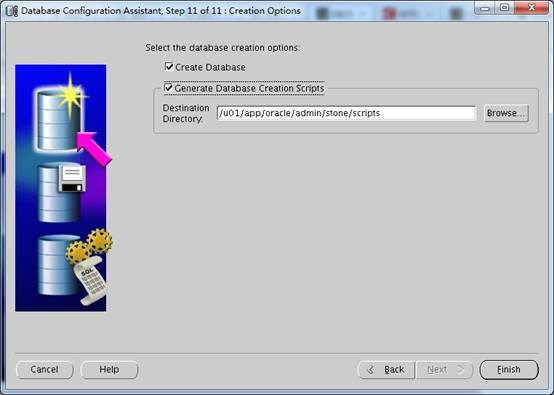

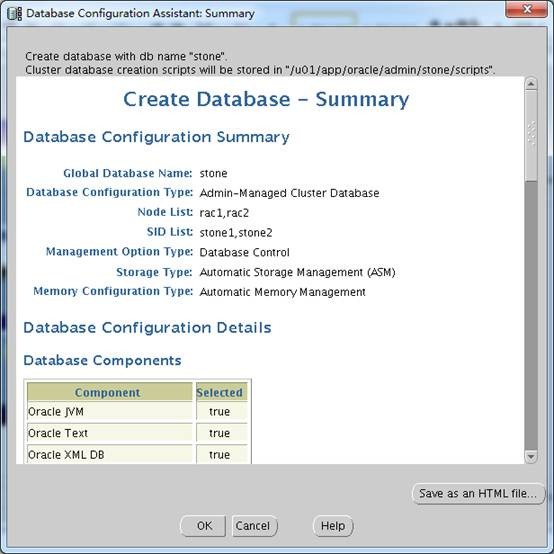

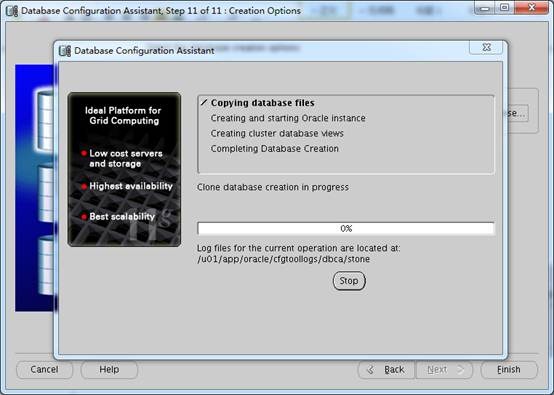

[oracle@rac1 database]$ dbca

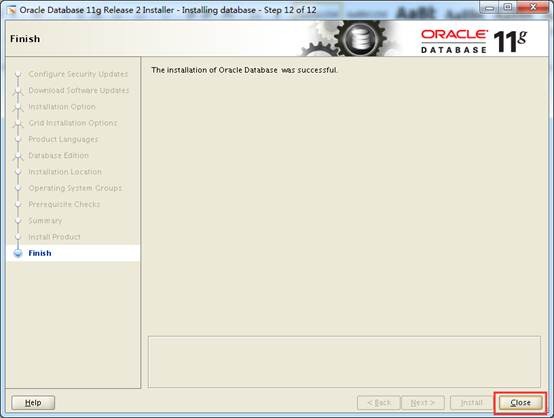

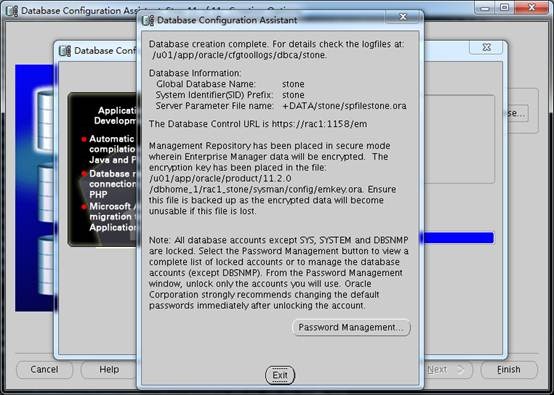

After dbca completes, check the state of the cluster resources.

[root@rac1 ~]# su - grid

[grid@rac1 ~]$ crsctl stat res -t

--------------------------------------------------------------------------------

NAME TARGET STATE SERVER STATE_DETAILS

--------------------------------------------------------------------------------

Local Resources

--------------------------------------------------------------------------------

ora.CRS.dg

ONLINE ONLINE rac1

ONLINE ONLINE rac2

ora.DATA.dg

ONLINE ONLINE rac1

ONLINE ONLINE rac2

ora.FRA.dg

ONLINE ONLINE rac1

ONLINE ONLINE rac2

ora.LISTENER.lsnr

ONLINE ONLINE rac1

ONLINE ONLINE rac2

ora.asm

ONLINE ONLINE rac1 Started

ONLINE ONLINE rac2 Started

ora.gsd

OFFLINE OFFLINE rac1

OFFLINE OFFLINE rac2

ora.net1.network

ONLINE ONLINE rac1

ONLINE ONLINE rac2

ora.ons

ONLINE ONLINE rac1

ONLINE ONLINE rac2

ora.registry.acfs

ONLINE ONLINE rac1

ONLINE ONLINE rac2

--------------------------------------------------------------------------------

Cluster Resources

--------------------------------------------------------------------------------

ora.LISTENER_SCAN1.lsnr

1 ONLINE ONLINE rac1

ora.cvu

1 ONLINE ONLINE rac1

ora.oc4j

1 ONLINE ONLINE rac1

ora.rac1.vip

1 ONLINE ONLINE rac1

ora.rac2.vip

1 ONLINE ONLINE rac2

ora.scan1.vip

1 ONLINE ONLINE rac1

ora.stone.db

1 ONLINE ONLINE rac1 Open

2 ONLINE ONLINE rac2 Open
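
Scanning this listing by eye gets tedious as the number of resources grows. The following is a minimal sketch that reads `crsctl stat res -t` output on standard input and prints only the resource instances whose STATE is not ONLINE. The function name `offline_resources` is hypothetical, and ora.gsd is skipped on the assumption that it is left OFFLINE, which is the default in 11.2.

```shell
# Hypothetical filter: read `crsctl stat res -t` output on stdin and
# print "<resource> <state> <server>" for every instance not ONLINE.
offline_resources() {
    awk '
        /^ora\./ { res = $1; next }      # remember the current resource name
        res == "ora.gsd" { next }        # OFFLINE by default in 11.2, ignore
        {
            # Local resources:   TARGET STATE SERVER
            # Cluster resources: N TARGET STATE SERVER
            i = ($1 ~ /^[0-9]+$/) ? 2 : 1
            if ($i ~ /^(ONLINE|OFFLINE)$/ && $(i + 1) != "ONLINE")
                print res, $(i + 1), $(i + 2)
        }
    '
}

# Example, run as the grid user:
#   crsctl stat res -t | offline_resources
```

For the output shown above the filter prints nothing, which is the expected result for a healthy cluster.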

[oracle@rac1 ~]$ sqlplus / as sysdba

SQL*Plus: Release 11.2.0.4.0 Production on Sat Jul 11 23:57:20 2015

Copyright (c) 1982, 2013, Oracle. All rights reserved.

Connected to an idle instance.

SQL> startup

ORA-01078: failure in processing system parameters

LRM-00109: could not open parameter file '/u01/app/oracle/product/11.2.0/dbhome_1/dbs/initracdb1.ora'

[oracle@rac1 ~]$ cd /u01/app/oracle/admin/stone/pfile/

[oracle@rac1 pfile]$ cp init.ora.6112015233250 /u01/app/oracle/product/11.2.0/dbhome_1/dbs/initracdb1.ora

SQL> startup

ORA-00845: MEMORY_TARGET not supported on this system

[root@rac1 ~]# vim /etc/fstab

[root@rac1 ~]# grep tmpfs /etc/fstab

tmpfs /dev/shm tmpfs defaults,size=2G 0 0

[root@rac1 ~]# mount -o remount /dev/shm/
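
ORA-00845 is raised when /dev/shm offers less space than MEMORY_TARGET requires, so it helps to compare the two before retrying the startup. The following is a minimal sketch; the helper names `shm_avail_kb` and `shm_covers_target` are hypothetical, and the 1536 MB figure in the usage comment is only a placeholder because the actual MEMORY_TARGET value is not shown in this walkthrough.

```shell
# Extract the "Available" column (in KB) from `df -Pk` output on stdin.
shm_avail_kb() {
    awk 'NR == 2 { print $4 }'
}

# Succeed when the available tmpfs space (KB) covers a memory
# target given in MB.
shm_covers_target() {
    local avail_kb="$1" target_mb="$2"
    [ "$avail_kb" -ge $(( target_mb * 1024 )) ]
}

# Example, with a placeholder target of 1536 MB:
#   shm_covers_target "$(df -Pk /dev/shm | shm_avail_kb)" 1536 \
#       || echo "/dev/shm is too small for MEMORY_TARGET"
```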

SQL> startup

ORA-29760: instance_number parameter not specified

The underlying cause of these startup errors is that ORACLE_SID does not match the local instance name: the first error referenced initracdb1.ora, which means ORACLE_SID was set to racdb1. Set it to stone1 and reconnect.

[oracle@rac1 ~]$ export ORACLE_SID=stone1
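
Instead of typing the instance name by hand, it can be looked up from the cluster. The following is a minimal sketch that picks the instance for a given node out of `srvctl status database -d stone` output; it assumes the usual "Instance <name> is running on node <node>" line format, and `instance_on_node` is a hypothetical helper.

```shell
# Hypothetical helper: read `srvctl status database -d <db>` output on
# stdin and print the instance name reported for the given node.
instance_on_node() {
    awk -v node="$1" '$1 == "Instance" && $NF == node { print $2 }'
}

# Example, run as the oracle user on either node:
#   export ORACLE_SID=$(srvctl status database -d stone | instance_on_node "$(hostname -s)")
```

Placing the export in the oracle user's profile on each node would avoid connecting to an idle instance in later sessions.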

[oracle@rac1 ~]$ sqlplus / as sysdba

SQL*Plus: Release 11.2.0.4.0 Production on Sun Jul 12 00:21:01 2015

Copyright (c) 1982, 2013, Oracle. All rights reserved.

Connected to:

Oracle Database 11g Enterprise Edition Release 11.2.0.4.0 - 64bit Production

With the Partitioning, Real Application Clusters, Automatic Storage Management, OLAP,

Data Mining and Real Application Testing options

SQL> select instance_name from v$instance;

INSTANCE_NAME

----------------

stone1

SQL> select name from v$database;

NAME

---------

STONE

SQL> select INSTANCE_NAME,HOST_NAME,VERSION,STARTUP_TIME,STATUS,ACTIVE_STATE,INSTANCE_ROLE,DATABASE_STATUS from gv$INSTANCE;

INSTANCE_NAME HOST_NAME VERSION STARTUP_T STATUS ACTIVE_ST INSTANCE_ROLE DATABASE_STATUS

---------------- ---------- ----------------- --------- ------------ --------- ------------------ -----------------

stone1 rac1 11.2.0.4.0 11-JUL-15 OPEN NORMAL PRIMARY_INSTANCE ACTIVE

stone2 rac2 11.2.0.4.0 11-JUL-15 OPEN NORMAL PRIMARY_INSTANCE ACTIVE