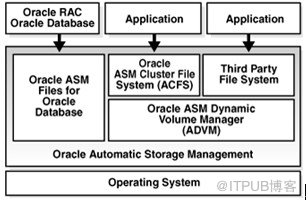

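The figure shows how RAC and ASM are layered in an 11g environment.

In an Oracle 10g RAC environment, by contrast, the CRS information is stored on raw devices, so the correct order there is to shut down ASM first and then CRS.

Oracle High Availability Services Daemon (OHASD): This process anchors the lower part of the Oracle Clusterware stack, which consists of processes that facilitate cluster operations.

When CRS starts in 11gR2, a message reports that OHASD has been started. So which resources does the stack anchored by OHASD include? They can be listed with the following command: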

[grid@oracle1 ~]$ crsctl status resource -t

--------------------------------------------------------------------------------

NAME TARGET STATE SERVER STATE_DETAILS

--------------------------------------------------------------------------------

Local Resources

--------------------------------------------------------------------------------

ora.DATADG.dg

ONLINE ONLINE oracle1

ONLINE ONLINE oracle2

ora.FRADG.dg

ONLINE ONLINE oracle1

ONLINE ONLINE oracle2

ora.LISTENER.lsnr

ONLINE ONLINE oracle1

ONLINE ONLINE oracle2

ora.OCRVT.dg

ONLINE ONLINE oracle1

ONLINE ONLINE oracle2

ora.asm

ONLINE ONLINE oracle1 Started

ONLINE ONLINE oracle2 Started

ora.gsd

OFFLINE OFFLINE oracle1

OFFLINE OFFLINE oracle2

ora.net1.network

ONLINE ONLINE oracle1

ONLINE ONLINE oracle2

ora.ons

ONLINE ONLINE oracle1

ONLINE ONLINE oracle2

ora.registry.acfs

ONLINE ONLINE oracle1

ONLINE ONLINE oracle2

--------------------------------------------------------------------------------

Cluster Resources

-------------------------------------------------------------------------------

ora.LISTENER_SCAN1.lsnr

1 ONLINE ONLINE oracle1

ora.cvu

1 ONLINE ONLINE oracle2

ora.oc4j

1 ONLINE ONLINE oracle2

ora.oracle1.vip

1 ONLINE ONLINE oracle1

ora.oracle2.vip

1 ONLINE ONLINE oracle2

ora.scan1.vip

1 ONLINE ONLINE oracle1

ora.sjjczr.db

1 ONLINE ONLINE oracle1 Open

2 ONLINE ONLINE oracle2 Open

[grid@oracle1 ~]$

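In Oracle 10g, the CRS resources comprise GSD (Global Services Daemon), ONS (Oracle Notification Service), VIP, Database, Instance, and Service. In 11.2 the CRSD resources were reclassified into Local Resources and Cluster Resources, which are the two groups in the listing above. The resources that OHASD itself manages (the lower stack, such as ora.cssd and ora.crsd) do not appear in this listing; they can be displayed with crsctl status resource -t -init.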
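To act on this listing from a script, the output can be parsed into rows. The following is a minimal Python sketch, assuming the layout shown above (a resource name line starting with ora., followed by one state line per server). The names parse_crsctl_status and not_online are illustrative and not part of any Oracle tool.

```python
STATES = {"ONLINE", "OFFLINE", "INTERMEDIATE", "UNKNOWN"}


def parse_crsctl_status(text):
    """Parse 'crsctl status resource -t' output into (name, target, state, server) rows."""
    rows = []
    current = None  # resource whose state lines are currently being read
    for raw in text.splitlines():
        tokens = raw.split()
        if not tokens or tokens[0].startswith("-"):
            continue  # blank line or dashed separator
        if tokens[0].startswith("ora."):
            current = tokens[0]
            continue
        # Cluster resources carry a leading cardinality number (1, 2, ...).
        if tokens[0].isdigit():
            tokens = tokens[1:]
        if current and len(tokens) >= 2 and tokens[0] in STATES and tokens[1] in STATES:
            server = tokens[2] if len(tokens) > 2 else ""
            rows.append((current, tokens[0], tokens[1], server))
    return rows


def not_online(rows):
    """Return the rows whose current state is anything other than ONLINE."""
    return [row for row in rows if row[2] != "ONLINE"]
```

Applied to the listing above, not_online would report only the ora.gsd rows, which are expected to be OFFLINE in this release.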

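1. Shutdown and startup commands for Oracle 11g RAC

1.1 Using crsctl stop has / crsctl stop crs

In Oracle 11gR2, the cluster is stopped and started as the root user with the following commands:

# crsctl stop has [-f]

# crsctl start has

crsctl stop has takes a single optional parameter, -f. The command stops HAS only on the server where it is run, not on every node, so to shut down the whole RAC you must run it on each node.

The following two commands, which use crs instead of has, have exactly the same effect:

# crsctl stop crs [-f]

# crsctl start crs

I remember the first time I installed Oracle 11gR2 RAC + ASM in a production environment. After the installation, the output of crs_stat -t did not look right (node 2 had noticeably fewer resources ONLINE than node 1), so I tried to restart CRS and ASM with # crsctl stop crs (without the -f option), but it never went smoothly, and in the end I rebooted the servers. In hindsight, crsctl stop has -f would also have worked, and the reboot was unnecessary.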

Starting HAS:

[root@rac1 bin]# ./crsctl start has

CRS-4123:Oracle High Availability Services has been started.

[root@rac1 bin]#

The output above mentions only HAS, but all the resources managed by HAS are subsequently started as well. You can verify this with the crs_stat command.

[grid@oracle1 ~]$ crs_stat -t -v

Name Type R/RA F/FT Target State Host

----------------------------------------------------------------------

ora.DATADG.dg ora....up.type 0/5 0/ ONLINE ONLINE oracle1

ora.FRADG.dg ora....up.type 0/5 0/ ONLINE ONLINE oracle1

ora....ER.lsnr ora....er.type 0/5 0/ ONLINE ONLINE oracle1

ora....N1.lsnr ora....er.type 0/5 0/0 ONLINE ONLINE oracle1

ora.OCRVT.dg ora....up.type 0/5 0/ ONLINE ONLINE oracle1

ora.asm ora.asm.type 0/5 0/ ONLINE ONLINE oracle1

ora.cvu ora.cvu.type 0/5 0/0 ONLINE ONLINE oracle2

ora.gsd ora.gsd.type 0/5 0/ OFFLINE OFFLINE

ora....network ora....rk.type 0/5 0/ ONLINE ONLINE oracle1

ora.oc4j ora.oc4j.type 0/1 0/2 ONLINE ONLINE oracle2

ora.ons ora.ons.type 0/3 0/ ONLINE ONLINE oracle1

ora....SM1.asm application 0/5 0/0 ONLINE ONLINE oracle1

ora....E1.lsnr application 0/5 0/0 ONLINE ONLINE oracle1

ora....le1.gsd application 0/5 0/0 OFFLINE OFFLINE

ora....le1.ons application 0/3 0/0 ONLINE ONLINE oracle1

ora....le1.vip ora....t1.type 0/0 0/0 ONLINE ONLINE oracle1

ora....SM2.asm application 0/5 0/0 ONLINE ONLINE oracle2

ora....E2.lsnr application 0/5 0/0 ONLINE ONLINE oracle2

ora....le2.gsd application 0/5 0/0 OFFLINE OFFLINE

ora....le2.ons application 0/3 0/0 ONLINE ONLINE oracle2

ora....le2.vip ora....t1.type 0/0 0/0 ONLINE ONLINE oracle2

ora....ry.acfs ora....fs.type 0/5 0/ ONLINE ONLINE oracle1

ora.scan1.vip ora....ip.type 0/0 0/0 ONLINE ONLINE oracle1

ora.sjjczr.db ora....se.type 0/2 0/1 ONLINE ONLINE oracle1

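1.2 Using crsctl stop cluster [-all]…

The syntax of the command is:

crsctl stop cluster [[-all]|[-n[...]]] [-f]

crsctl start cluster [[-all]|[-n[...]]]

This command supports more options and can operate on all nodes at once. If no option is given, it affects only the current node.

For example:

[root@rac1 ~]# ./crsctl start cluster -n rac1 rac2

-- Stop the cluster stack on the current node: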

[root@rac1 bin]# ./crsctl stop cluster
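The option rules in the syntax above (-all and -n are alternatives, and -f appears only for stop) can be captured in a small helper that builds the argument list before anything is executed. This is a minimal Python sketch; cluster_command is a hypothetical name, and the function only assembles the command without running it.

```python
def cluster_command(action, all_nodes=False, nodes=(), force=False, crsctl="crsctl"):
    """Build the argv for 'crsctl start|stop cluster' following the syntax shown above."""
    if action not in ("start", "stop"):
        raise ValueError("action must be 'start' or 'stop'")
    if all_nodes and nodes:
        raise ValueError("-all and -n are alternatives; give only one")
    if force and action != "stop":
        raise ValueError("-f appears only in the stop syntax")
    argv = [crsctl, action, "cluster"]
    if all_nodes:
        argv.append("-all")
    elif nodes:
        argv += ["-n", *nodes]
    if force:
        argv.append("-f")
    return argv


# With no options the command affects only the current node.
print(" ".join(cluster_command("stop")))
print(" ".join(cluster_command("start", nodes=["rac1", "rac2"])))
```

The resulting list can be handed to subprocess.run by a root-owned script; building it separately makes it easy to review what would run on a production cluster.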

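2. Stopping and starting resources

Stopping the cluster directly also stops the resources it manages. In practice, though, we more often need to start or stop individual resources, which is done with the SRVCTL command. Because it is used infrequently, its syntax is easy to forget; the -h option displays the command help:

[grid@rac1 ~]$ srvctl -h

The output of this command is too long to read comfortably, so it helps to ask for help on a single verb:

[grid@rac1 ~]$ srvctl start -h

Commonly used commands for checking and controlling RAC are as follows:

[root@vcdwdb1 ~]# su - grid

[grid@vcdwdb1 ~]$ srvctl -h

Usage: srvctl [-V] -- the full output is long; a selection is pasted below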

Usage: srvctl start nodeapps [-n ] [-g] [-v]

Usage: srvctl stop nodeapps [-n ] [-g] [-f] [-r] [-v]

Usage: srvctl status nodeapps

Usage: srvctl start vip { -n | -i } [-v]

Usage: srvctl stop vip { -n | -i } [-f] [-r] [-v]

Usage: srvctl relocate vip -i [-n ] [-f] [-v]

Usage: srvctl status vip { -n | -i } [-v]

Usage: srvctl start asm [-n ] [-o ]

Usage: srvctl stop asm [-n ] [-o ] [-f]

Usage: srvctl config asm [-a]

Usage: srvctl status asm [-n ] [-a] [-v]

Usage: srvctl config listener [-l ] [-a]

Usage: srvctl start listener [-l ] [-n ]

Usage: srvctl stop listener [-l ] [-n ] [-f]

Usage: srvctl status listener [-l ] [-n ] [-v]

Usage: srvctl start scan [-i ] [-n ]

Usage: srvctl stop scan [-i ] [-f]

Usage: srvctl relocate scan -i [-n ]

Usage: srvctl status scan [-i ] [-v]

Usage: srvctl config cvu

Usage: srvctl start cvu [-n ]

Usage: srvctl stop cvu [-f]

Usage: srvctl relocate cvu [-n ]

Usage: srvctl status cvu [-n ]

You can also drill down into the help for a specific verb, for example:

[grid@vcdwdb1 ~]$ srvctl start -h

The SRVCTL start command starts, Oracle Clusterware enabled, non-running objects.

Usage: srvctl start database -d [-o ] [-n ]

Usage: srvctl start instance -d {-n [-i ] | -i } [-o ]

Usage: srvctl start service -d [-s "" [-n | -i ] ] [-o ]

Usage: srvctl start nodeapps [-n ] [-g] [-v]

Usage: srvctl start vip { -n | -i } [-v]

Usage: srvctl start asm [-n ] [-o ]

Usage: srvctl start listener [-l ] [-n ]

Usage: srvctl start scan [-i ] [-n ]

Usage: srvctl start scan_listener [-n ] [-i ]

Usage: srvctl start oc4j [-v]

Usage: srvctl start home -o -s -n

Usage: srvctl start filesystem -d [-n ]

Usage: srvctl start diskgroup -g [-n ""]

Usage: srvctl start gns [-l ] [-n ] [-v]

Usage: srvctl start cvu [-n ]

For detailed help on each command and object and its options use:

srvctl

-h

[grid@vcdwdb1 ~]$ srvctl start listener -h

Starts the listener.

Usage: srvctl start listener [-l ] [-n ]

-l Listener name

-n Node name

-h Print usage

[grid@vcdwdb1 ~]$ srvctl status -h

The SRVCTL status command displays the current state of the object.

Usage: srvctl status database -d [-f] [-v]

Usage: srvctl status instance -d {-n | -i } [-f] [-v]

Usage: srvctl status service -d [-s ""] [-f] [-v]

Usage: srvctl status nodeapps

Usage: srvctl status vip { -n | -i } [-v]

Usage: srvctl status listener [-l ] [-n ] [-v]

Usage: srvctl status asm [-n ] [-a] [-v]

Usage: srvctl status scan [-i ] [-v]

Usage: srvctl status scan_listener [-i ] [-v]

Usage: srvctl status srvpool [-g ] [-a]

Usage: srvctl status server -n "" [-a]

Usage: srvctl status oc4j [-n ] [-v]

Usage: srvctl status home -o -s -n

Usage: srvctl status filesystem -d [-v]

Usage: srvctl status diskgroup -g [-n ""] [-a] [-v]

Usage: srvctl status cvu [-n ]

Usage: srvctl status gns [-n ] [-v]

For detailed help on each command and object and its options use:

srvctl

-h

[grid@vcdwdb1 ~]$ srvctl config -h

The SRVCTL config command displays the configuration of the object as stored in the OCR.

Usage: srvctl config database [-d [-a] ] [-v]

Usage: srvctl config service -d [-s ] [-v]

Usage: srvctl config nodeapps [-a] [-g] [-s]

Usage: srvctl config vip { -n | -i }

Usage: srvctl config network [-k ]

Usage: srvctl config asm [-a]

Usage: srvctl config listener [-l ] [-a]

Usage: srvctl config scan [-i ]

Usage: srvctl config scan_listener [-i ]

Usage: srvctl config srvpool [-g ]

Usage: srvctl config oc4j

Usage: srvctl config filesystem -d

Usage: srvctl config gns [-a] [-d] [-k] [-m] [-n ] [-p] [-s] [-V] [-q ] [-l] [-v]

Usage: srvctl config cvu

For detailed help on each command and object and its options use:

srvctl

-h

For example:

[grid@vcdwdb1 ~]$ srvctl config asm

ASM home: /u01/app/11.2.0/grid_home

ASM listener: LISTENER

[grid@vcdwdb1 ~]$ srvctl config asm -a

ASM home: /u01/app/11.2.0/grid_home

ASM listener: LISTENER

ASM is enabled.

[grid@vcdwdb1 ~]$ srvctl config listener -a

Name: LISTENER

Network: 1, Owner: grid

Home:

/u01/app/11.2.0/grid_home on nodes vcdwdb2,vcdwdb1

End points: TCP:1521

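In an Oracle 11g environment, the shutdown and startup sequences are as follows:

Shutdown sequence (stop the database -> stop the CRS cluster)

1. Stop the database:

Run the srvctl command as the oracle user.

Syntax: srvctl stop database -d dbname [-o immediate]

Effect: stops all instances of dbname in a single step.

[root@rac1 ~]# su - oracle

[oracle@oeltan1 ~]$ export ORACLE_UNQNAME=orcl

[oracle@oeltan1 ~]$ emctl stop dbconsole

[oracle@rac1 ~]$ srvctl stop database -d orcl ---- stop the instances on all nodes

Then check the status: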

[oracle@rac1 ~]$ srvctl status database -d orcl

Instance rac1 is not running on node rac1

Instance rac2 is not running on node rac2
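Before moving on to stop the cluster stack, a script may want to confirm that every instance really is down. The following is a minimal Python sketch that parses the status lines shown above; parse_db_status and all_stopped are illustrative names, and the sketch assumes the English message format of this output.

```python
import re

# Matches lines such as "Instance rac1 is not running on node rac1".
_STATUS_RE = re.compile(r"Instance (\S+) is (not )?running on node (\S+)")


def parse_db_status(text):
    """Map each instance name to a (node, is_running) pair."""
    status = {}
    for match in _STATUS_RE.finditer(text):
        instance, negated, node = match.groups()
        status[instance] = (node, negated is None)
    return status


def all_stopped(status):
    """True only if at least one instance was reported and none is running."""
    return bool(status) and not any(running for _, running in status.values())
```

Requiring at least one parsed instance guards against treating an empty or unexpected output as proof that the database is down.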

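2. Stop HAS (High Availability Services); this must be done as root

For reference, the syntax of the cluster-level crsctl stop/start cluster commands is:

crsctl stop cluster [[-all]|[-n[...]]] [-f]

crsctl start cluster [[-all]|[-n[...]]]

That command supports more options and can operate on all nodes at once. If no option is given, it affects only the current node.

[root@rac1 oracle]# cd /u01/grid/11.2.0/grid/bin

[root@rac1 bin]# ./crsctl stop has -f ---- stop the HAS stack on node rac1

[root@rac1 bin]# ./crsctl stop crs -f ---- stop the CRS stack on node rac1

[root@rac2 oracle]# cd /u01/grid/11.2.0/grid/bin

[root@rac2 bin]# ./crsctl stop has -f ---- stop the HAS stack on node rac2

[root@rac2 bin]# ./crsctl stop crs -f ---- stop the CRS stack on node rac2

crsctl stop has takes a single optional parameter, -f. The command stops HAS only on the server where it is run, not on every node, so to shut down the whole RAC you must run it on each node.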

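3. Stop the cluster services on the nodes; this must be done as root:

[root@rac1 oracle]# cd /u01/grid/11.2.0/grid/bin

Stop the services on all nodes:

[root@rac1 bin]# ./crsctl stop cluster -all

Stop the services on specific nodes:

[root@rac1 bin]# ./crsctl stop cluster -n rac1 rac2

Stop the cluster services on a single node:

[root@rac1 bin]# ./crsctl stop cluster ---- stop the cluster services on node rac1

[root@rac2 oracle]# cd /u01/grid/11.2.0/grid/bin

[root@rac2 bin]# ./crsctl stop cluster ---- stop the cluster services on node rac2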

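Oracle 11g R2 RAC starts automatically at boot by default. If you need to start it manually, bring the components up in the order HAS, Cluster, Database, because crsctl start cluster only works on a node where OHASD is already running.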
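The database-then-cluster shutdown order used above, and its reverse for startup, can be written down as data so that a wrapper script cannot get the order wrong. This is a minimal Python sketch under that assumption; shutdown_plan and startup_plan are hypothetical names, and each step is only described (user plus argv), not executed.

```python
def shutdown_plan(db_name):
    """Ordered (user, argv) steps: stop the database first, then the cluster stack."""
    return [
        ("oracle", ["srvctl", "stop", "database", "-d", db_name]),
        ("root", ["crsctl", "stop", "cluster", "-all"]),
    ]


def startup_plan(db_name):
    """Reverse of the shutdown plan, with each 'stop' verb replaced by 'start'."""
    steps = []
    for user, argv in reversed(shutdown_plan(db_name)):
        steps.append((user, [argv[0], "start"] + argv[2:]))
    return steps


for user, argv in startup_plan("orcl"):
    print(f"{user}: {' '.join(argv)}")
```

Deriving the startup plan from the shutdown plan keeps the two sequences consistent if a step is added later.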

4. Check the status of the cluster processes

[root@rac1 bin]# crsctl check cluster

Detailed output:

[root@rac1 bin]# crs_stat -t -v

Check the cluster status on the local node only:

[root@rac1 bin]# crsctl check crs

Startup sequence (start the CRS cluster -> start the database)

5. Start the cluster (CRS)

Start on all nodes:

[root@rac1 bin]# ./crsctl start cluster -n rac1 rac2

CRS-4123: Oracle High Availability Services has been started.

[root@rac1 bin]# ./crsctl start cluster -all

Start on a single node:

[root@rac1 oracle]# cd /u01/grid/11.2.0/grid/bin

[root@rac1 bin]# ./crsctl start cluster ---- start the cluster services on node rac1

[root@rac2 oracle]# cd /u01/grid/11.2.0/grid/bin

[root@rac2 bin]# ./crsctl start cluster ---- start the cluster services on node rac2

[root@rac2 ~]# ./crsctl check cluster

CRS-4537: Cluster Ready Services is online

CRS-4529: Cluster Synchronization Services is online

CRS-4533: Event Manager is online

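This command starts all RAC CRS-related processes in the background.

[root@rac2 ~]# ./crs_stat -t -v

CRS-0184: Cannot communicate with the CRS daemon.

Because start has brings up a large number of CRS processes, startup is fairly slow; on my machine it took about 5 minutes. Until the stack is fully up, crs_stat reports the error above. Wait patiently, then rerun the command below to confirm that all CRS-related processes and services have started.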
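Rather than rerunning the command by hand, the wait can be scripted as a polling loop that retries until the CRS-0184 error disappears or a timeout expires. The following is a minimal Python sketch; wait_until_ready, crs_ready, and run_crs_stat are illustrative names, the timeout and interval defaults are arbitrary, and run_crs_stat assumes crs_stat is on the PATH.

```python
import subprocess
import time


def crs_ready(output):
    """True once the output no longer contains the CRS-0184 error."""
    return "CRS-0184" not in output


def run_crs_stat():
    """Run crs_stat -t and return stdout and stderr combined (assumes crs_stat is on PATH)."""
    proc = subprocess.run(["crs_stat", "-t"], capture_output=True, text=True)
    return proc.stdout + proc.stderr


def wait_until_ready(check, timeout=600.0, interval=10.0,
                     sleep=time.sleep, clock=time.monotonic):
    """Call check() repeatedly until it returns True or the timeout expires."""
    deadline = clock() + timeout
    while True:
        if check():
            return True
        if clock() >= deadline:
            return False
        sleep(interval)
```

A typical call would be wait_until_ready(lambda: crs_ready(run_crs_stat())). The sleep and clock parameters exist only so the loop can be exercised without real delays.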

[root@rac2 ~]# ./crs_stat -t -v

Name Type R/RA F/FT Target State Host

----------------------------------------------------------------------

ora.DATA.dg ora....up.type 0/5 0/ ONLINE ONLINE rac1

ora....ER.lsnr ora....er.type 0/5 0/ ONLINE ONLINE rac1

ora....N1.lsnr ora....er.type 0/5 0/0 ONLINE ONLINE rac2

ora....N2.lsnr ora....er.type 0/5 0/0 ONLINE ONLINE rac1

ora....N3.lsnr ora....er.type 0/5 0/0 ONLINE ONLINE rac1

ora.asm ora.asm.type 0/5 0/ ONLINE ONLINE rac1

ora.cvu ora.cvu.type 0/5 0/0 ONLINE ONLINE rac1

ora.gsd ora.gsd.type 0/5 0/ OFFLINE OFFLINE

ora....network ora....rk.type 0/5 0/ ONLINE ONLINE rac1

ora.oc4j ora.oc4j.type 0/1 0/2 ONLINE ONLINE rac1

ora.ons ora.ons.type 0/3 0/ ONLINE ONLINE rac1

ora....SM1.asm application 0/5 0/0 ONLINE ONLINE rac1

ora....C1.lsnr application 0/5 0/0 ONLINE ONLINE rac1

ora.rac1.gsd application 0/5 0/0 OFFLINE OFFLINE

ora.rac1.ons application 0/3 0/0 ONLINE ONLINE rac1

ora.rac1.vip ora....t1.type 0/0 0/0 ONLINE ONLINE rac1

ora....SM2.asm application 0/5 0/0 ONLINE ONLINE rac2

ora....C2.lsnr application 0/5 0/0 ONLINE ONLINE rac2

ora.rac2.gsd application 0/5 0/0 OFFLINE OFFLINE

ora.rac2.ons application 0/3 0/0 ONLINE ONLINE rac2

ora.rac2.vip ora....t1.type 0/0 0/0 ONLINE ONLINE rac2

ora....ry.acfs ora....fs.type 0/5 0/ ONLINE ONLINE rac1

ora.scan1.vip ora....ip.type 0/0 0/0 ONLINE ONLINE rac2

ora.scan2.vip ora....ip.type 0/0 0/0 ONLINE ONLINE rac1

ora.scan3.vip ora....ip.type 0/0 0/0 ONLINE ONLINE rac1

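Notes:

Explanation:

ora.gsd is OFFLINE by default if there is no 9i database in the cluster.

ora.oc4j is OFFLINE in 11.2.0.1 as Database Workload Management (DBWLM) is unavailable. These can be ignored in 11gR2 RAC.

In other words:

ora.gsd is the cluster service process used to communicate with 9i databases. It is retained in the current release only for backward compatibility; its OFFLINE state does not affect the operation or performance of CRS and can be ignored.

ora.oc4j is a service process that takes effect in 11.2.0.2 and later, where it is used for DBWLM resource management; it is therefore not used in 11.2.0.1 and earlier.

6. Start HAS

Start on a single node: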

[root@rac1 ~]# cd /u01/grid/11.2.0/grid/bin

[root@rac1 ~]# ./crsctl start has -f

[root@rac1 ~]# ./crsctl start crs -f

[root@rac1 ~]# ./crsctl check crs

CRS-4638: Oracle High Availability Services is online

CRS-4537: Cluster Ready Services is online

CRS-4529: Cluster Synchronization Services is online

CRS-4533: Event Manager is online

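crsctl start has takes a single optional parameter, -f. The command starts HAS only on the server where it is run, not on every node, so to start the whole RAC you must run it on each node.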

[root@rac2 ~]# cd /u01/grid/11.2.0/grid/bin

[root@rac2 ~]# ./crsctl start has -f

[root@rac2 ~]# ./crsctl start crs -f

[root@rac2 ~]# ./crsctl check crs -f

CRS-4638: Oracle High Availability Services is online

CRS-4537: Cluster Ready Services is online

CRS-4529: Cluster Synchronization Services is online

CRS-4533: Event Manager is online

7. Start the database:

Run the srvctl command as the oracle user:

Syntax: srvctl start|stop|status database -d dbname [-o immediate]

Effect: starts all instances of dbname in a single step.

[oracle@rac1 ~]$ srvctl start database -d orcl ---- start the instances on all nodes

Then check the status:

[oracle@rac1 ~]$ srvctl status database -d orcl

Instance rac1 is running on node rac1

Instance rac2 is running on node rac2

Start EM:

[oracle@oeltan1 ~]$ emctl start dbconsole

8. Show the full resource names in detail and check their status

crsctl status resource -t

crsctl status resource

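9. Common srvctl commands

Operate on a specific instance of dbname:

srvctl start|stop|status instance -d -i

10. Show the configuration and status of all instances in the RAC

srvctl status|config database -d

11. Show or control the node applications (VIP, GSD, listener, ONS)

srvctl start|stop|status nodeapps -n

12. Manage the ASM service

srvctl start|stop|status|config asm -n [-i ] [-o]

srvctl config asm -a

srvctl status asm -a

13. Retrieve all environment settings:

srvctl getenv database -d [-i]

14. Set global environment variables:

srvctl setenv database -d -t LANG=en

15. Remove an existing database from the OCR

srvctl remove database -d

16. Add database instances to the OCR:

srvctl add instance -d -i -n

srvctl add instance -d -i -n

17. Check the listener status

srvctl status listener

srvctl config listener -a

SCAN configuration:

srvctl config scan

SCAN status (for the SCAN listeners, use srvctl status scan_listener):

srvctl status scan

Summary:

crsctl is a cluster-level command: it starts, stops, and otherwise manages all cluster resources as a whole.

srvctl is a service-level command: it starts, stops, and otherwise manages an individual service resource.

Appendix: detailed help for srvctl start and stop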

[root@rac2 ~]# srvctl start -h

The SRVCTL start command starts, Oracle Clusterware enabled, non-running objects.

Usage: srvctl start database -d [-o ] [-n ]

Usage: srvctl start instance -d {-n [-i ] | -i } [-o ]

Usage: srvctl start service -d [-s "" [-n | -i ] ] [-o ]

Usage: srvctl start nodeapps [-n ] [-g] [-v]

Usage: srvctl start vip { -n | -i } [-v]

Usage: srvctl start asm [-n ] [-o ]

Usage: srvctl start listener [-l ] [-n ]

Usage: srvctl start scan [-i ] [-n ]

Usage: srvctl start scan_listener [-n ] [-i ]

Usage: srvctl start oc4j [-v]

Usage: srvctl start home -o -s -n

Usage: srvctl start filesystem -d [-n ]

Usage: srvctl start diskgroup -g [-n ""]

Usage: srvctl start gns [-l ] [-n ] [-v]

Usage: srvctl start cvu [-n ]

For detailed help on each command and object and its options use:

srvctl

-h

[root@rac2 ~]# srvctl stop -h

The SRVCTL stop command stops, Oracle Clusterware enabled, starting or running objects.

Usage: srvctl stop database -d [-o ] [-f]

Usage: srvctl stop instance -d {-n | -i } [-o ] [-f]

Usage: srvctl stop service -d [-s "" [-n | -i ] ] [-f]

Usage: srvctl stop nodeapps [-n ] [-g] [-f] [-r] [-v]

Usage: srvctl stop vip { -n | -i } [-f] [-r] [-v]

Usage: srvctl stop asm [-n ] [-o ] [-f]

Usage: srvctl stop listener [-l ] [-n ] [-f]

Usage: srvctl stop scan [-i ] [-f]

Usage: srvctl stop scan_listener [-i ] [-f]

Usage: srvctl stop oc4j [-f] [-v]

Usage: srvctl stop home -o -s -n [-t ] [-f]

Usage: srvctl stop filesystem -d [-n ] [-f]

Usage: srvctl stop diskgroup -g [-n ""] [-f]

Usage: srvctl stop gns [-n ] [-f] [-v]

Usage: srvctl stop cvu [-f]

For detailed help on each command and object and its options use:

srvctl

-h

If you want to check if the database is running you can run:

ps -ef | grep smon

oracle 246196 250208 0 14:29:11 pts/0 0:00 grep smon

If you want to check if the database listeners are running you can run:

ps -ef | grep lsnr

root 204886 229874 0 14:30:07 pts/0 0:00 grep lsnr

Here the listeners are running:

ps -ef | grep lsnr

oracle 282660 1 0 14:07:34 - 0:00 /oracle/grid/crs/11.2/bin/tnslsnr LISTENER_SCAN2 -inherit

oracle 299116 250208 0 14:30:00 pts/0 0:00 grep lsnr

oracle 303200 1 0 14:23:44 - 0:00 /oracle/grid/crs/11.2/bin/tnslsnr LISTENER_SCAN1 -inherit

oracle 315432 1 0 14:07:35 - 0:00 /oracle/grid/crs/11.2/bin/tnslsnr LISTENER_SCAN3 -inherit

oracle 323626 1 0 14:07:34 - 0:00 /oracle/grid/crs/11.2/bin/tnslsnr LISTENER -inherit

If you want to check if any clusterware component is running you can run:

ps -ef | grep crs

root 204842 229874 0 14:27:19 pts/0 0:00 grep crs

Here the clusterware components (resources) are running:

ps -ef | grep crs

root 155856 1 1 14:05:47 - 0:22 /oracle/grid/crs/11.2/bin/ohasd.bin reboot

root 159940 1 0 14:07:08 - 0:01 /oracle/grid/crs/11.2/bin/oclskd.bin

oracle 221270 1 0 14:06:43 - 0:02 /oracle/grid/crs/11.2/bin/gpnpd.bin

root 225322 1 0 14:06:45 - 0:02 /oracle/grid/crs/11.2/bin/cssdmonitor

oracle 229396 1 0 14:06:41 - 0:00 /oracle/grid/crs/11.2/bin/gipcd.bin

oracle 233498 1 0 14:06:41 - 0:00 /oracle/grid/crs/11.2/bin/mdnsd.bin

root 253952 1 0 14:06:46 - 0:01 /oracle/grid/crs/11.2/bin/orarootagent.bin

root 258060 1 0 14:06:59 - 0:00 /oracle/grid/crs/11.2/bin/octssd.bin reboot

root 262150 1 0 14:06:47 - 0:00 /bin/sh /oracle/grid/crs/11.2/bin/ocssd

oracle 270344 262150 1 14:06:47 - 0:11 /oracle/grid/crs/11.2/bin/ocssd.bin

oracle 274456 156062 0 14:07:10 - 0:00 /oracle/grid/crs/11.2/bin/evmlogger.bin -o /oracle/grid/crs/11.2/evm/log/evmlogger.info -l /oracle/grid/crs/11.2/evm/log/evmlogger.log

oracle 282660 1 0 14:07:34 - 0:00 /oracle/grid/crs/11.2/bin/tnslsnr LISTENER_SCAN2 -inherit

root 286742 1 6 14:07:17 - 0:36 /oracle/grid/crs/11.2/bin/orarootagent.bin

oracle 303200 1 1 14:23:44 - 0:00 /oracle/grid/crs/11.2/bin/tnslsnr LISTENER_SCAN1 -inherit

oracle 315432 1 0 14:07:35 - 0:00 /oracle/grid/crs/11.2/bin/tnslsnr LISTENER_SCAN3 -inherit

oracle 323626 1 0 14:07:34 - 0:00 /oracle/grid/crs/11.2/bin/tnslsnr LISTENER -inherit

oracle 156062 1 0 14:07:01 - 0:02 /oracle/grid/crs/11.2/bin/evmd.bin

root 229692 1 0 14:06:46 - 0:02 /oracle/grid/crs/11.2/bin/cssdagent

oracle 233762 1 0 14:06:40 - 0:01 /oracle/grid/crs/11.2/bin/oraagent.bin

oracle 246226 250208 0 14:32:34 pts/0 0:00 grep crs

oracle 254218 1 0 14:06:53 - 0:01 /oracle/grid/crs/11.2/bin/diskmon.bin -d -f

root 258554 1 0 14:07:01 - 0:09 /oracle/grid/crs/11.2/bin/crsd.bin reboot

oracle 270612 1 0 14:07:28 - 0:03 /oracle/grid/crs/11.2/bin/oraagent.bin

REMARK: the database listeners are cluster resources and not database resources !
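In the ps examples above, an output that contains only the grep command itself means that nothing is running. A script has to discard that line before drawing a conclusion. The following is a minimal Python sketch of that filter; matching_processes is an illustrative name, and the check for a literal "grep" field is a simplifying assumption about the ps -ef layout.

```python
def matching_processes(ps_output, pattern):
    """Return the ps lines that mention pattern, excluding the grep command itself."""
    hits = []
    for line in ps_output.splitlines():
        if pattern not in line:
            continue
        if "grep" in line.split():
            continue  # this is the 'grep <pattern>' process, not a real match
        hits.append(line.strip())
    return hits


# The smon check from above: only the grep line is present, so nothing is running.
only_grep = "oracle 246196 250208 0 14:29:11 pts/0 0:00 grep smon"
print(matching_processes(only_grep, "smon"))
```

On the command line the same effect is often obtained with a pattern such as grep [s]mon, which does not match its own process entry.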

Here are a few more everyday startup and shutdown commands:

Other administration and maintenance guidelines

I. Managing with srvctl

1. Order for shutting down the RAC database services

[oracle@dbp ~]$ srvctl stop database -d RACwh

[oracle@dbp ~]$ srvctl stop asm -n RAC01

[oracle@dbp ~]$ srvctl stop asm -n RAC02

[oracle@dbp ~]$ srvctl stop nodeapps -n RAC01

[oracle@dbp ~]$ srvctl stop nodeapps -n RAC02

2. Order for starting the RAC database services

[oracle@RAC01 ~]$ srvctl start nodeapps -n RAC01

[oracle@RAC01 ~]$ srvctl start nodeapps -n RAC02

[oracle@RAC01 ~]$ srvctl start asm -n RAC01

[oracle@RAC01 ~]$ srvctl start asm -n RAC02

[oracle@RAC01 ~]$ srvctl start database -d RACwh

3. Other commands

Instance management

[oracle@RAC01 ~]$ srvctl status instance -d RACwh -i RACwh1

[oracle@RAC01 ~]$ srvctl stop instance -d RACwh -i RACwh1

[oracle@RAC01 ~]$ srvctl start instance -d RACwh -i RACwh1

Listener management

[oracle@RAC01 ~]$ srvctl status listener -n RAC01

[oracle@RAC01 ~]$ srvctl stop listener -n RAC01

[oracle@RAC01 ~]$ srvctl start listener -n RAC01

II. Managing with the CRS tools (crs_stat, crs_start, crs_stop)

[oracle@RAC01 ~]$ crs_stat -t

[oracle@RAC01 ~]$ crs_stat

[oracle@RAC01 ~]$ crs_start -all

[oracle@RAC01 ~]$ crs_stop -all

[oracle@RAC01 ~]$ crs_stop "ora.RACwh.db"

The crs_stat command has been deprecated since Oracle 11g, as its own help output makes clear:

[grid@RAC02 ~]$ crs_stat -h

This command is deprecated and has been replaced by 'crsctl status resource'

This command remains for backward compatibility only

Usage: crs_stat [resource_name [...]] [-v] [-l] [-q] [-c cluster_member]

crs_stat [resource_name [...]] -t [-v] [-q] [-c cluster_member]

crs_stat -p [resource_name [...]] [-q]

crs_stat [-a] application -g

crs_stat [-a] application -r [-c cluster_member]

crs_stat -f [resource_name [...]] [-q] [-c cluster_member]

crs_stat -ls [resource_name [...]] [-q]

Archive log configuration:

1. Create an ASM directory for each of the two instances

On either node, as the grid user:

# su - grid

[grid@oeltan1 ~]$ sqlplus "/as sysasm"

SQL> select name from V$asm_diskgroup;

NAME

--------------------------------------------------------------------------------

CRS

DATA

FRA

SQL> alter diskgroup FRA add directory '+FRA/ORCL';

SQL> alter diskgroup FRA add directory '+FRA/ORCL/ARCH1';

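Directories can also be created with asmcmd, which is run from the grid user's shell rather than from SQL*Plus:

[grid@oeltan1 ~]$ asmcmd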

ASMCMD> pwd

+FRA/ORCL

ASMCMD> mkdir ARCH2

2. Modify the archive parameters

On either instance, as the oracle user:

ALTER SYSTEM SET LOG_ARCHIVE_DEST_1='LOCATION=+FRA/ORCL/ARCH1' SCOPE=both SID='orcl1';

ALTER SYSTEM SET LOG_ARCHIVE_DEST_1='LOCATION=+FRA/ORCL/ARCH2' SCOPE=both SID='orcl2';
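With more than two instances, writing one ALTER SYSTEM statement per SID by hand gets error-prone. The following is a minimal Python sketch that generates the statements from a mapping of SID to ASM directory; archive_dest_statements is a hypothetical helper, and it only produces the SQL text for review, it does not connect to the database.

```python
def archive_dest_statements(dest_by_sid):
    """Build one ALTER SYSTEM statement per instance SID, in the form used above."""
    statements = []
    for sid, location in dest_by_sid.items():
        for value in (sid, location):
            if "'" in value:
                raise ValueError(f"unexpected quote in {value!r}")
        statements.append(
            f"ALTER SYSTEM SET LOG_ARCHIVE_DEST_1='LOCATION={location}' "
            f"SCOPE=both SID='{sid}';"
        )
    return statements


for stmt in archive_dest_statements({"orcl1": "+FRA/ORCL/ARCH1",
                                     "orcl2": "+FRA/ORCL/ARCH2"}):
    print(stmt)
```

Rejecting values that contain a single quote keeps a malformed SID or path from silently producing broken SQL.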

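3. Enable archive log mode. Run SHUTDOWN IMMEDIATE on both nodes; run the remaining statements on one node while the other instance stays down, then start the other instance:

SQL> SHUTDOWN IMMEDIATE;

SQL> STARTUP MOUNT;

SQL> ALTER DATABASE ARCHIVELOG;

SQL> ALTER DATABASE OPEN;

-- While the log mode is being changed on one instance, the other instance must not be open; otherwise the statement fails with ORA-01126: database must be mounted in this instance and not open in any

4. Check each instance: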

SQL> archive log list

Database log mode Archive Mode

Automatic archival Enabled

Archive destination +FRA/orcl/arch1

Oldest online log sequence 14

Next log sequence to archive 15

Current log sequence 15

SQL> archive log list

Database log mode Archive Mode

Automatic archival Enabled

Archive destination +FRA/orcl/arch2

Oldest online log sequence 6

Next log sequence to archive 7

Current log sequence 7
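The check in step 4 can also be automated by parsing the archive log list output. The following is a minimal Python sketch, assuming the English labels shown above; parse_archive_log_list and is_archiving are illustrative names.

```python
LABELS = (
    "Database log mode",
    "Automatic archival",
    "Archive destination",
    "Oldest online log sequence",
    "Next log sequence to archive",
    "Current log sequence",
)


def parse_archive_log_list(text):
    """Return a dict of label -> value for the lines printed by 'archive log list'."""
    info = {}
    for raw in text.splitlines():
        line = raw.strip()
        for label in LABELS:
            if line.startswith(label):
                info[label] = line[len(label):].strip()
                break
    return info


def is_archiving(info):
    """True if the database is in archive mode with automatic archival enabled."""
    return (info.get("Database log mode") == "Archive Mode"
            and info.get("Automatic archival") == "Enabled")
```

Running this against the output of each instance confirms both the log mode and that each instance points at its own archive directory.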

For a more detailed description, see:

http://blog.csdn.net/tianlesoftware/article/details/8435772